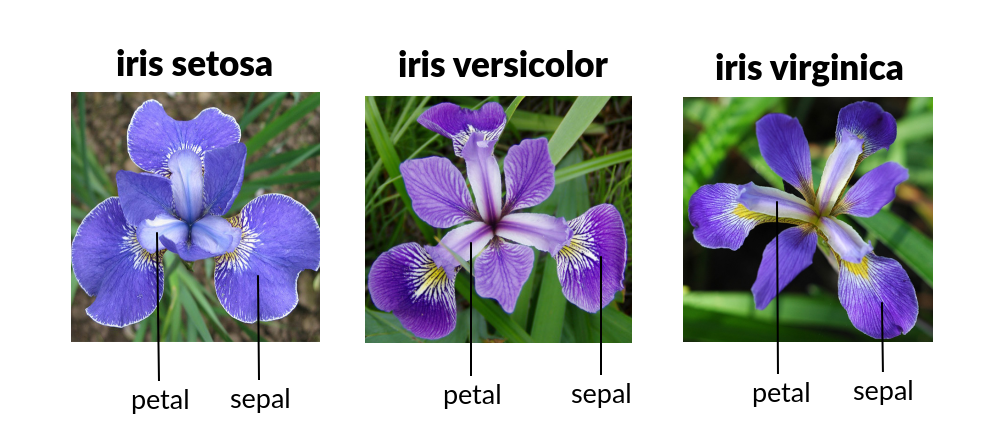

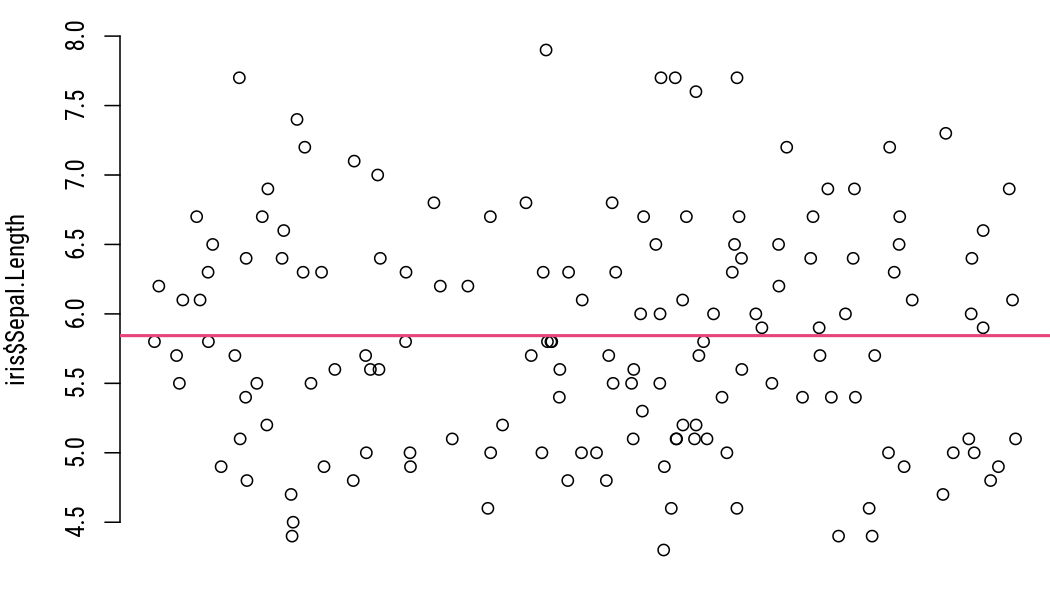

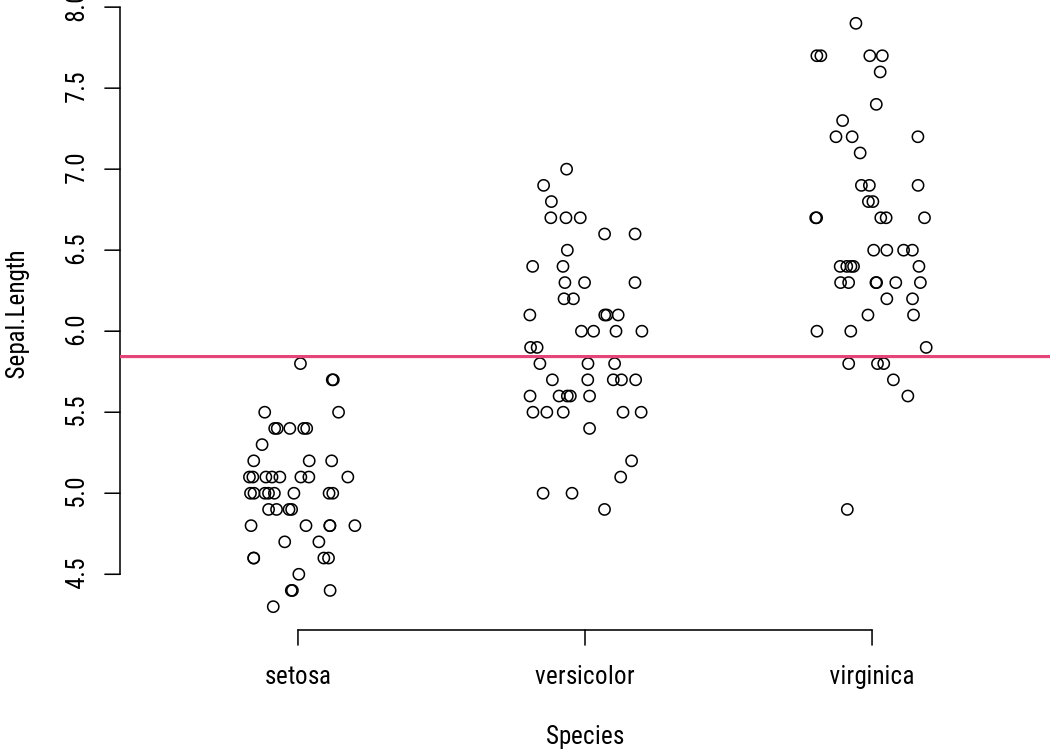

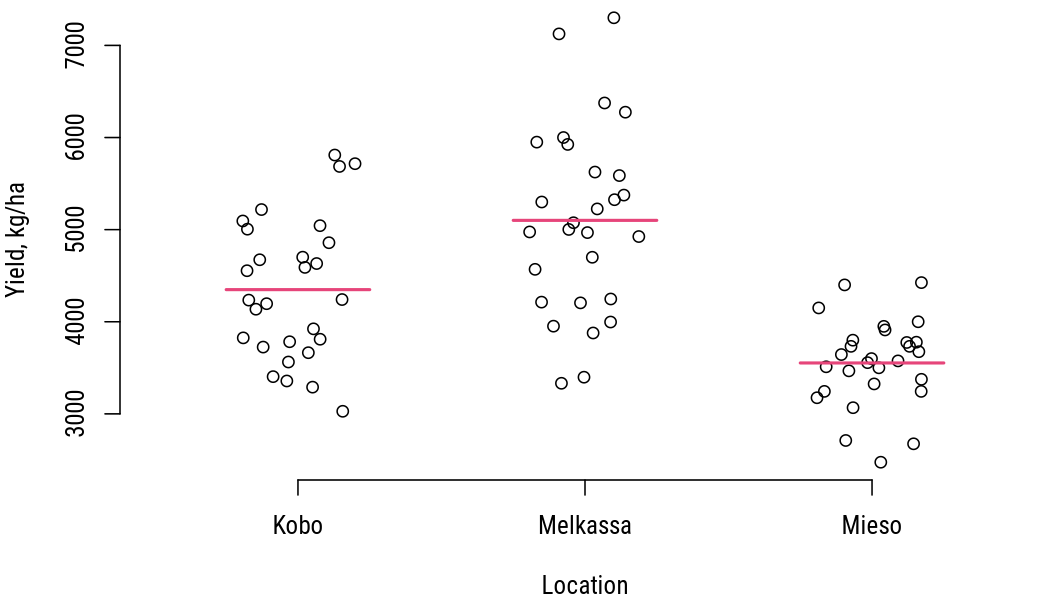

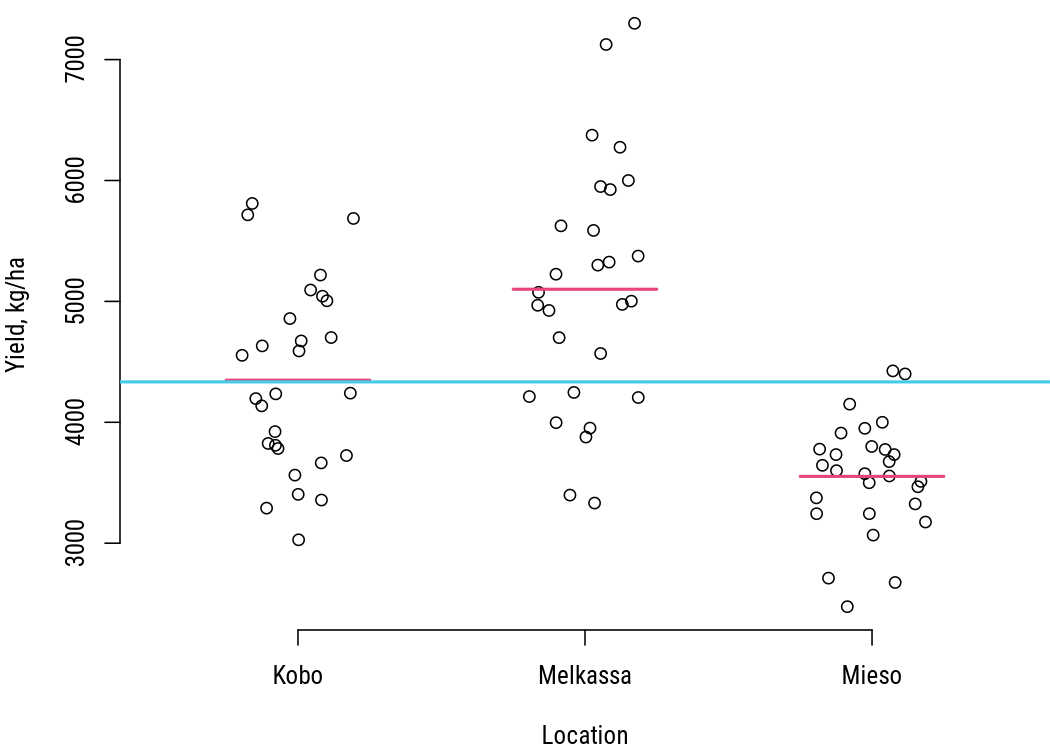

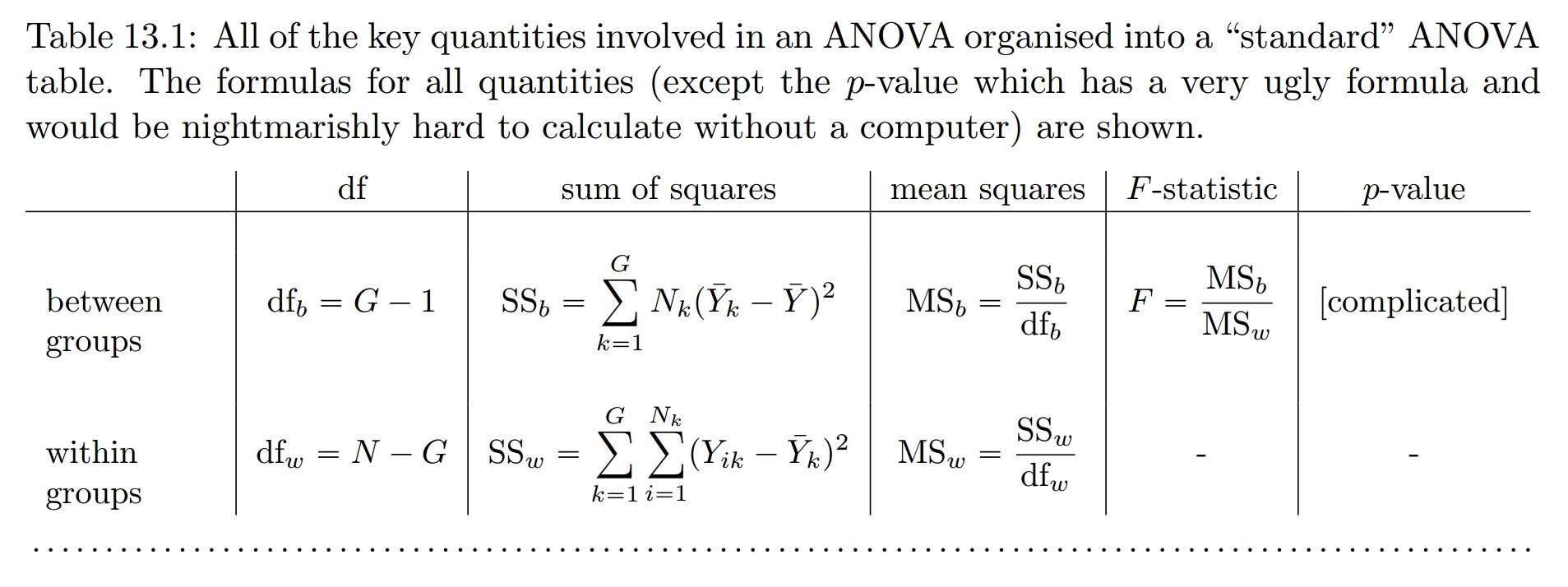

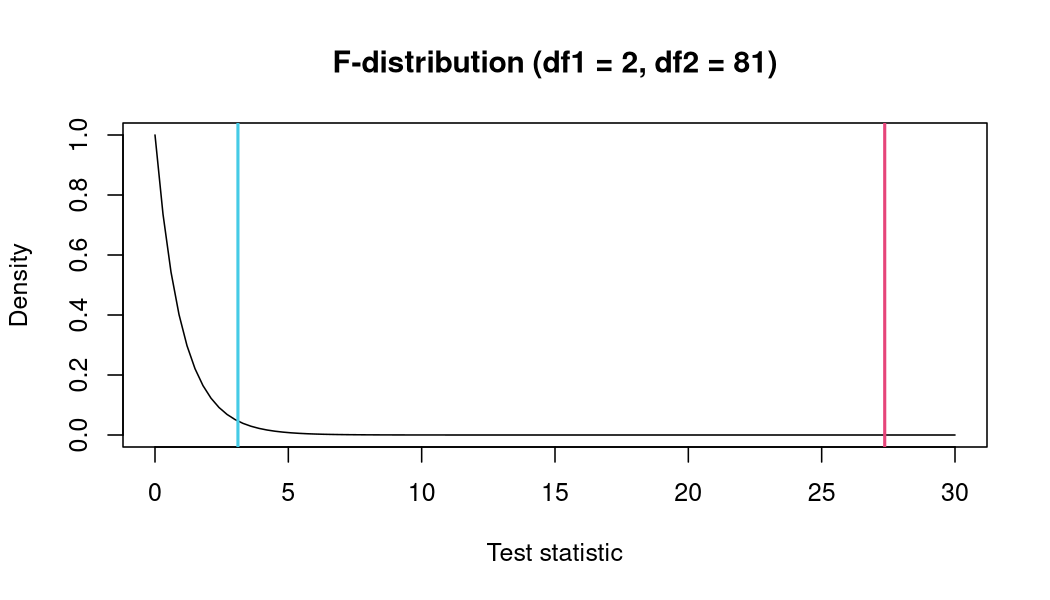

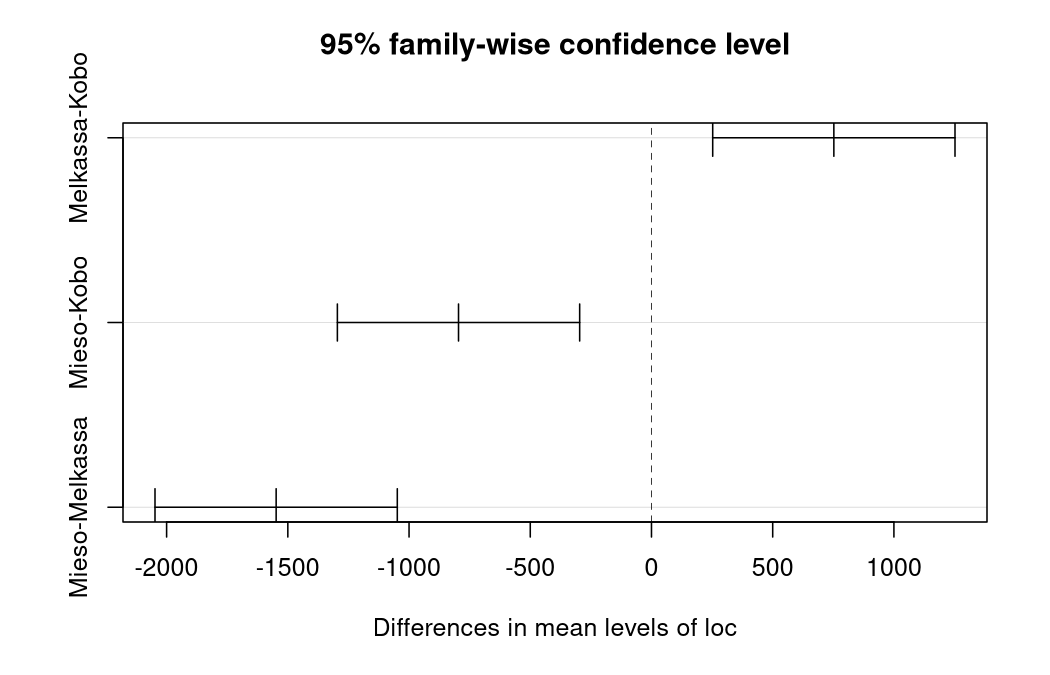

class: center, middle, inverse, title-slide # Analysis of variance ## Research methods ### Jüri Lillemets ### 2021-11-30 --- class: center middle clean # Are differences between groups higher than within groups? --- class: center middle inverse # Two sources of variation in data --- ## Why analysis of variance? Variance expresses mean squared deviation from average: `$$Var(X) = \frac{1}{N} \sum{^N_{i=1}{(X_i-\bar{X})^2}},$$` where `\(X_i\)` is the value of observation `\(i\)` and `\(\bar{X}\)` the mean value. --- Let's use data of some iris flowers for illustration.  .footnote[https://dodona.ugent.be/en/activities/779139786/] --- Variance is a measure of how different on average are **individual values from mean value**. <!-- --> -- These differences represent **variation** in data. ??? Average squared difference from the mean. --- We can actually separate variation in into two parts: `$$Var(Y) = \frac{1}{N} \sum^{G}_{j=1}{\sum^{N_k}_{i=1}{(Y_{ij} - \bar{Y})^2}},$$` where - `\(N\)` is the number of all observations, - `\(Y_{ij}\)` is the value of variable `\(Y\)` for observation `\(i\)` in group `\(j\)`, and - `\(\bar{Y}\)` the overall mean of variable `\(Y\)` of all observations in all samples. --- <!-- --> --- ## The two parts as a linear model We can also express each value as a model that distinguishes between the two sources of variation: `$$Y_{ij}=\bar{x}+\alpha_{j}+\varepsilon_{ij},$$` where - `\(Y_{ij}\)` is the value of `\(Y\)` for observation `\(i\)` from group `\(j\)`, - `\(\bar{x}\)` the overall mean, - `\(\alpha_{i}\)` the difference between mean of group `\(j\)` and overall mean `\(\bar{x}\)`, and - `\(\varepsilon_{ij}\)` the difference between group mean and value for observation `\(i\)` from factor `\(j\)`. --- Total variation can be expressed as the deviation of each observation from the mean. `$$Y_{ij}= \bar{x} + \varepsilon_{i}$$` | |Species | Sepal.Length| x| epsilon_i| |:---|:----------|------------:|--------:|----------:| |1 |setosa | 5.1| 6.022222| -0.9222222| |2 |setosa | 4.9| 6.022222| -1.1222222| |3 |setosa | 4.7| 6.022222| -1.3222222| |51 |versicolor | 7.0| 6.022222| 0.9777778| |52 |versicolor | 6.4| 6.022222| 0.3777778| |53 |versicolor | 6.9| 6.022222| 0.8777778| |101 |virginica | 6.3| 6.022222| 0.2777778| |102 |virginica | 5.8| 6.022222| -0.2222222| |103 |virginica | 7.1| 6.022222| 1.0777778| --- We can also separate factor `\(\alpha\)` from total variation: `$$Y_{ij}=\bar{x}+\alpha_{j}+\varepsilon_{ij},$$` - `\(Y_{ij}\)` is the value for observation `\(i\)` from group `\(j\)`, - `\(\bar{x}\)` the overall mean, - `\(\alpha_{i}\)` the difference between mean of group `\(j\)` and overall mean `\(\bar{x}\)`, and - `\(\varepsilon_{ij}\)` the difference between group mean and value for observation `\(i\)` from factor `\(j\)`. --- class: center middle `$$Y_{ij}=\bar{x}+\alpha_{j}+\varepsilon_{ij}$$` | |Species | Sepal.Length| x| epsilon_i| alpha_j| epsilon_ij| |:---|:----------|------------:|--------:|----------:|----------:|----------:| |1 |setosa | 5.1| 6.022222| -0.9222222| -1.1222222| 0.2000000| |2 |setosa | 4.9| 6.022222| -1.1222222| -1.1222222| 0.0000000| |3 |setosa | 4.7| 6.022222| -1.3222222| -1.1222222| -0.2000000| |51 |versicolor | 7.0| 6.022222| 0.9777778| 0.7444444| 0.2333333| |52 |versicolor | 6.4| 6.022222| 0.3777778| 0.7444444| -0.3666667| |53 |versicolor | 6.9| 6.022222| 0.8777778| 0.7444444| 0.1333333| |101 |virginica | 6.3| 6.022222| 0.2777778| 0.3777778| -0.1000000| |102 |virginica | 5.8| 6.022222| -0.2222222| 0.3777778| -0.6000000| |103 |virginica | 7.1| 6.022222| 1.0777778| 0.3777778| 0.7000000| --- For `$$Y_{ij}=\bar{x}+\alpha_{j}+\varepsilon_{ij},$$` there are two sources of variation: - variation **between groups**, expressed by `\(\alpha_{j}\)` - random variation **within a group**, expressed by `\(\varepsilon_{ij}\)` -- The idea behind ANOVA is comparing these two sources of variation. If the variation between groups is much higher than variation within groups, we can say that the groups are different not only in our sample but also in population. --- class: center middle inverse # Comparing the two sources of variation --- ## One way ANOVA Assumptions: - normality within each group - independence - homogeneity of variance (only for Welch's test) ??? I will discuss the homogeneity of variance later. --- Let's use data set "Multi-environment trial of sorghum at 3 locations across 5 years". We wish to compare yields in different locations in 2004. .pull-left[ | |gen |trial |env | yield| year|loc | |:---|:---|:-----|:---|-----:|----:|:--------| |150 |G01 |T1 |E09 | 6275| 2004|Melkassa | |151 |G02 |T1 |E09 | 6375| 2004|Melkassa | |152 |G03 |T1 |E09 | 5925| 2004|Melkassa | |153 |G04 |T1 |E09 | 7125| 2004|Melkassa | |154 |G05 |T1 |E09 | 6000| 2004|Melkassa | |155 |G06 |T1 |E09 | 5950| 2004|Melkassa | ] .pull-right[ |loc | Freq| |:--------|----:| |Kobo | 28| |Melkassa | 28| |Mieso | 28| ] --- ### Hypotheses Are mean values equal? `\(H_0: \bar{x}_1 = \bar{x}_2 = \bar{x}_3\)` `\(H_1: \bar{x}_1 = \bar{x}_2 \neq \bar{x}_3\)` or `\(\bar{x}_1 \neq \bar{x}_2 \neq \bar{x}_3\)` -- In other words, our `\(H_1\)` is that at least one group mean is different from others. --- Is mean yield different among locations? Does location have an effect on yield? <!-- --> ??? Do we even need ANOVA? Yes, because this is only a sample. -- Let's set significance level `\(\alpha = 0.05\)`. --- ### Test statistic `\(F\)` Test statistic `\(F\)` is calculated as `\(F = MSA / MSE,\)` where `\(MSA\)` expresses variation between group and `\(MSE\)` represents random variaton. --- Sums of squares of variable `\(y\)` for observations `\(i\)` in groups `\(j\)`: - **within-group** sum of squares, i.e. sum of squares of errors (SSE) `\(SSE=\sum_{j=1}^{k}\sum_{i=1}^{n} (y_{ij}-\overline{y_{j}})^{2}\)`, `\(df = n - k\)` - **between group** sum of squares, i.e. sum of squares between groups (SSA) `\(SSA=\sum_{j=1}^{k} (\overline{y_{j}}-\overline{y})^{2}\)`, `\(df = k - 1\)` - **total** sum of squares (SST=SSE+SSA) `\(SST=\sum_{j=1}^{k}\sum_{i=1}^{n} (y_{ij}-\overline{y})^{2}\)`, `\(df = n - 1\)` --- We can use these variations to find `\(MSA\)` and `\(MSE\)` to calculate `\(F = MSA / MSE\)` as follows: - mean squares for SSE (MSE) `\(MSE = SSE / (n - k)\)` - mean squares for SSA (MSA) `\(MSA = SSA / (k - 1)\)` --- > Why are we taking **sums of squares** instead of using just sums? --- If we simply sum the differences, the result would be zero, i.e. no variation. | |gen |trial |env | yield| year|loc | x| epsilon_i| |:---|:---|:-----|:---|-----:|----:|:--------|--------:|----------:| |161 |G12 |T1 |E09 | 5300| 2004|Melkassa | 4414.667| 885.3333| |172 |G23 |T2 |E09 | 4569| 2004|Melkassa | 4414.667| 154.3333| |183 |G06 |T1 |E10 | 3375| 2004|Mieso | 4414.667| -1039.6667| ```r (sorg3$yield - mean(sorg3$yield)) %>% sum %>% round ``` ``` ## [1] 0 ``` ```r (sorg3$yield - mean(sorg3$yield))^2 %>% sum %>% round ``` ``` ## [1] 1888541 ``` ??? Does it have to be this complicated? --- The test statistic `\(F = MSA / MSE\)` simply compares within-group differences to between group differences. <!-- --> --- class: middle .center[] .footnote[Navarro D.J., Foxcroft D.R. (2018). Learning statistics with jamovi: a tutorial for psychology students and other beginners. Danielle J. Navarro and David R. Foxcroft. doi:10.24384/HGC3-7P15] --- ### Test statistic `\(F\)` on F-distribution Value of test statistic is 27.364. Test statistic is evaluated on t-distribution with df `\(k - 1\)` and `\(n - k\)`. <!-- --> --- P-value is 0 and we chose significance level to be `\(\alpha = 0.05\)`. > Is mean yield different among locations? > Does location have an effect on yield? --- class: center middle inverse # Post-hoc tests and checking homogeneity of variance --- ## Tukey HSD We found that at least one group is different from others. But which one(s)? -- We can use post-hoc tests to see pairwise differences. An example test is **Tukey HSD** (hhonest significant differences). ``` ## Tukey multiple comparisons of means ## 95% family-wise confidence level ## ## Fit: aov(formula = yield ~ loc, data = sorg) ## ## $loc ## diff lwr upr p adj ## Melkassa-Kobo 752.2500 252.5353 1251.9647 0.0016007 ## Mieso-Kobo -795.9286 -1295.6433 -296.2138 0.0007997 ## Mieso-Melkassa -1548.1786 -2047.8933 -1048.4638 0.0000000 ``` --- We can also plot differences in means along with their **confidence intervals**. <!-- --> ??? All CIs are outside 0. --- ## Levene's test of homogeneity of means For each observation we 1. calculate its difference from its group mean, and then 2. use ANOVA to test if these within-group differences are different. -- `\(H_0: s_1 = s_2 = s_3\)` `\(H_1: s_1 = s_2 \neq s_3\)` or `\(s_1 \neq s_2 \neq s_3\)` If we fail to reject `\(H_0\)` (p-value is `\(\ge \alpha\)`), then we conclude that all groups have the same variance. --- ``` ## Levene's Test for Homogeneity of Variance (center = median) ## Df F value Pr(>F) ## group 2 6.4342 0.00255 ** ## 81 ## --- ## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1 ``` -- > Are variances homogenous? -- > What to do if they are not? --- class: inverse --- ## Practice 1. Download the data set `chickwts` from the course notes. 2. Go to cloud.jamovi.org. 3. Open the data set in Jamovi. > Are the weights normally distributed in each diet? > Are the samples independent? > Are variances of diets homogenous? > Does diet have an impact on weight? --- class: inverse