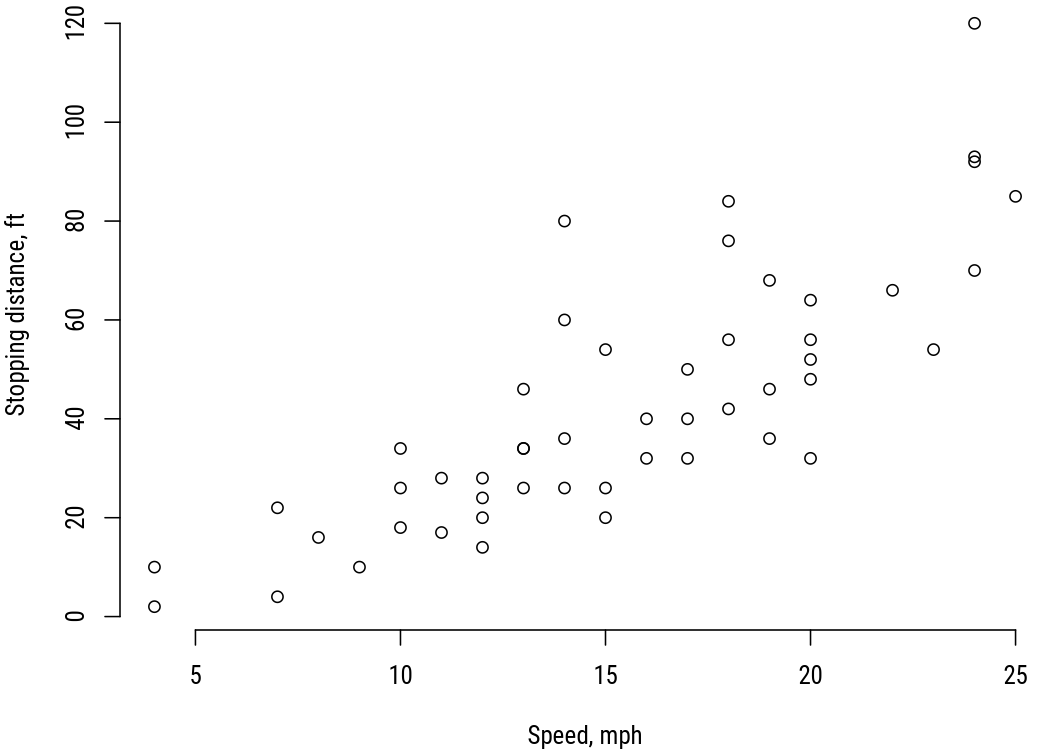

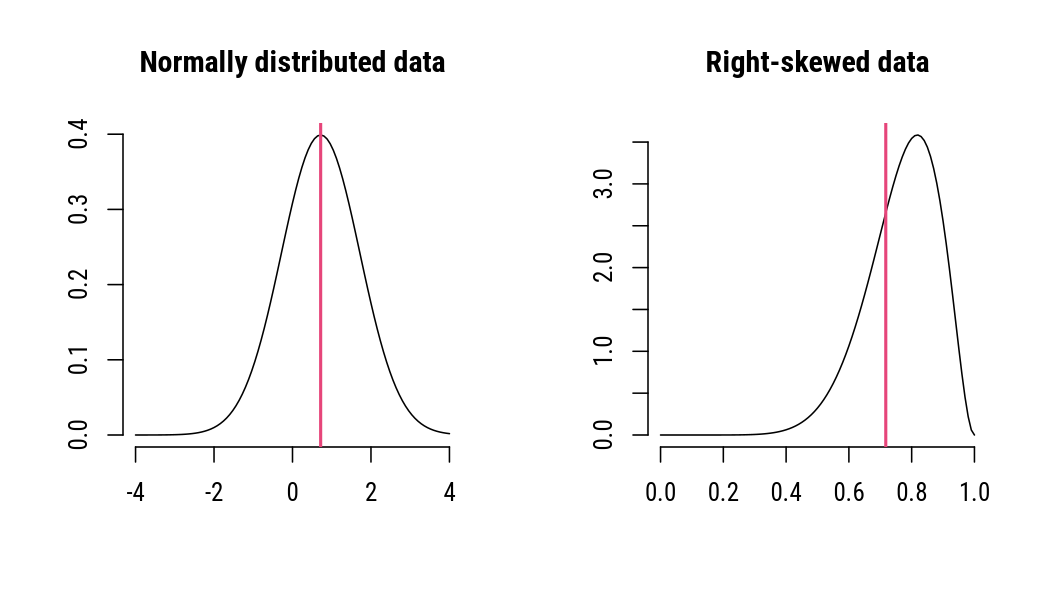

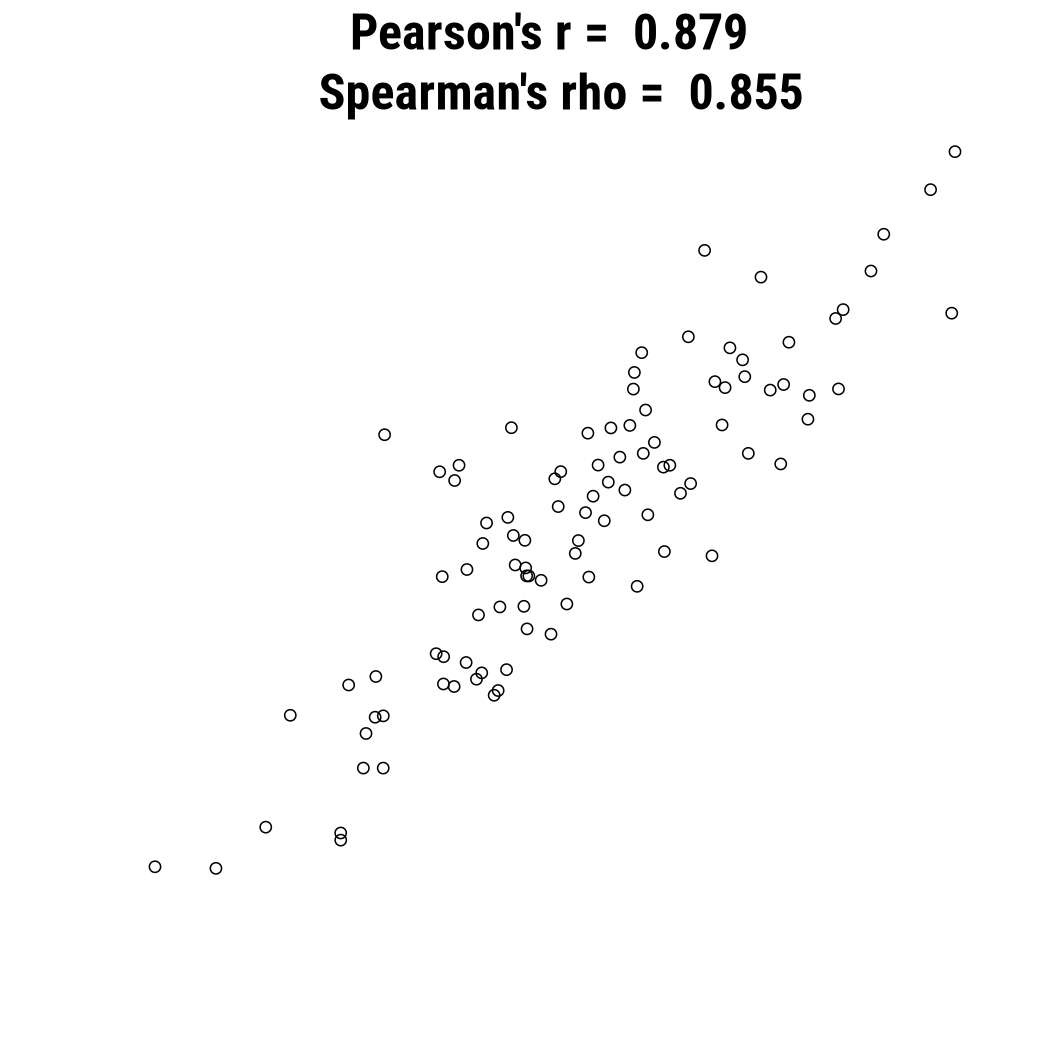

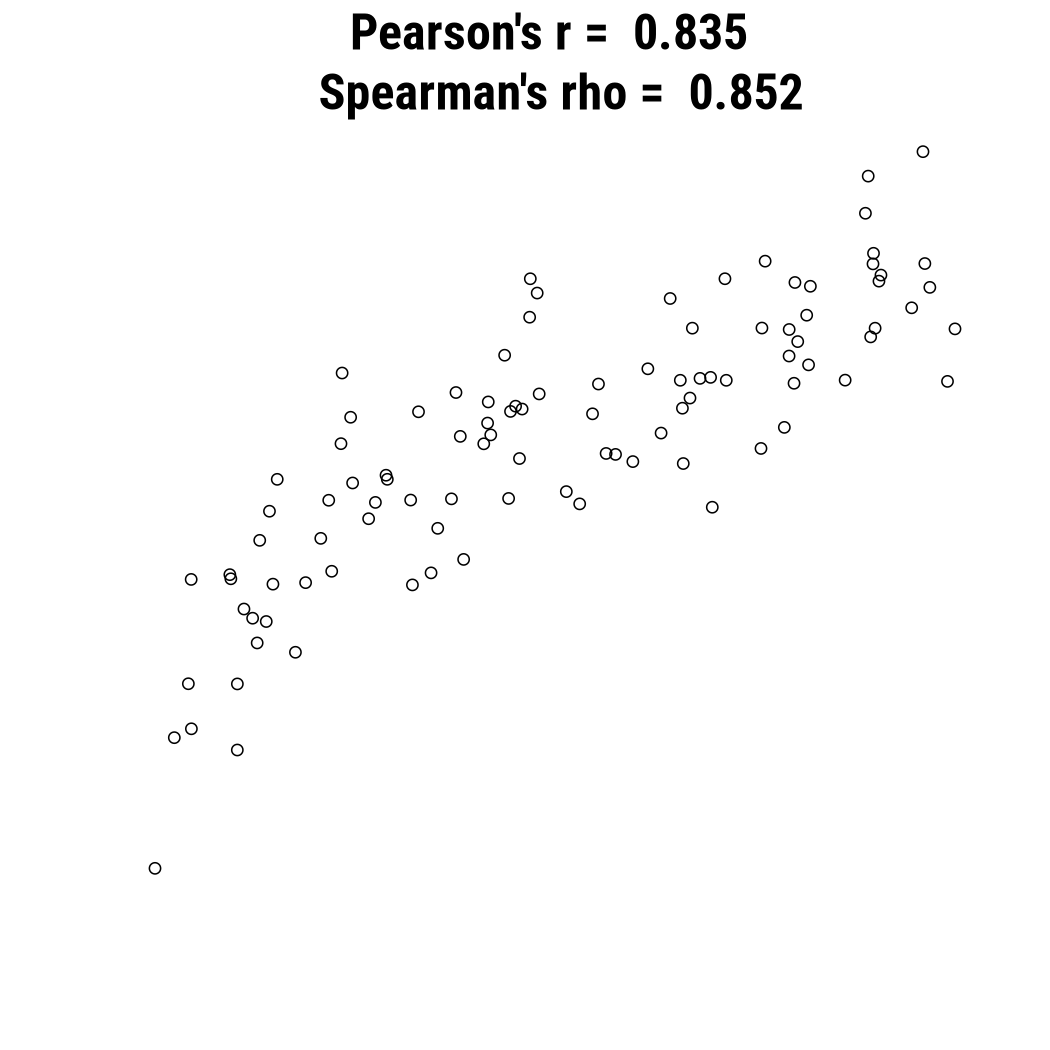

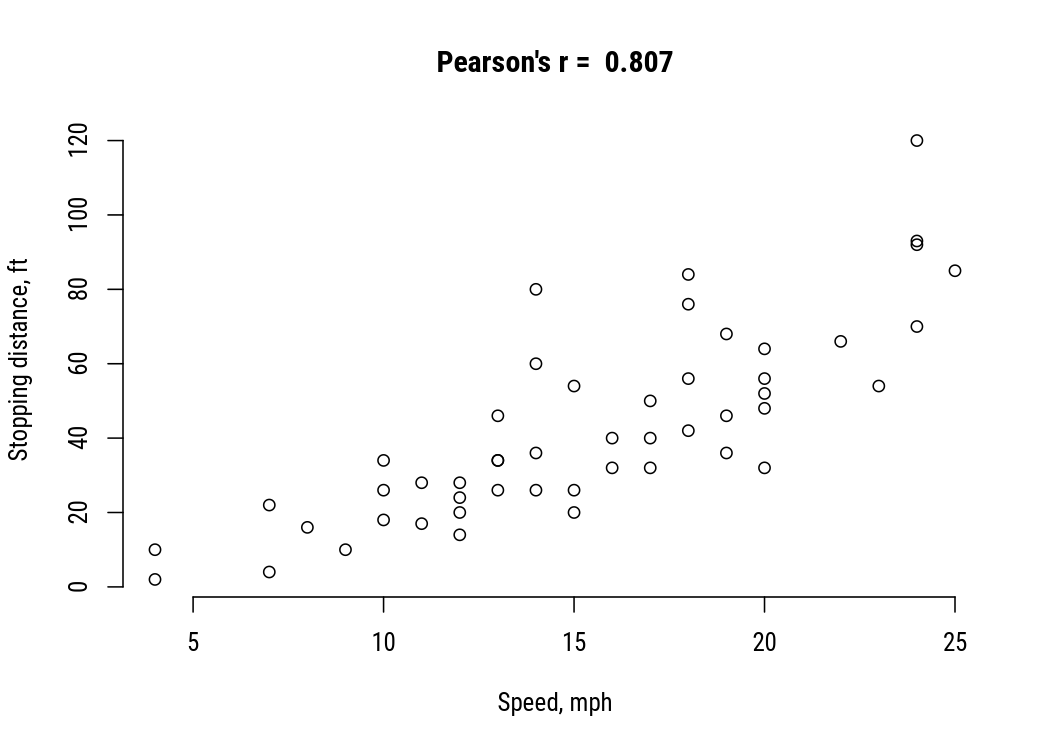

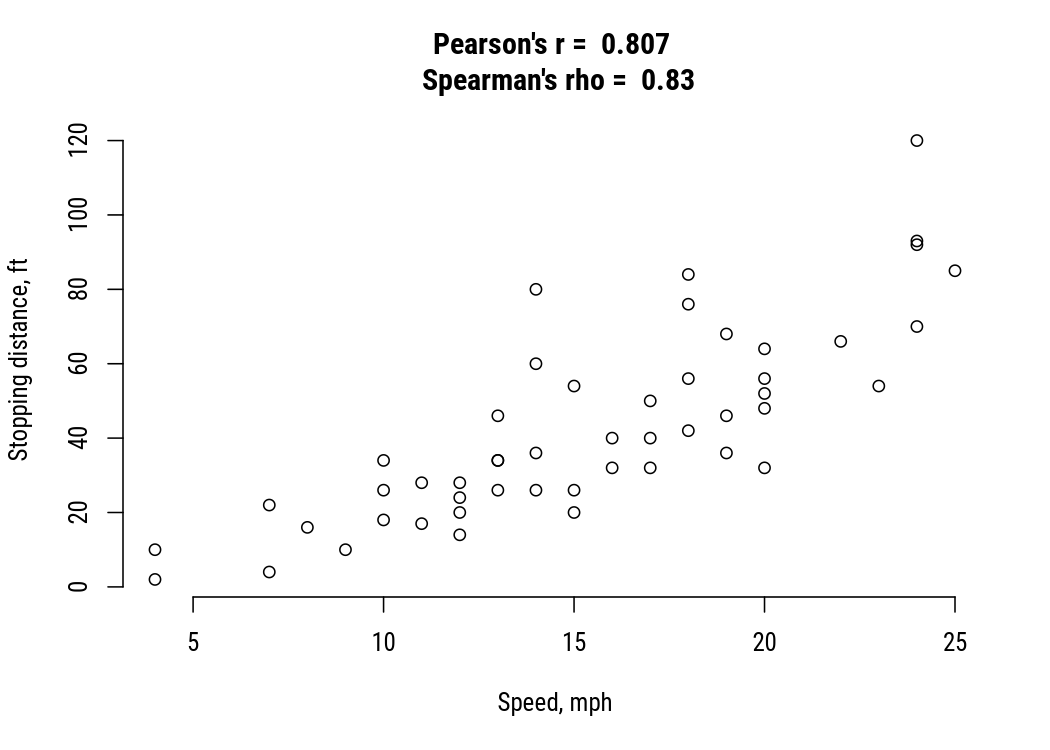

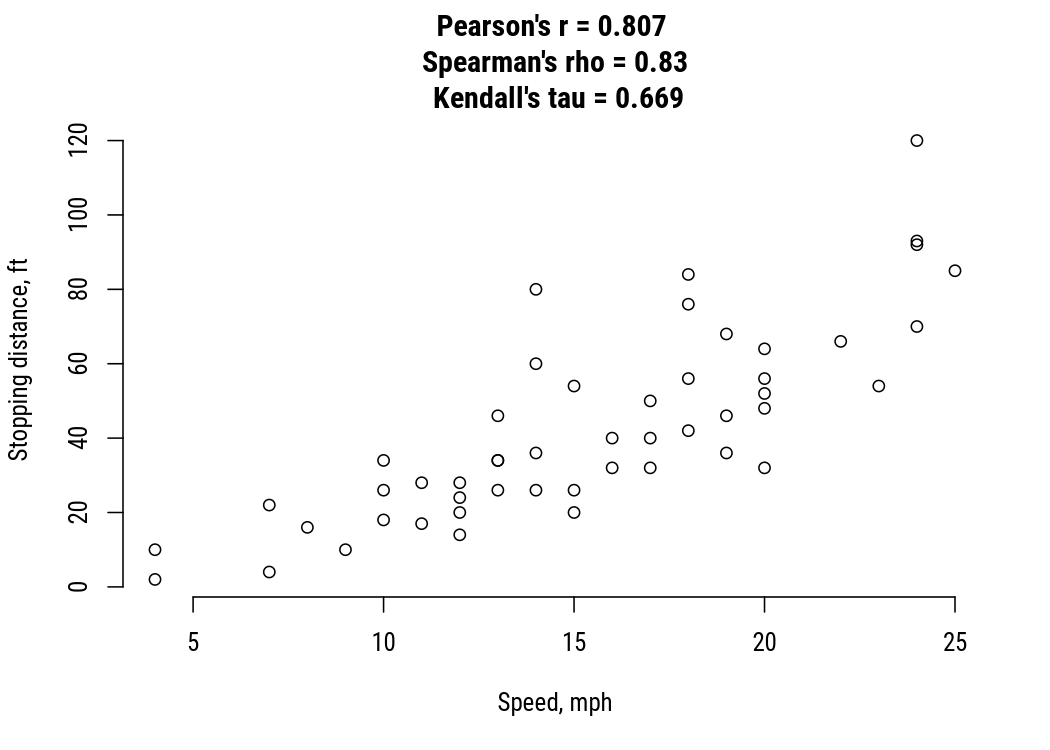

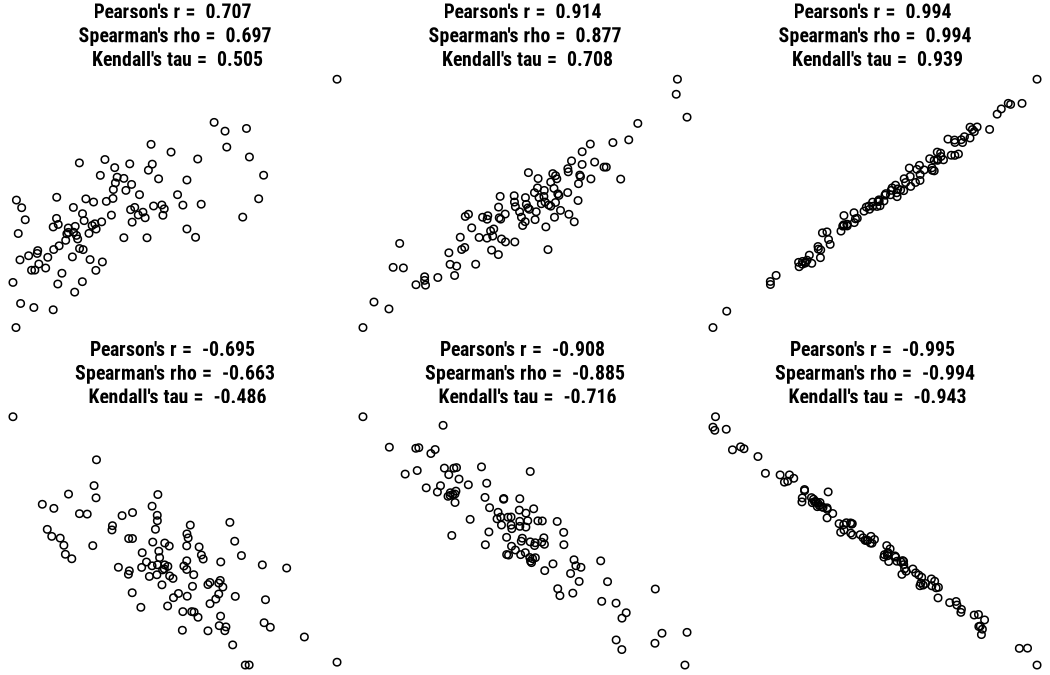

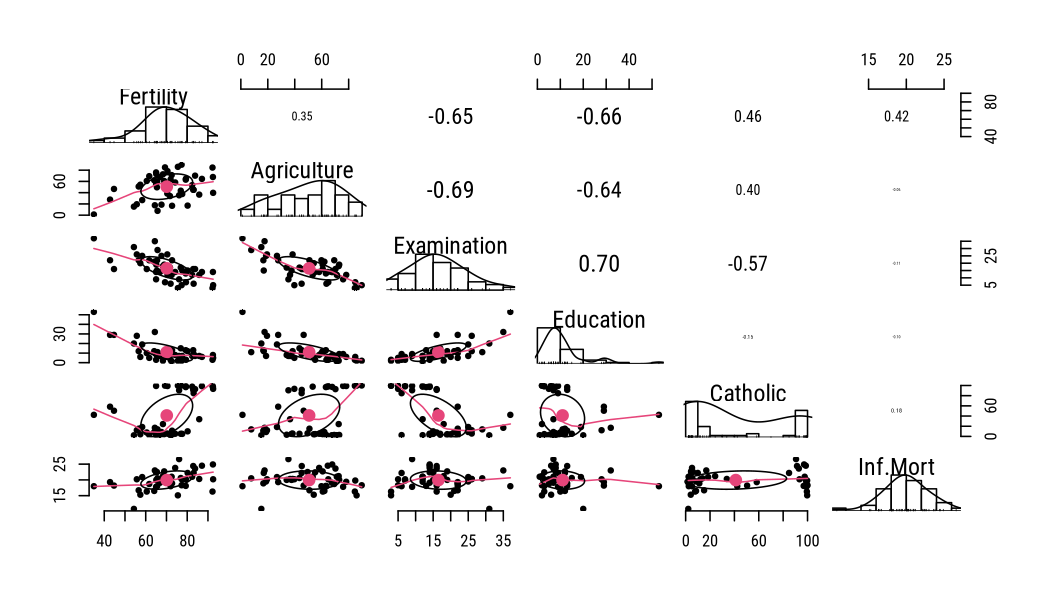

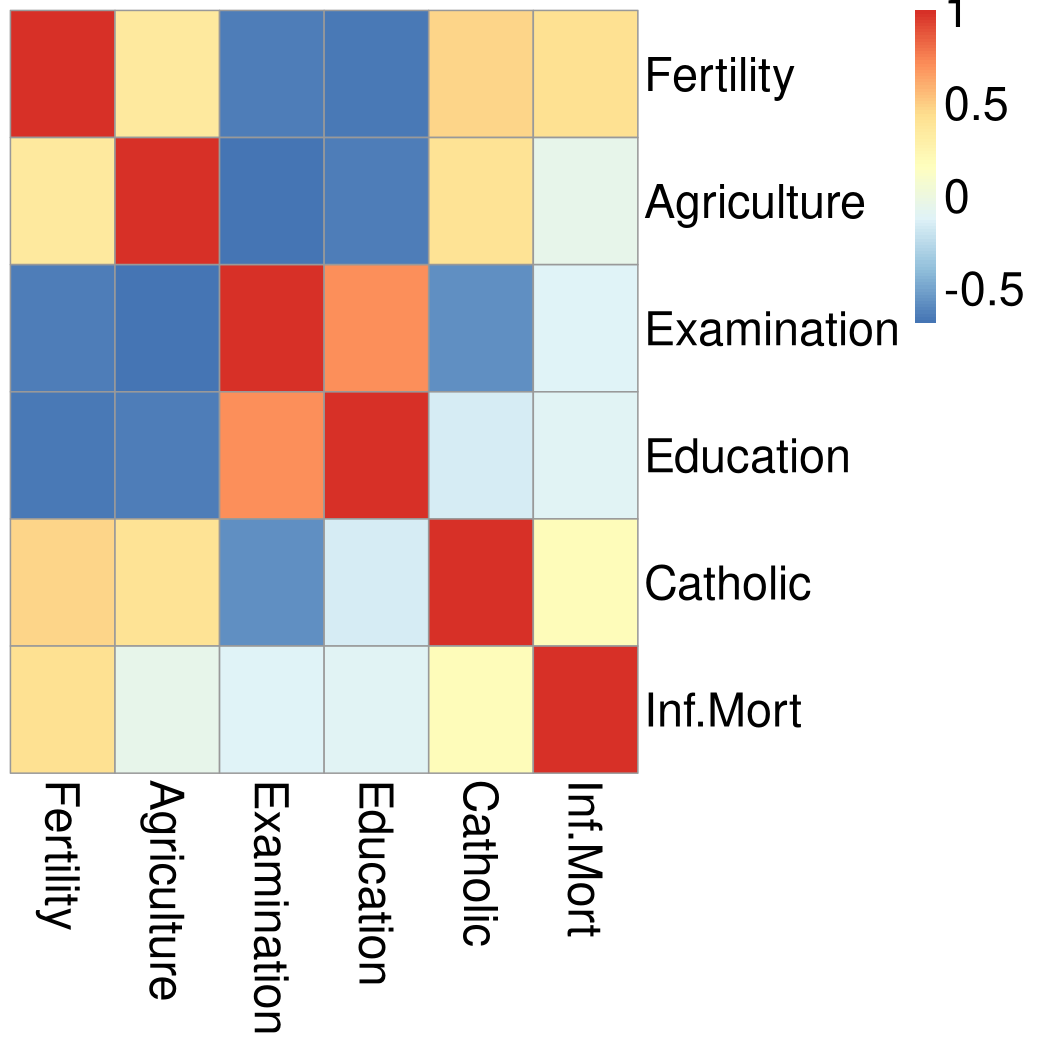

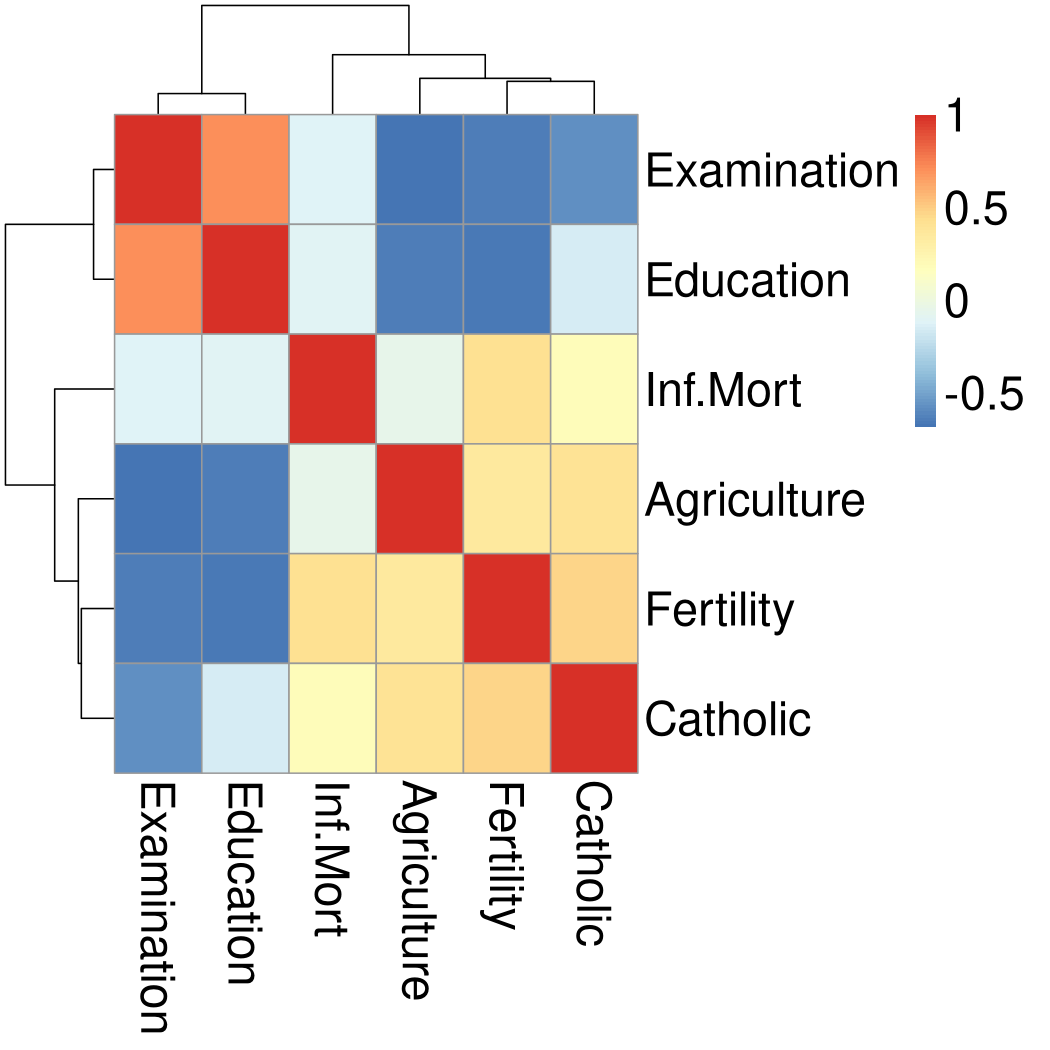

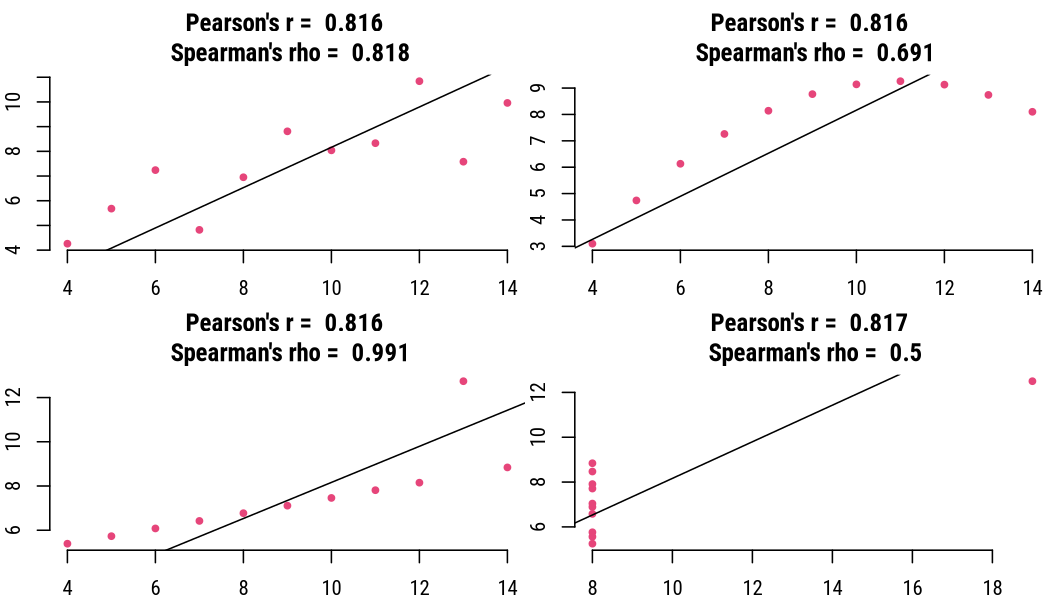

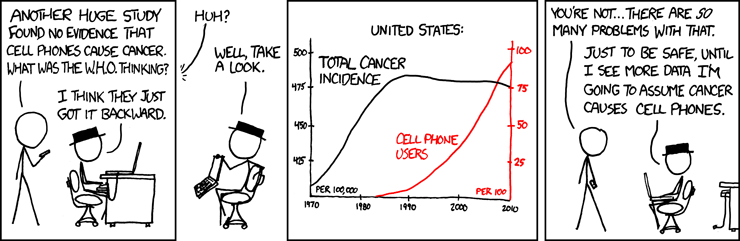

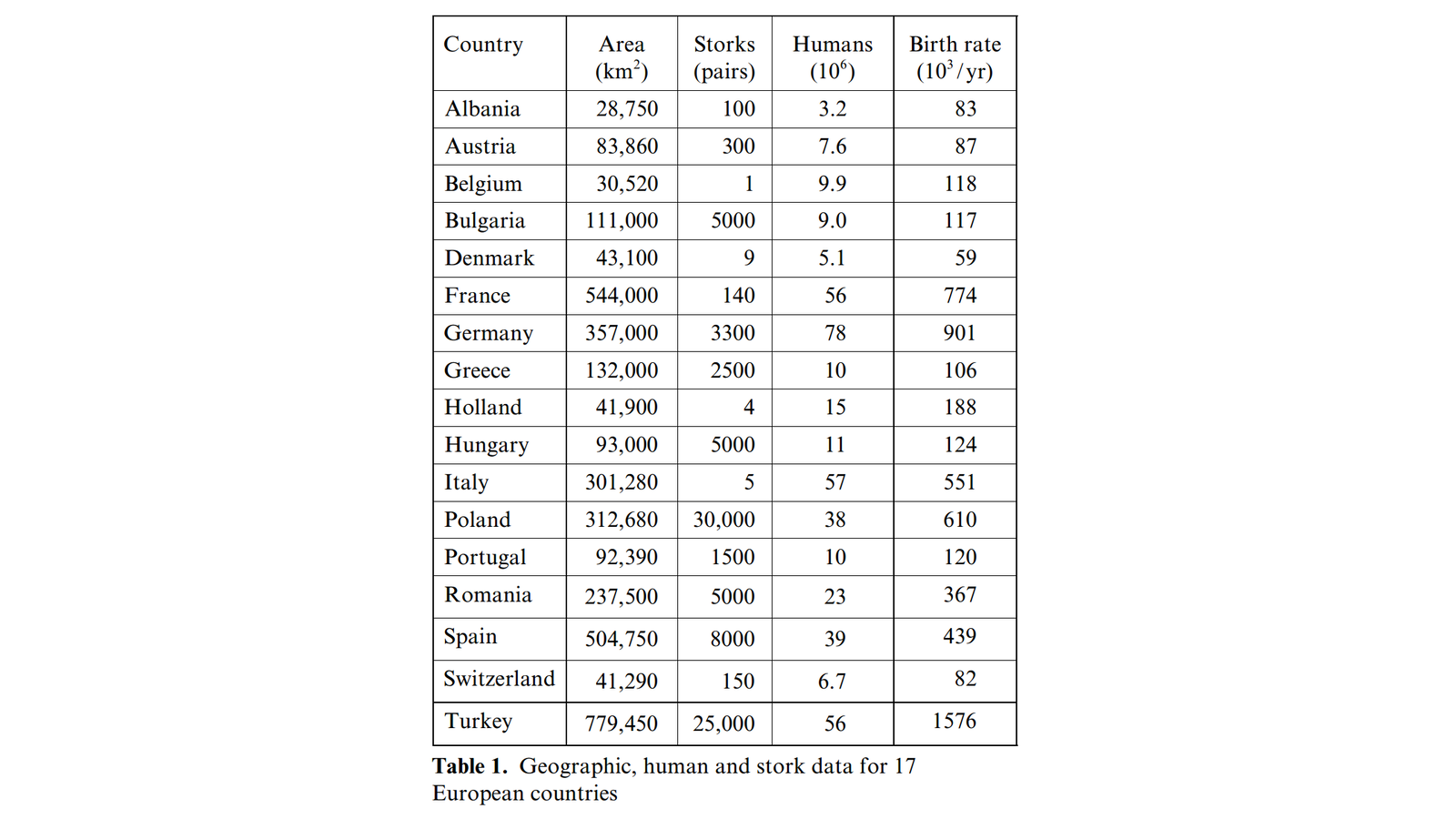

class: center, middle, inverse, title-slide # Correlation analysis ## Research methods ### Jüri Lillemets ### 2021-12-01 --- class: center middle clean # How are two variables associated? --- class: center middle inverse # Covariation --- When we want to understand how two variables are associated, then we need to examine how they **vary** together. -- So we need to estimate their **covariation**. -- **Covariance** between variables `\(x\)` and `\(y\)` can be calculated as follows: `$$Cov(x,y) = \frac{1}{n-1} \sum^n_{i=1}(x_i - \bar{x})(y_i - \bar{y})$$` --- Let's look at data on "Speed and Stopping Distances of Cars". | speed| dist| |-----:|----:| | 4| 2| | 4| 10| | 7| 4| | 7| 22| | 8| 16| | 9| 10| | 10| 18| | 10| 26| | 10| 34| | 11| 17| --- <!-- --> ??? Draw lines of possible relationships. --- The value of covariance is not very meaningful itself and can not be interpreted. | | speed| dist| |:-----|---------:|--------:| |speed | 27.95918| 109.9469| |dist | 109.94694| 664.0608| -- We need something else ... --- class: center middle inverse # Correlation coefficients --- There are several different ways to calculate correlation but they all have the same interpretation: - Minimum value is -1 and maximum value is 1. - Value of 0 indicates lack of any correlation. - Value of -1 or 1 indicates perfect correlation. - Negative value indicates inverse (negative) relationship, positive value direct (positive) relationship. --- ## Parametric and nonparametric correlation We can distinguish between two types of correlation coefficients: **parametric** and **nonparametric**. -- When we use **raw values**, then - we use differences from **mean**, - which is a **parametric** method and - we measure **linearity** of association. -- When we use **ranking of values**, then - we use **ranks**, - which is a **nonparametric** method and - we measure **monotonicity** of association. --- What is the difference in practice? Parametric correlation - uses more information and is thus **more powerful**, - is limited to **normally distributed interval or ratio data** and - measures the **linearity and not monotonicity** of association. ??? Why do we say that parametric correlation uses more information? --- > Why do we say "parametric" when we use mean? -- <!-- --> --- ## Linearity and monotonicity .pull-left[ Monotonically increasing linear relationship <!-- --> ] -- .pull-right[ Monotonically increasing non-linear relationship <!-- --> ] --- Correlation does not tell us all about the underlying data. This is why you should create scatterplots!  --- ## Pearson’s correlation coefficient A **parametric** method that evaluates **linearity** of an association. The calculation assumes - **interval or ratio** data, - **normally distributed** data, - **linearity** of relationship, and - a lack of substantial **outliers**. --- *Pearson's `\(r\)`* for association between variables `\(x\)` and `\(y\)` can be calculated as follows: `$$r = \frac{\sum_{i=1}^{n}(x_i-\bar{x})(y_i-\bar{y})}{\sum_{i=1}^{n}(x_i-\bar{x})^2 \sum_{i=1}^{n}(y_i-\bar{y})^2}$$` Essentially, we compare differences from mean value for values of each variable. --- <!-- --> --- ## Spearman rank-order correlation coefficient A **nonparametric** method that evaluates **monotonicity** of an association. The calculation assumes - **ordinal**, interval or ratio data, and - **monotonicity** of association. --- *Spearman's `\(\rho\)`* for association between variables `\(x\)` and `\(y\)` can be calculated as follows: `$$\rho = 1 - \frac{6\sum (R(x_{i})-R(y_{i}))^{2}}{n(n^{2}-1)}$$` Simply put, we compare ranks of values from each group. --- What are ranks? .pull-left[ Raw values. | speed| dist| |-----:|----:| | 4| 2| | 4| 10| | 7| 4| | 7| 22| | 8| 16| | 9| 10| | 10| 18| | 10| 26| | 10| 34| | 11| 17| ] .pull-right[ Ranks of raw values | speed| dist| |-----:|----:| | 1.5| 1.0| | 1.5| 3.5| | 3.5| 2.0| | 3.5| 8.0| | 5.0| 5.0| | 6.0| 3.5| | 8.0| 7.0| | 8.0| 9.0| | 8.0| 10.0| | 10.0| 6.0| ] --- <!-- --> --- ## Kendall rank correlation Equivalent to Spearman's rank-order correlaton coefficient. A **nonparametric** method that evaluates **monotonicity** of an association. The calculation assumes - **ordinal**, interval or ratio data, and - **monotonicity** of association. --- *Kendall's `\(\tau\)`* for association between variables `\(x\)` and `\(y\)` can be calculated as follows: `$$\tau = \frac{n_c - n_d}{\frac{1}{2} n (n-1)}$$` We are essentially evaluating if rankings of `\(x\)` and `\(y\)` are similar when we compare all observations pairwise. --- <!-- --> --- ### Spearman's `\(\rho\)` and Kendall's `\(\tau\)` As opposed to Kendall's `\(\tau\)`, Spearman's `\(\rho\)` is - **more simple** to compute, - less suitable for **small sample sizes** and - can not be directly **interpreted**. --- ## Size and direction of coefficients <!-- --> --- ## Interpretation The value of a correlation coefficient can be interpreted by: - its sign that indicates if a correlation is **positive or negative**, - its absolute size to determine the **strength of the relationship**, and - its **statistical significance**. --- The interpretation of a correlation coefficient depends on particular variables and research area. | Correlation | Interpretation | | ------------ | -------------------- | | -1.0 to -0.9 | Very strong negative | | -0.9 to -0.7 | Strong negative | | -0.7 to -0.4 | Moderate negative | | -0.4 to -0.2 | Weak negative | | -0.2 to 0 | Negligible negative | | 0 to 0.2 | Negligible positive | | 0.2 to 0.4 | Weak positive | | 0.4 to 0.7 | Moderate positive | | 0.7 to 0.9 | Strong positive | | 0.9 to 1.0 | Very strong positive | ??? E.g. physics and social sciences --- ## Statistical significance We can calculate the statistical significance for correlation coefficients using the following hypotheses: `\(H_0:\)` Variables are not associated, `\(H_1:\)` Variables are associated. -- > Can we say that the correlation also exists in population if we reject `\(H_0\)`? --- P-value for Pearson's `\(r\)` correlation coefficient can be found by calculating the probability of t-statistic on t-distribution: `$$t=r\sqrt{n-2}/\sqrt{1-r^{2}}$$` ??? The higher the absolute value of correlation coefficient and the more observations it is based on, the more likely is the coefficient generalizable to population. --- class: center middle inverse # Correlation in practice --- ## Correlation matrices and heatmaps We are rarely interested the correlation coefficient between two variables. Correlaton analysis is more useful when we wish to evaluate a lot of associations easily. -- ***Correlation matrices*** and ***heatmaps*** can be used to quickly find patterns in data. This is particularly useful for data sets with large number of variables. ??? Easier than e.g. doing a lot of tests. --- Let's look at "Swiss Fertility and Socioeconomic Indicators (1888) Data". | | Fertility| Agriculture| Examination| Education| Catholic| Inf.Mort| |:------------|---------:|-----------:|-----------:|---------:|--------:|--------:| |Courtelary | 80.2| 17.0| 15| 12| 9.96| 22.2| |Delemont | 83.1| 45.1| 6| 9| 84.84| 22.2| |Franches-Mnt | 92.5| 39.7| 5| 5| 93.40| 20.2| |Moutier | 85.8| 36.5| 12| 7| 33.77| 20.3| |Neuveville | 76.9| 43.5| 17| 15| 5.16| 20.6| |Porrentruy | 76.1| 35.3| 9| 7| 90.57| 26.6| |Broye | 83.8| 70.2| 16| 7| 92.85| 23.6| |Glane | 92.4| 67.8| 14| 8| 97.16| 24.9| |Gruyere | 82.4| 53.3| 12| 7| 97.67| 21.0| |Sarine | 82.9| 45.2| 16| 13| 91.38| 24.4| --- ### Pairs plot This includes simply pairwise plots between all variables. <!-- --> --- ### Correlation matrix .small[ | | Fertility| Agriculture| Examination| Education| Catholic| Inf.Mort| |:-----------|---------:|-----------:|-----------:|---------:|--------:|--------:| |Fertility | 1.000| 0.353| -0.646| -0.664| 0.464| 0.417| |Agriculture | 0.353| 1.000| -0.687| -0.640| 0.401| -0.061| |Examination | -0.646| -0.687| 1.000| 0.698| -0.573| -0.114| |Education | -0.664| -0.640| 0.698| 1.000| -0.154| -0.099| |Catholic | 0.464| 0.401| -0.573| -0.154| 1.000| 0.175| |Inf.Mort | 0.417| -0.061| -0.114| -0.099| 0.175| 1.000| ] --- ### Heatmap Heatmap is just the correlation matrix in colors. .pull-left[ <!-- --> ] -- .pull-right[ <!-- --> ] --- class: center middle inverse # Limitations of correlation --- ## Relationship might not be linear Correlation coefficient tells us nothing about the shape of an underlying relationship. --- ### Anscombe's quartet <!-- --> -- This is why you should plot your data! ??? We can see that Pearson's r is the same. But Spearman's rho considers monotonicity. --- ## Correlation does not imply causation The fact that variables are associated does not mean that there is a causal relationship between them. -- In addition to one variable having an effect on another, high correlations are often the result of - a **spurious relationship** or - a **confounding variable**. ??? Perhaps one of the most repeated ideas in teaching statistics. --- ### Spurious correlation  .footnote[Source: Xkcd. Cell phones] --- ### Confounding variable #### Storks deliver babies ( `\(p = 0.008\)` ) It is sometimes said that babies are delivered by storks . A study investigated the association between the number of breeding pairs of storks and birth rate. --- Countries that have more pairs of storks tend to have higher birth rates as well.  .footnote[Matthews R. (2000). Storks Deliver Babies (p= 0.008). Teaching Statistics 22: 36–38. doi:10.1111/1467-9639.00013] ??? We can see that countries that have more storks also have a higher birth rate --- .small[ > The existence of this correlation is confirmed by performing a linear regression of the annual number of births in each country (the final column in table 1) against the number of breeding pairs of white storks (column 3). This leads to a **correlation coeffcient of `\(r = 0.62\)`**, whose statistical significance can be gauged using the standard t-test, where `\(t=r\sqrt{n-2}/\sqrt{1-r^{2}}\)` and `\(n\)` is the sample size. In our case, ** `\(n = 17\)` so that `\(t = 3.06\)`, which for `\((n - 2) = 15\)` degrees of freedom leads to a p-value of `\(0.008\)`**. ] .footnote[Matthews R. (2000). Storks Deliver Babies (p= 0.008). Teaching Statistics 22: 36–38. doi:10.1111/1467-9639.00013] --- > Why would the number of breeding pairs of storks and birth rate be associated? -- .small[ > The most plausible explanation of the observed correlation is, of course, the existence of a **confounding variable: some factor common to both birth rates and the number of breeding pairs of storks** which - like age in the reading skill/shoe-size correlation - can lead to a statistical correlation between two > variables which are not directly linked themselves. **One candidate for a potential confounding variable is land area**: readers are invited to investigate this possibility using the data in table 1. ] .footnote[Matthews R. (2000). Storks Deliver Babies (p= 0.008). Teaching Statistics 22: 36–38. doi:10.1111/1467-9639.00013] --- #### What is a counfounding/intervening variable? .pull-left[ `\(X\)` is correlated to `\(Y\)` , so we might think that `\(X\)` causes `\(Y\)` . <div id="htmlwidget-19646a5eef4bdc2a17c7" style="width:400px;height:200px;" class="grViz html-widget"></div> <script type="application/json" data-for="htmlwidget-19646a5eef4bdc2a17c7">{"x":{"diagram":"digraph {rankdir=LR; node [shape = circle] X -> Y}","config":{"engine":"dot","options":null}},"evals":[],"jsHooks":[]}</script> ] -- .pull-right[ Actually both might be caused by `\(Z\)` , which leads to the correlation between `\(X\)` and `\(Y\)`. <div id="htmlwidget-40d6a2ed3d499b33960e" style="width:400px;height:200px;" class="grViz html-widget"></div> <script type="application/json" data-for="htmlwidget-40d6a2ed3d499b33960e">{"x":{"diagram":"digraph {rankdir=LR; node [shape = circle] X -> Y [dir=none]; Z -> X; Z -> Y}","config":{"engine":"dot","options":null}},"evals":[],"jsHooks":[]}</script> ] --- class: middle  .footnote[Source: Xkcd. Correlation] --- class: center middle inverse # Practical application --- Use the data set `Fatalities`. > Are variables normally distributed? > Are relationships between variables linear? > Which variables are associated to traffic fatalities? --- Use the data set `PSID1976`. > Are variables normally distributed? > Are relationships between variables linear? > Which variables are related to participation in the labor force in 1975? You might need to recode some variables that are not numeric. --- class: inverse ??? More correlation measures. Example data with a lot of variables.