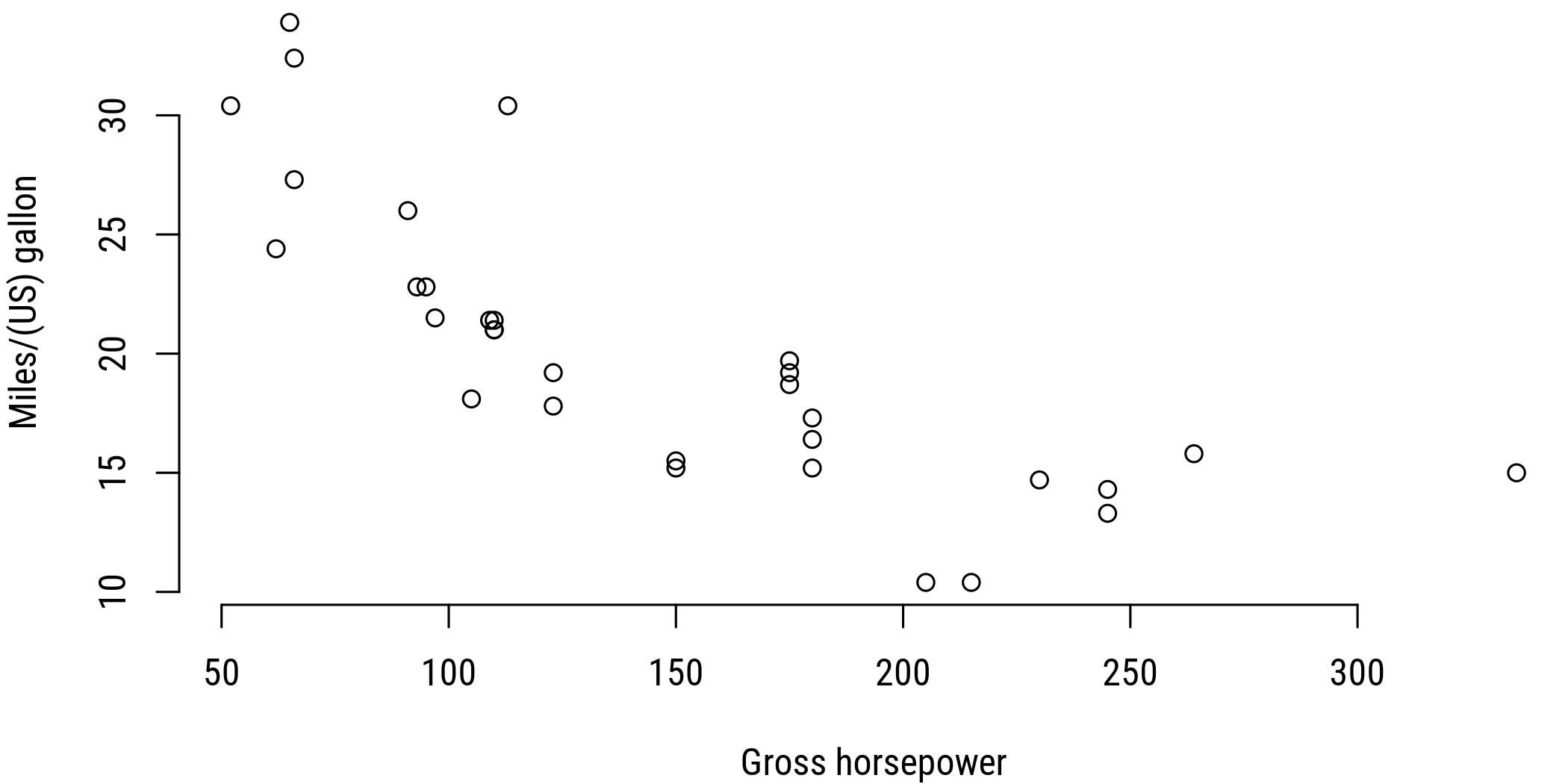

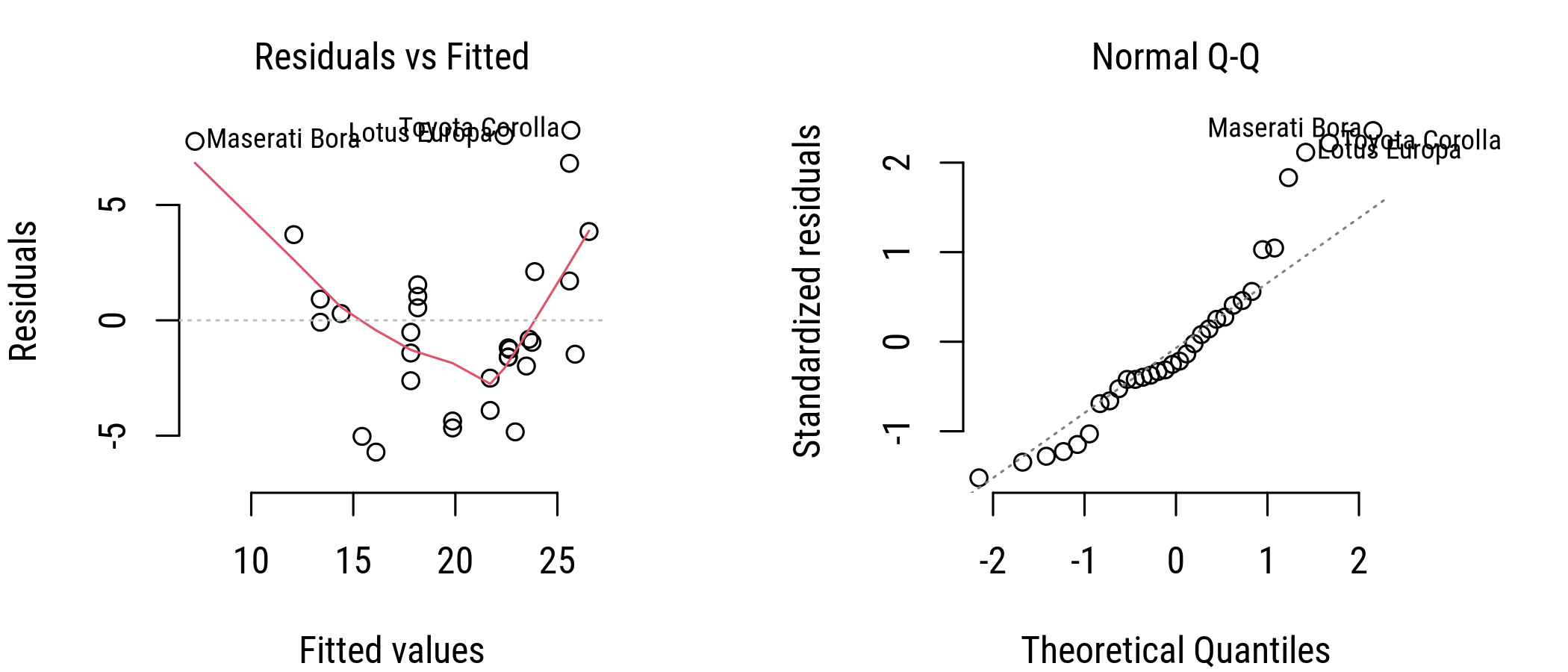

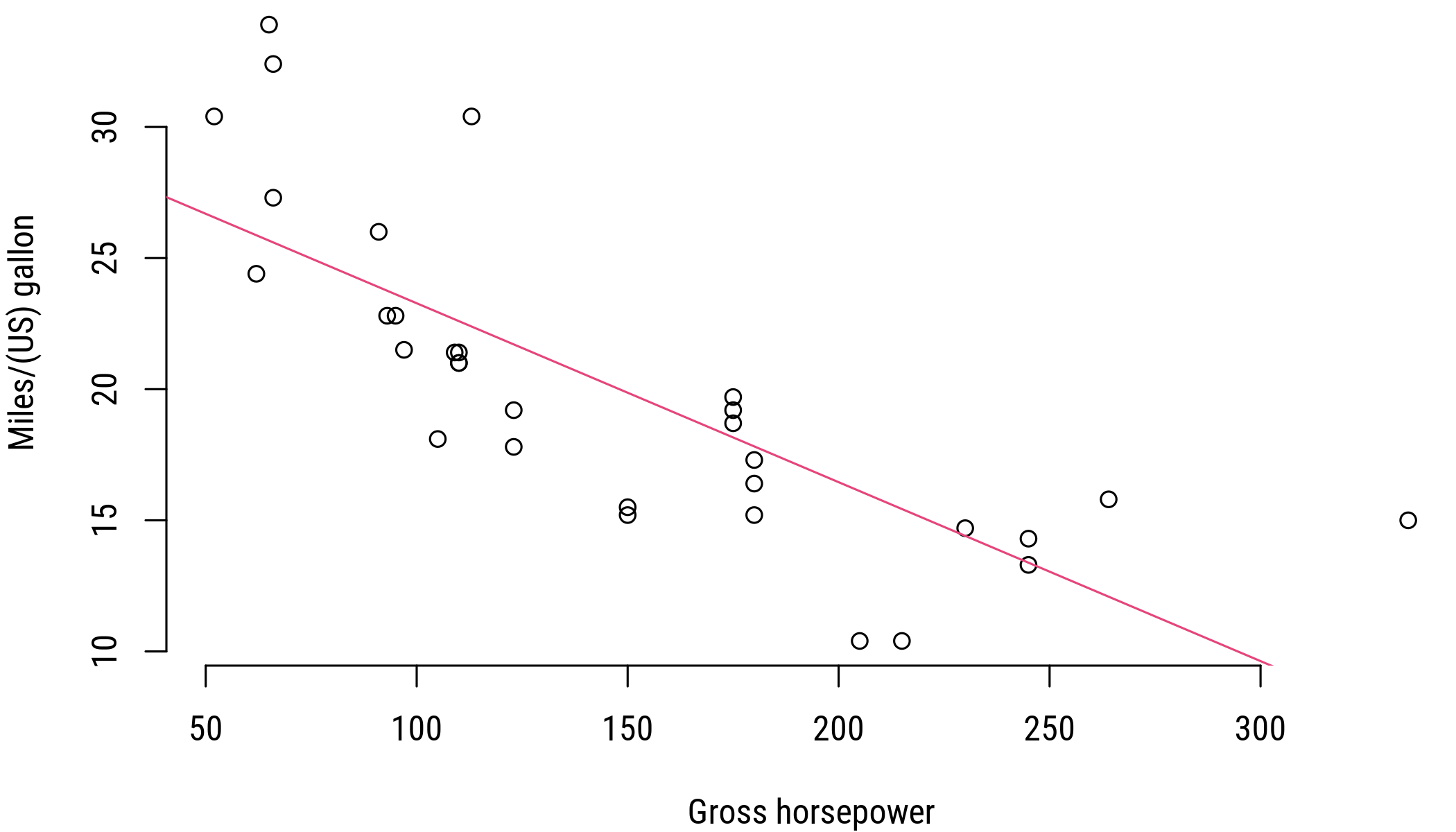

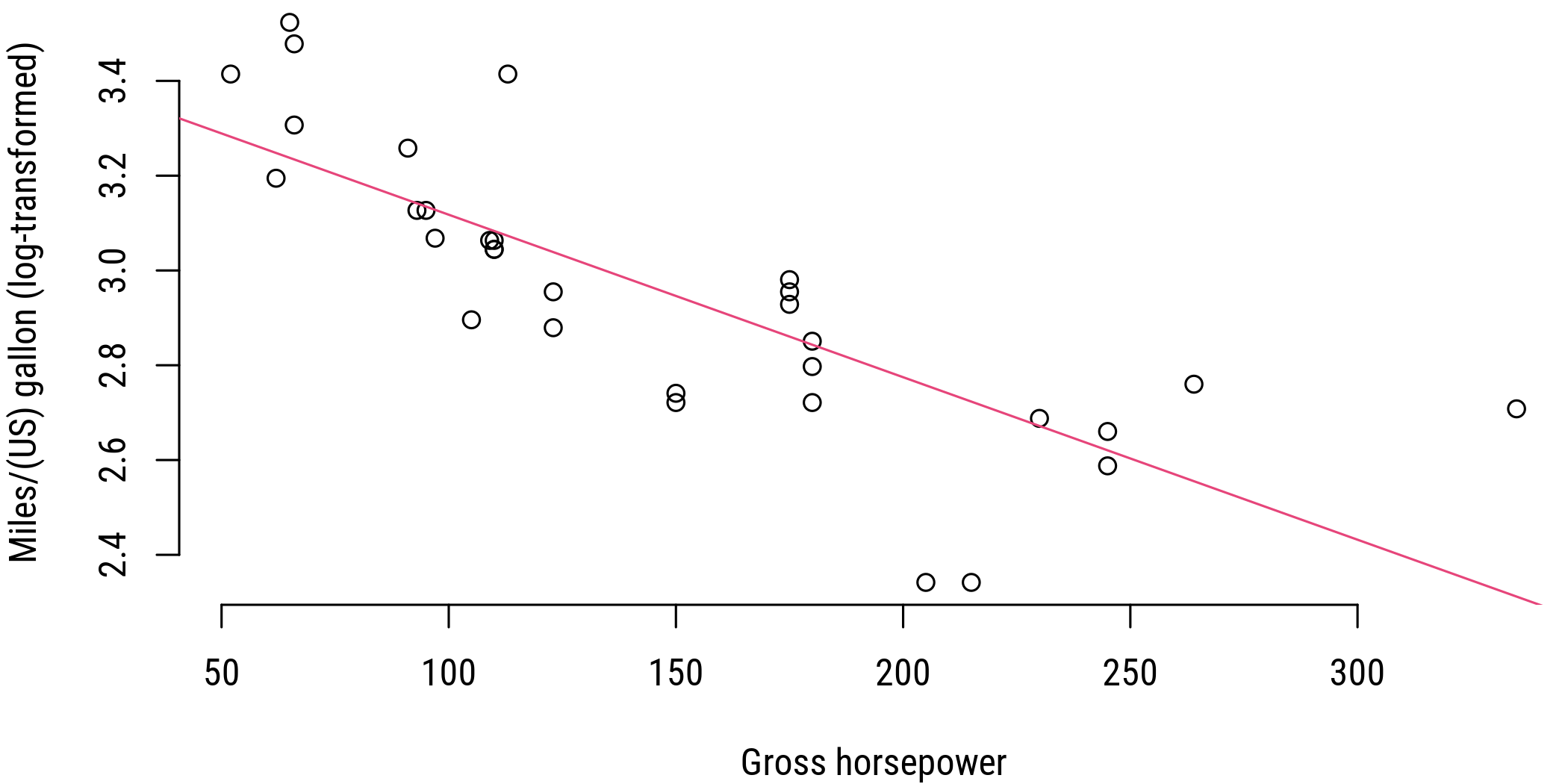

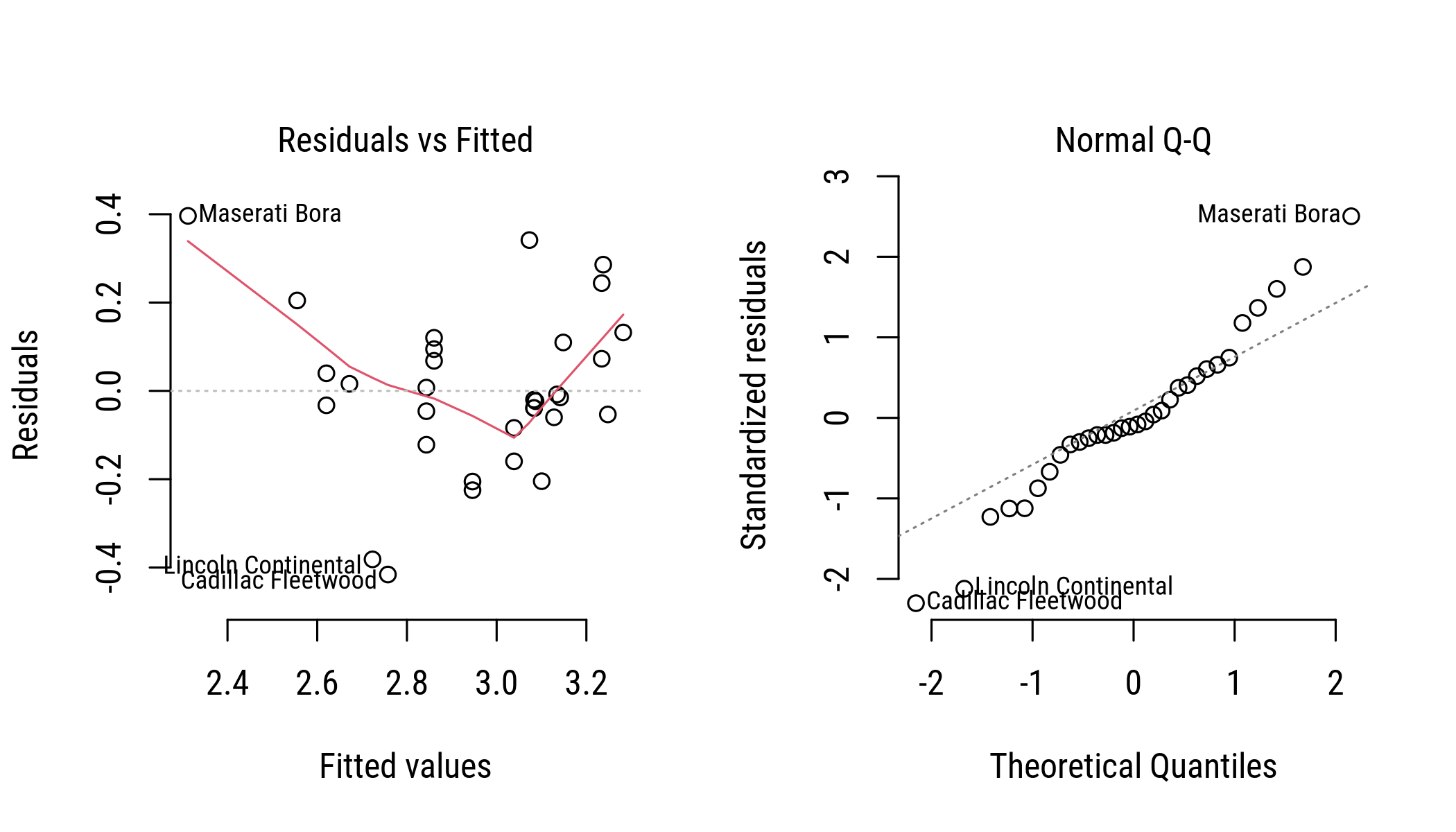

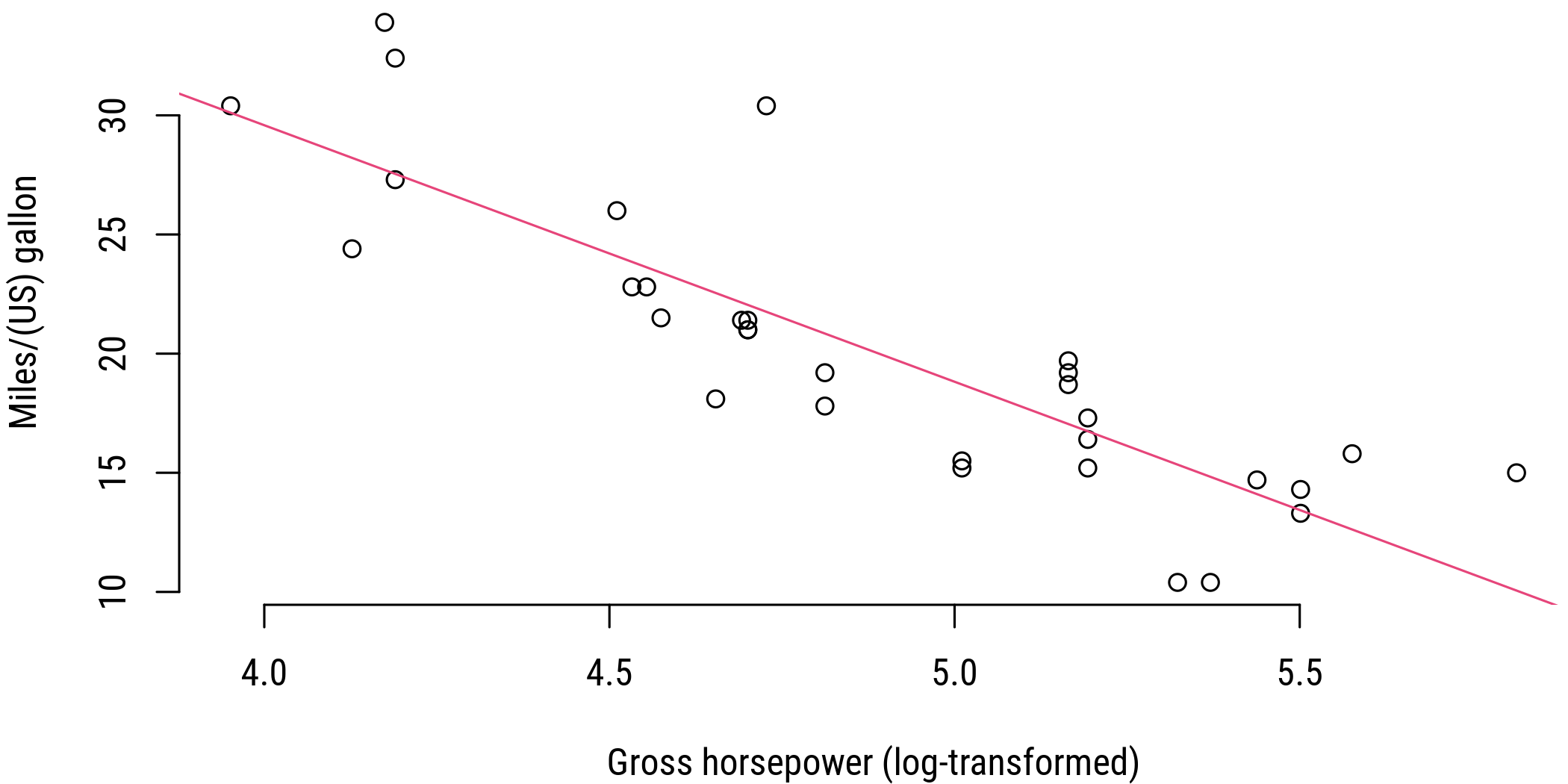

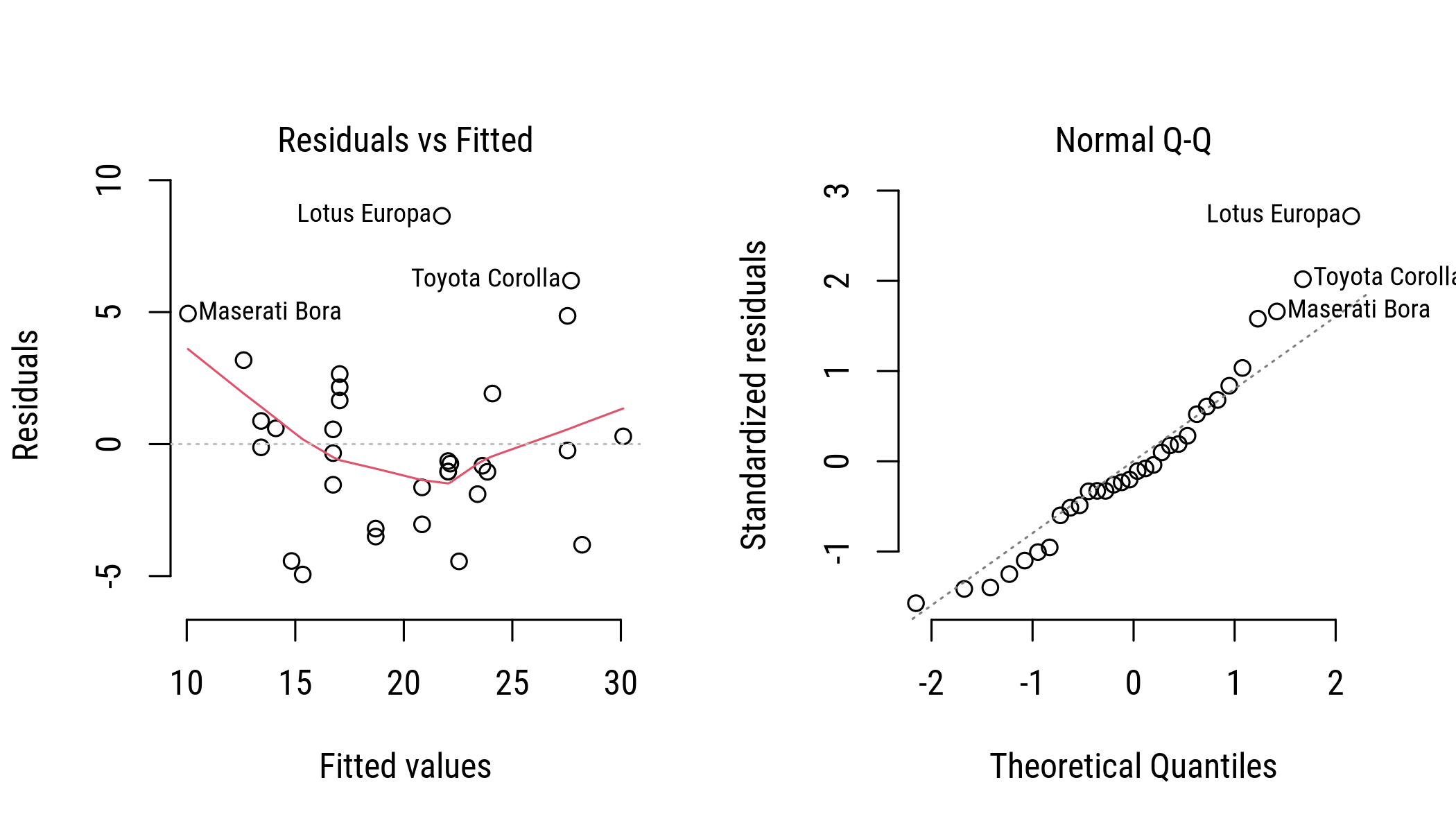

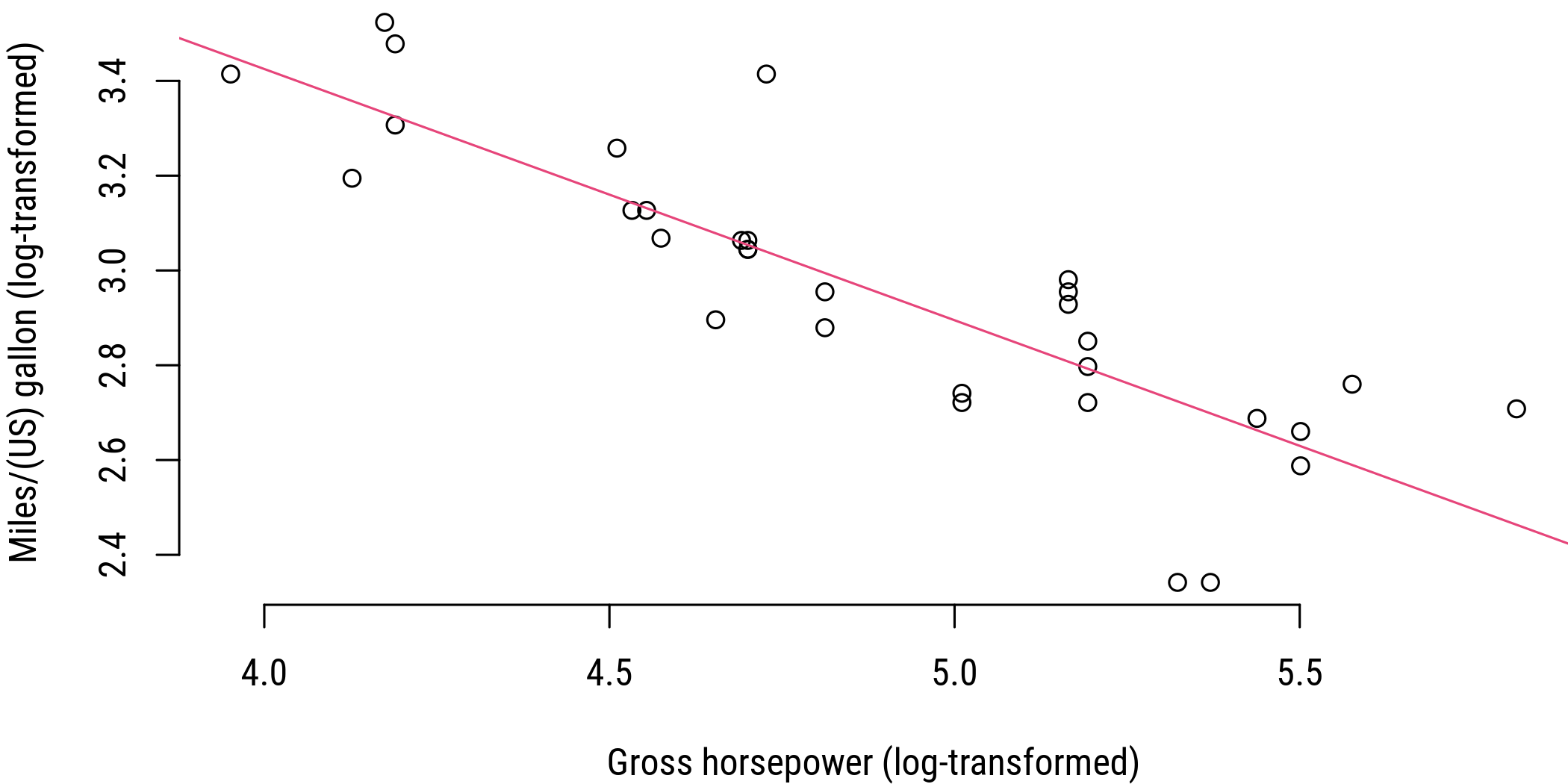

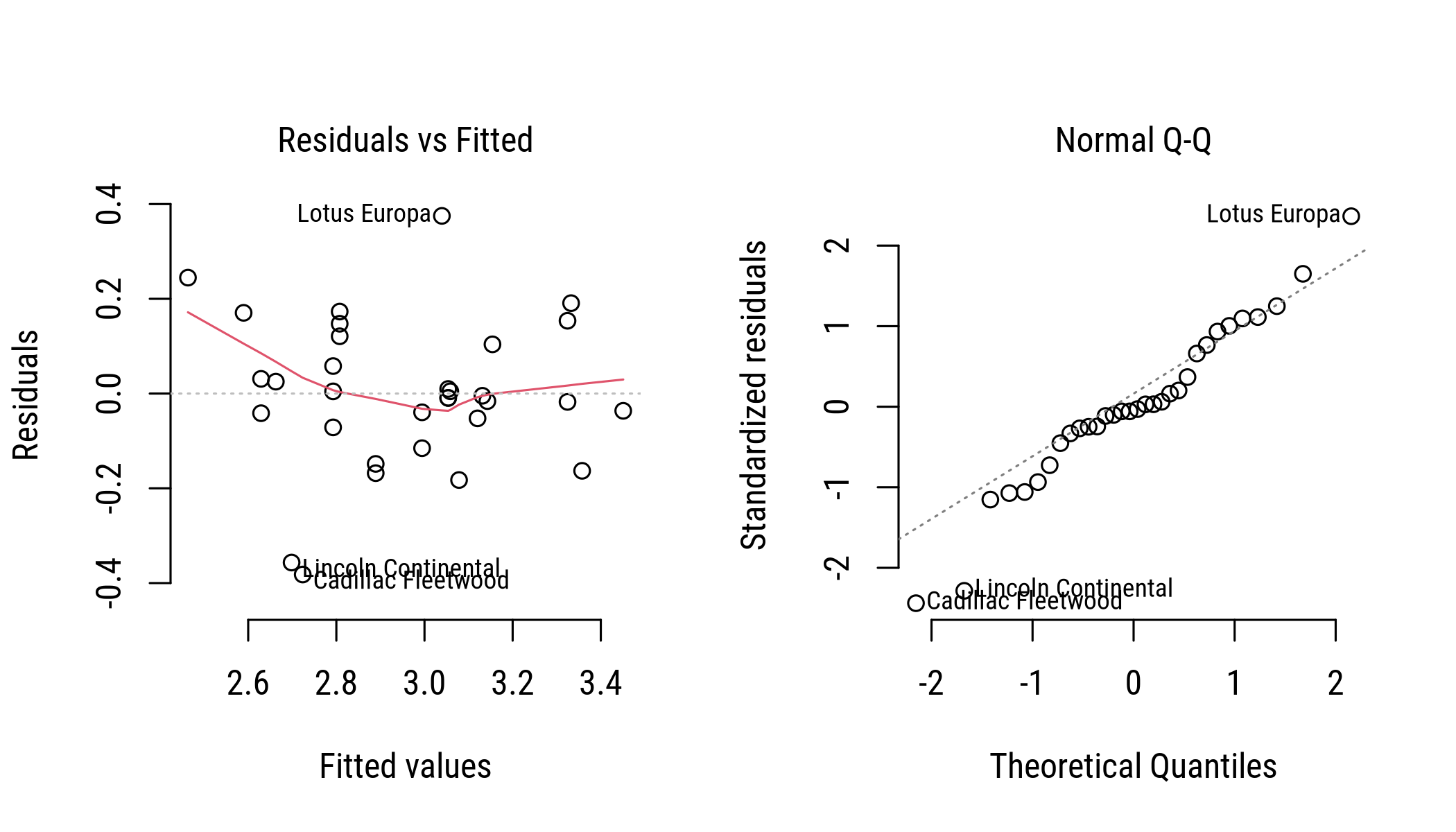

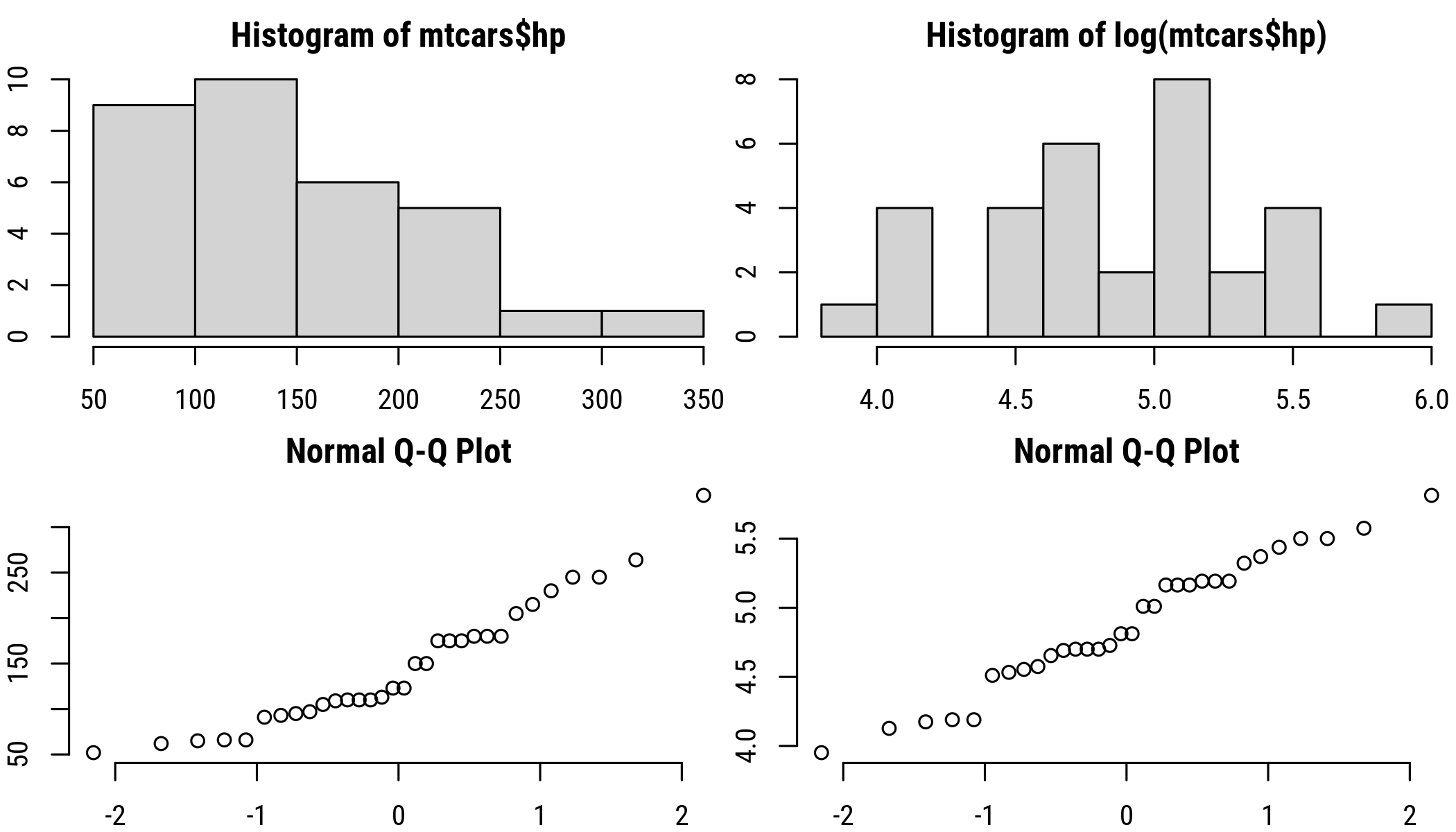

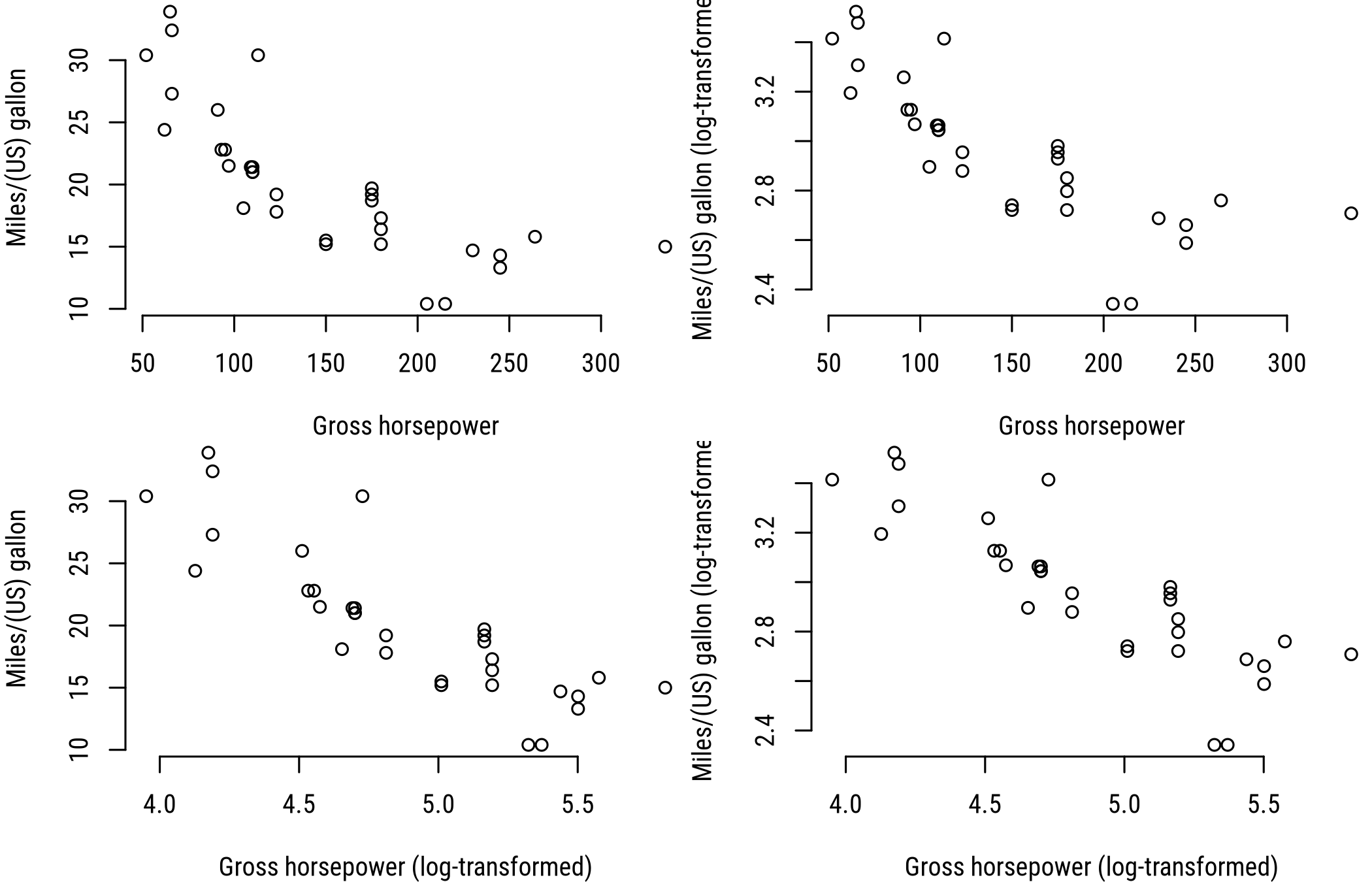

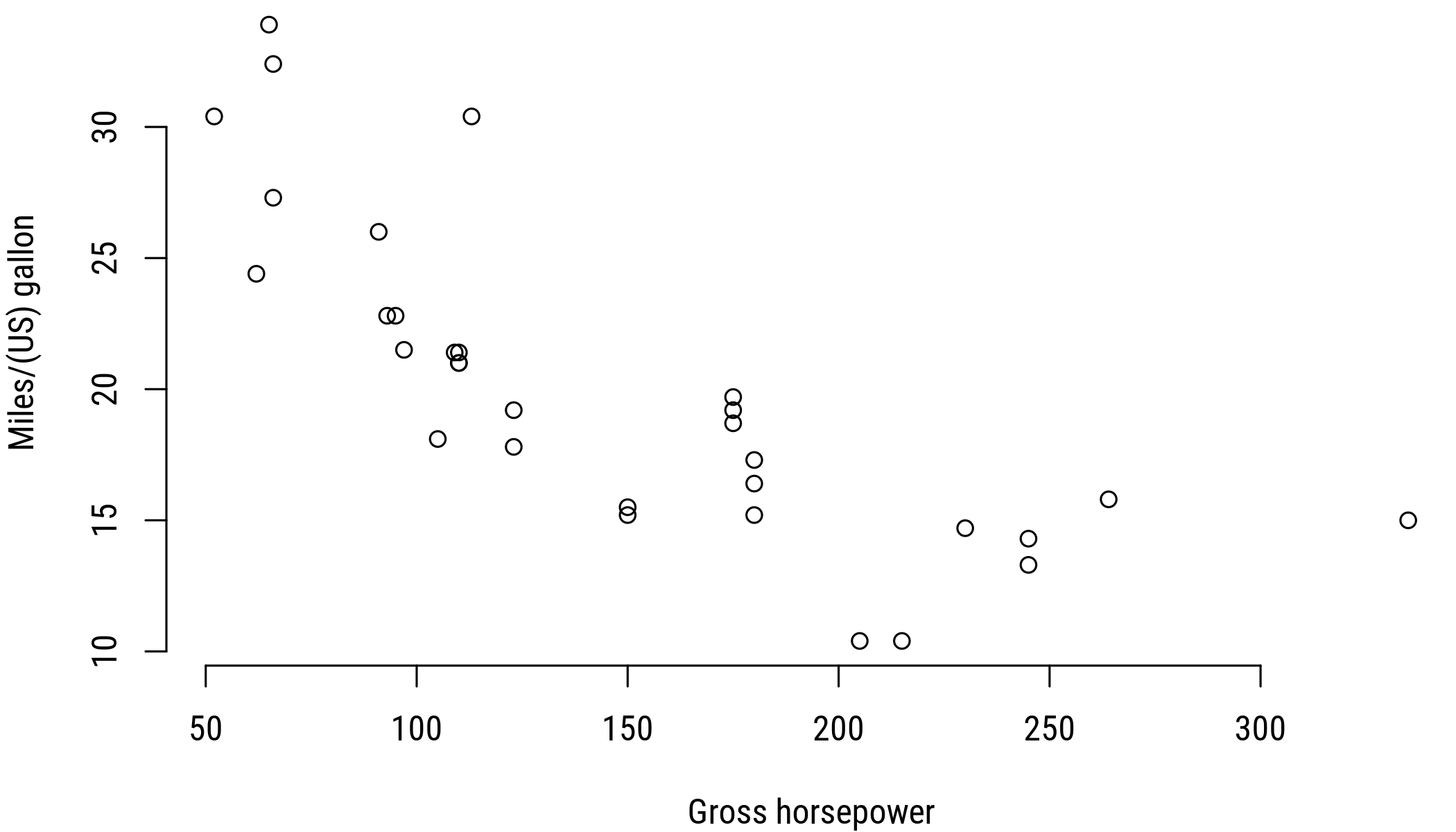

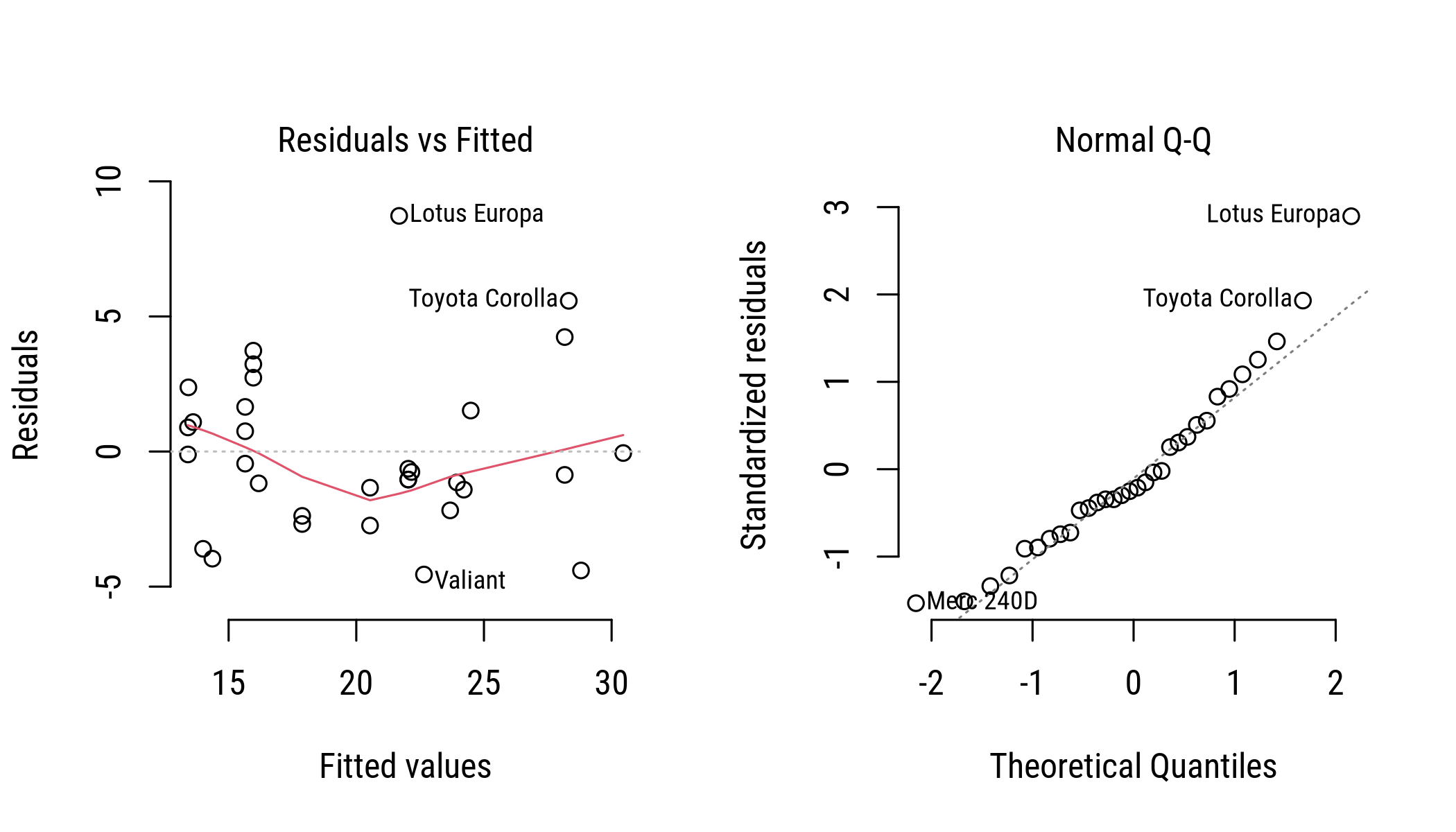

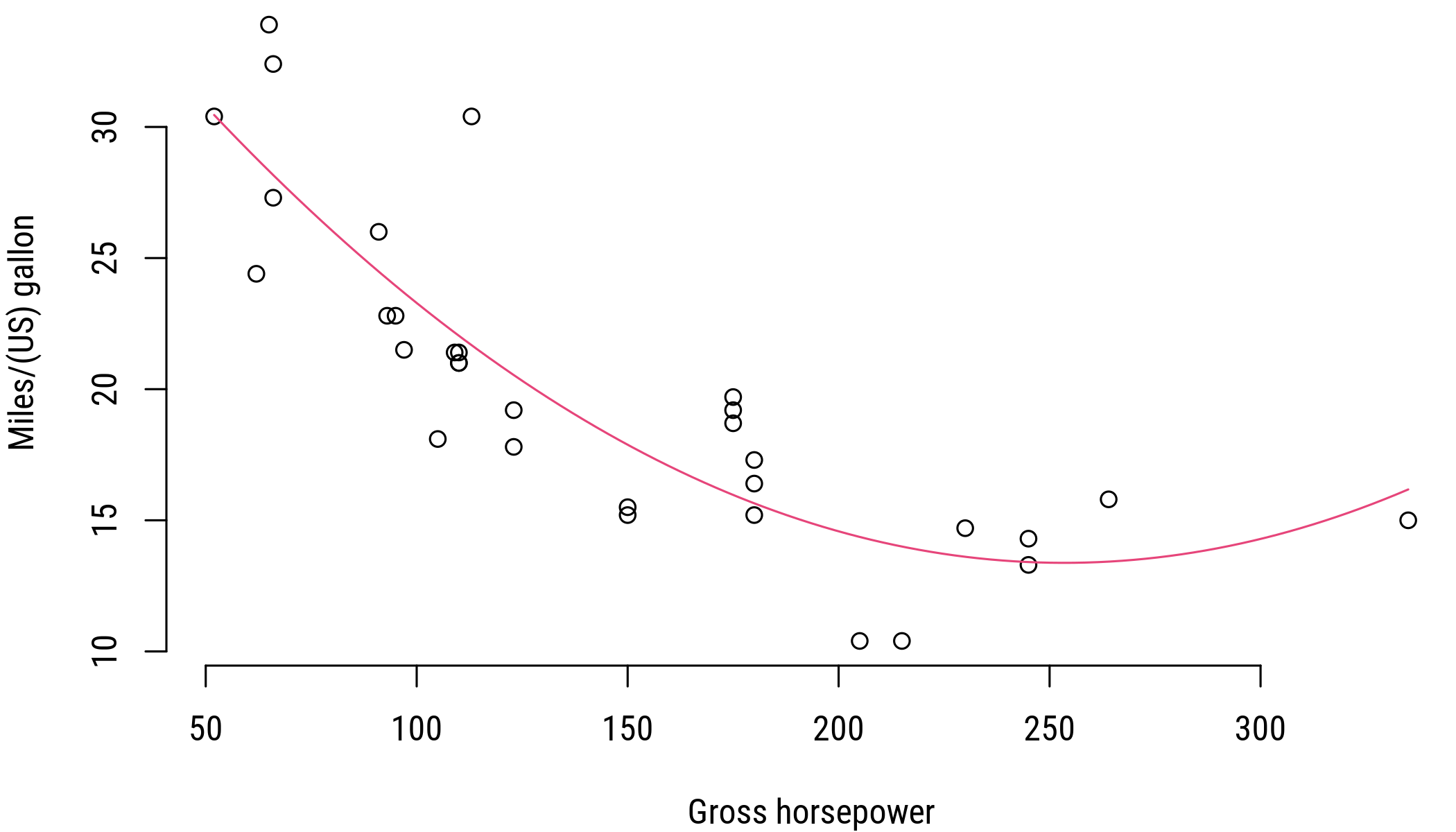

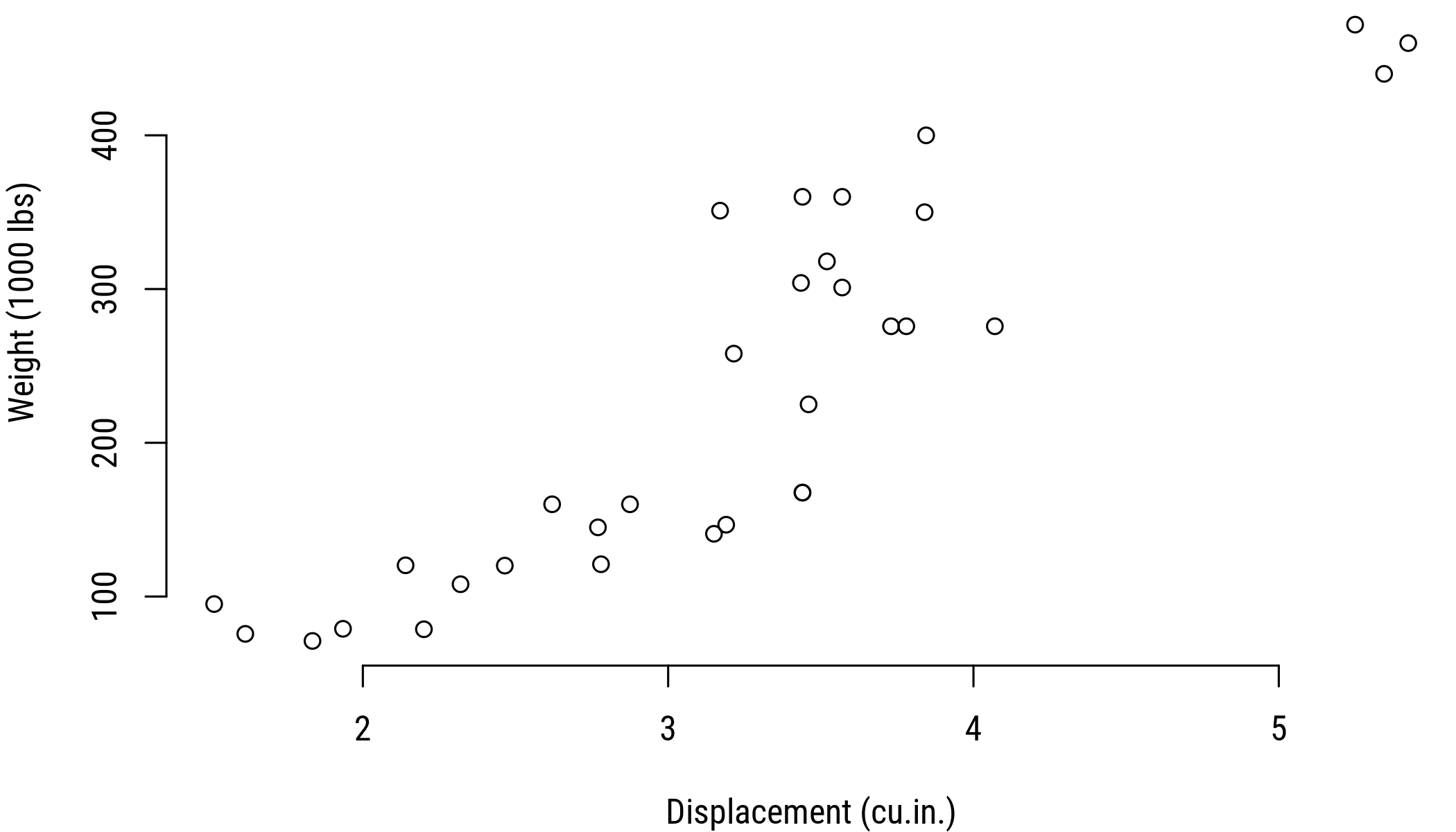

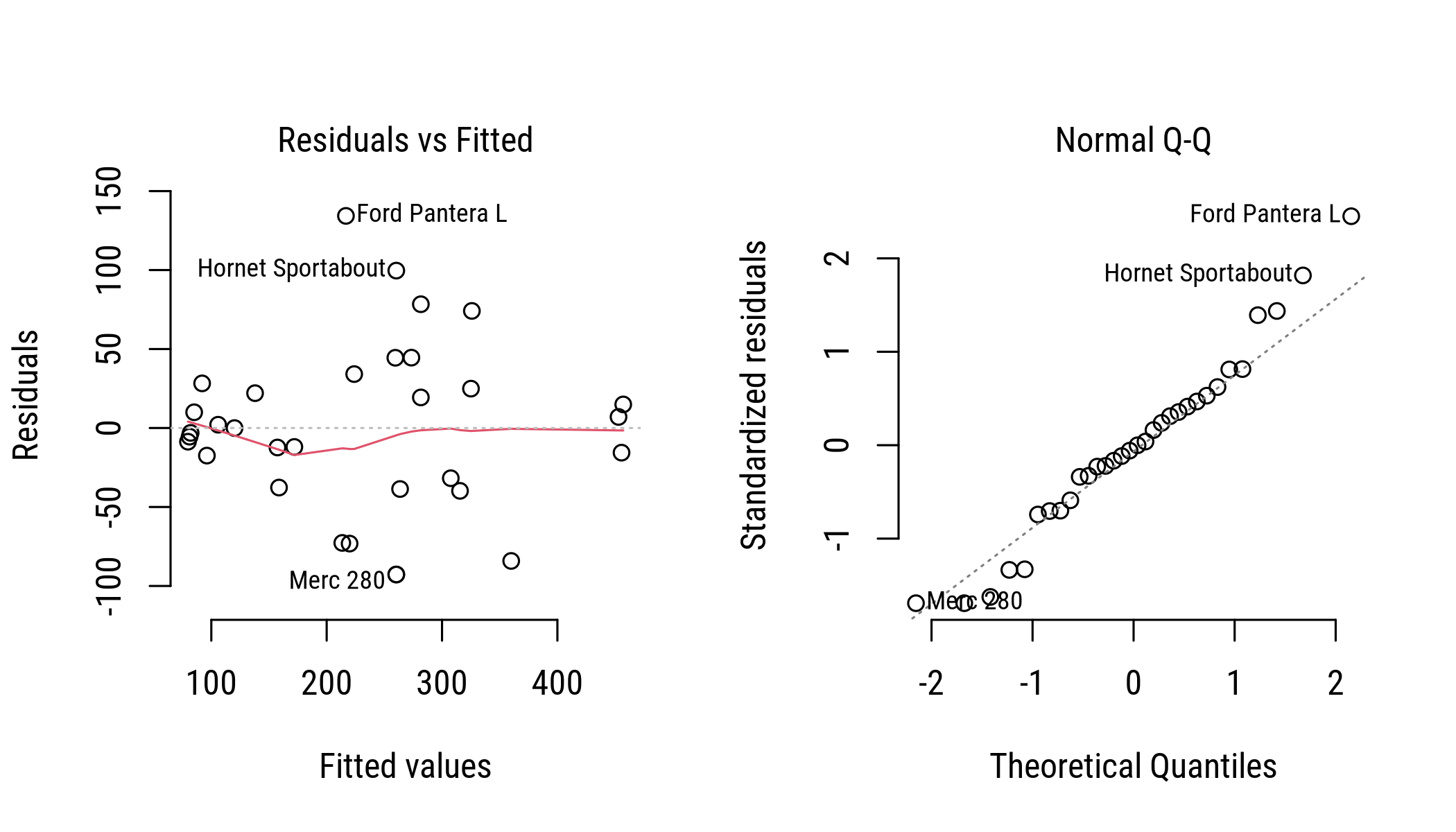

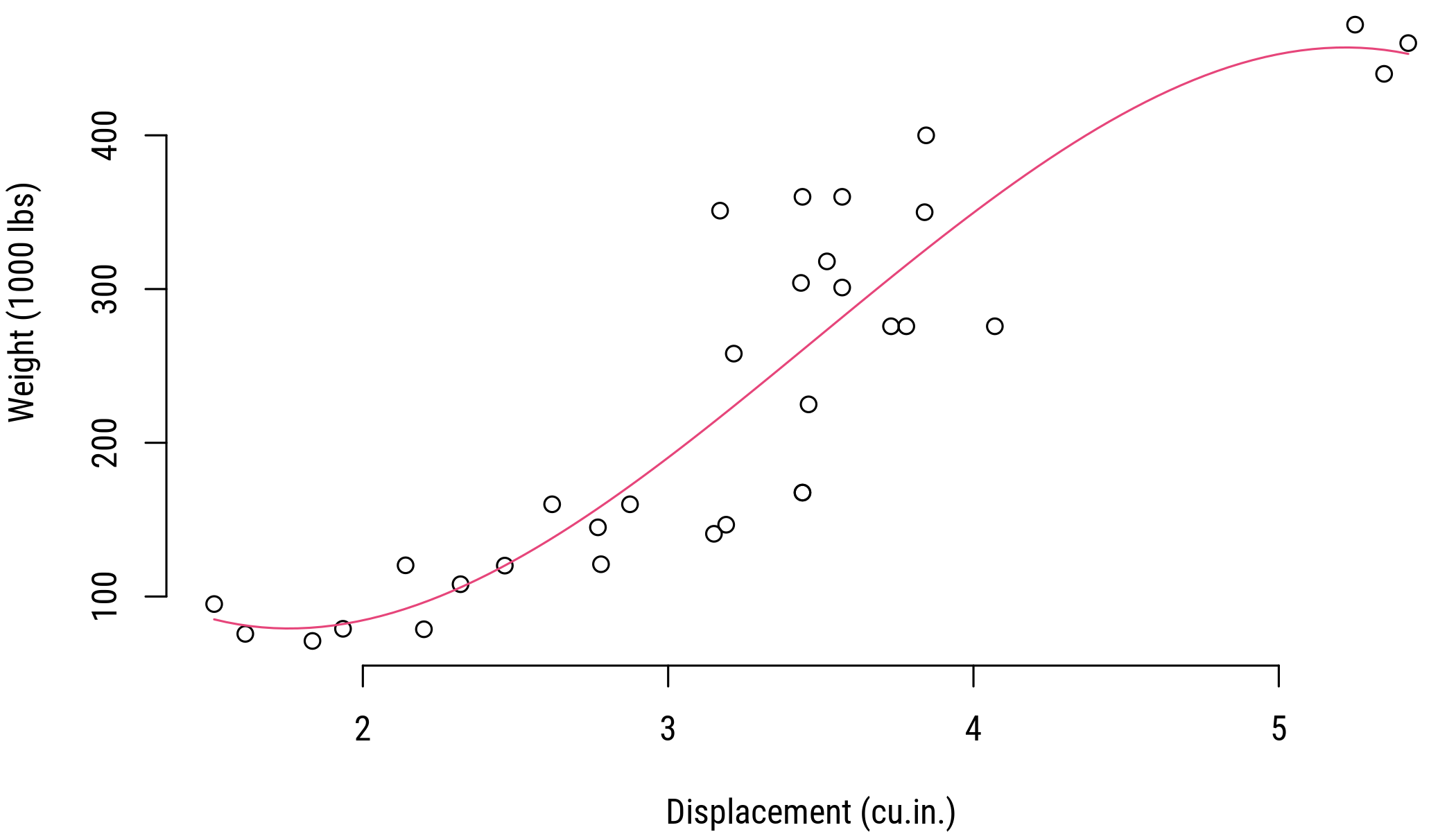

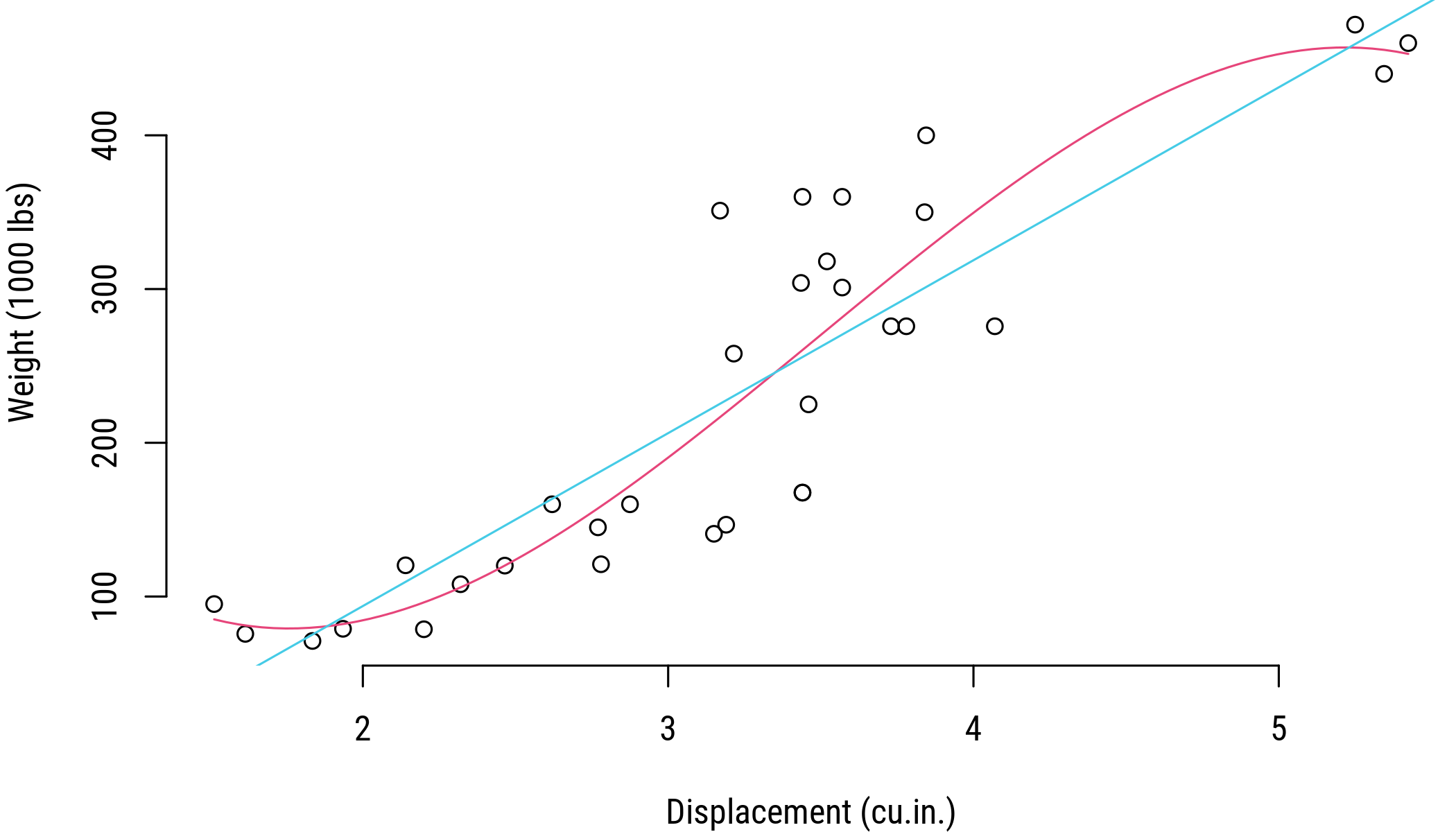

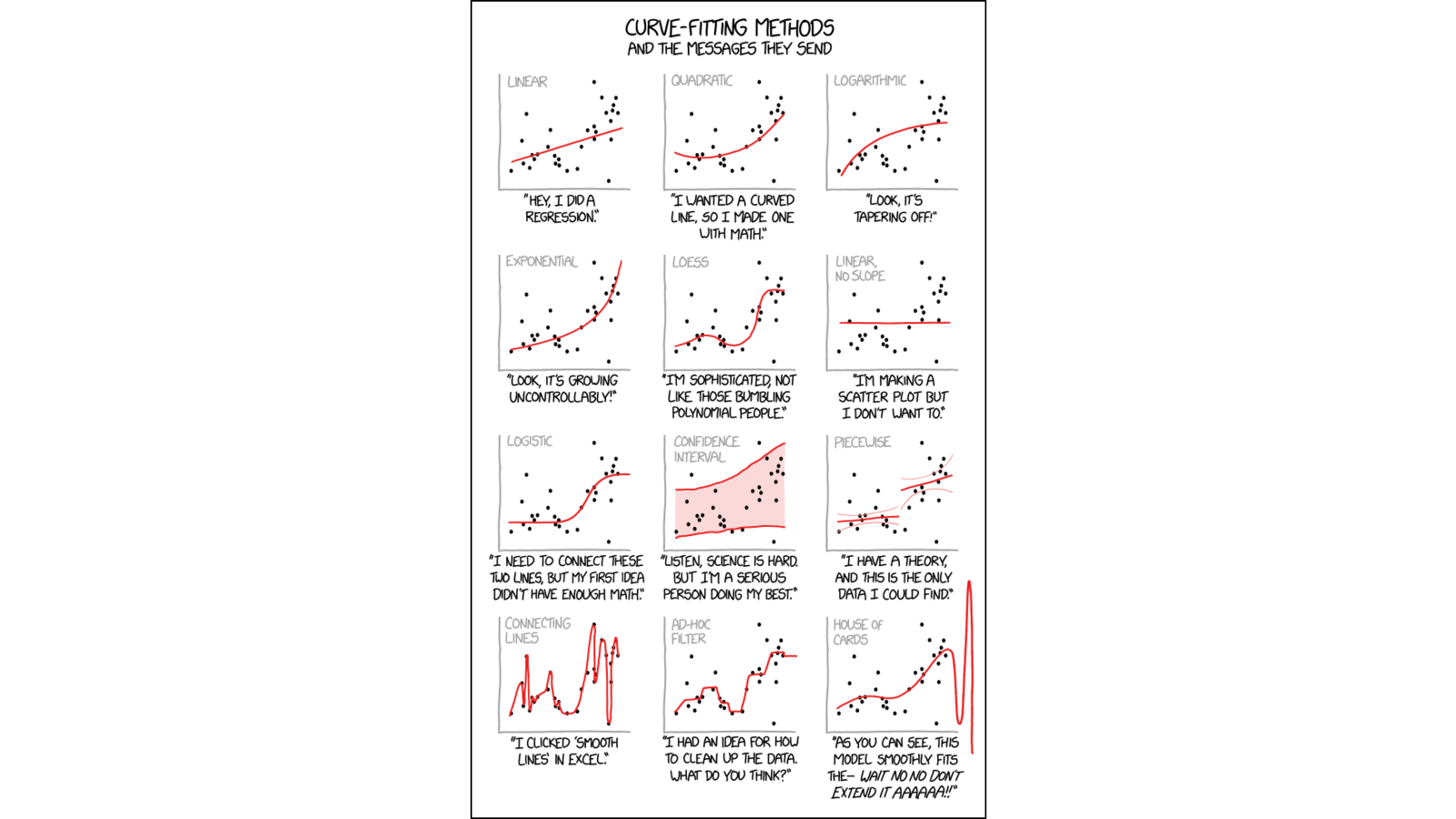

class: center, middle, inverse, title-slide # Data transformations ## Research methods ### Jüri Lillemets ### 2021-12-07 --- class: center middle clean # How to improve the functional form of an OLS model? ??? Curve fitting --- class: center middle inverse # Log-transformations --- For illustration let's use data on "Motor Trend Car Road Tests". ``` ## mpg cyl disp hp drat wt qsec vs am gear carb ## Mazda RX4 21.0 6 160 110 3.90 2.62 16.5 0 1 4 4 ## Mazda RX4 Wag 21.0 6 160 110 3.90 2.88 17.0 0 1 4 4 ## Datsun 710 22.8 4 108 93 3.85 2.32 18.6 1 1 4 1 ## Hornet 4 Drive 21.4 6 258 110 3.08 3.21 19.4 1 0 3 1 ## Hornet Sportabout 18.7 8 360 175 3.15 3.44 17.0 0 0 3 2 ## Valiant 18.1 6 225 105 2.76 3.46 20.2 1 0 3 1 ``` .small[ - `mpg` Miles/(US) gallon - `cyl` Number of cylinders - `disp` Displacement (cu.in.) - `hp` Gross horsepower - `drat` Rear axle ratio - `wt` Weight (1000 lbs) - `qsec` 1/4 mile time - `vs` Engine (0 = V-shaped, 1 = straight) - `am` Transmission (0 = automatic, 1 = manual) - `gear` Number of forward gears - `carb` Number of carburetors ] --- Let's try to predict miles per gallon (`mpg`) from horsepower (`hp`). `$$\text{mpg} = \alpha + \beta \text{hp} + \varepsilon$$` <!-- --> --- Here is the model. ``` ## ## Call: ## lm(formula = Formula, data = mtcars) ## ## Residuals: ## Min 1Q Median 3Q Max ## -5.712 -2.112 -0.885 1.582 8.236 ## ## Coefficients: ## Estimate Std. Error t value Pr(>|t|) ## (Intercept) 30.0989 1.6339 18.42 < 2e-16 *** ## hp -0.0682 0.0101 -6.74 1.8e-07 *** ## --- ## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1 ## ## Residual standard error: 3.86 on 30 degrees of freedom ## Multiple R-squared: 0.602, Adjusted R-squared: 0.589 ## F-statistic: 45.5 on 1 and 30 DF, p-value: 1.79e-07 ``` > Does horsepower have an effect on miles per gallon? --- Let's now see if the assumptions related to normality and constant variance of residuals are fulfilled. <!-- --> -- > Are residuals normally distributed? -- > Is the variance of residals constant? --- What is the problem? <!-- --> --- ## Log-linear model When we use log-transformation on response variable, then we have a log-linear model. `$$ln(y) = \alpha + \beta x + \varepsilon$$` --- `$$\text{ln(mpg)} = \alpha + \beta \text{hp} + \varepsilon$$` ``` ## ## Call: ## lm(formula = Formula, data = mtcars) ## ## Residuals: ## Min 1Q Median 3Q Max ## -0.4158 -0.0658 -0.0174 0.0983 0.3962 ## ## Coefficients: ## Estimate Std. Error t value Pr(>|t|) ## (Intercept) 3.460467 0.078584 44.04 < 2e-16 *** ## hp -0.003429 0.000487 -7.05 7.9e-08 *** ## --- ## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1 ## ## Residual standard error: 0.186 on 30 degrees of freedom ## Multiple R-squared: 0.623, Adjusted R-squared: 0.611 ## F-statistic: 49.6 on 1 and 30 DF, p-value: 7.85e-08 ``` --- <!-- --> -- `\(ln(mpg_{i}) = 3.46 + -0.003 * hp_{i} + \varepsilon_{i}\)` When `hp` increases by 1 unit, `mpg` increases by `\(-0.003 \times 100 = -0.343\)` percent. --- What about assumptions? <!-- --> --- ## Linear-log When one or more predictors are used in logged form, then the model is referred to as a linear-log model: `$$y = \alpha + \beta ln(x) + \varepsilon$$` --- `$$\text{mpg} = \alpha + \beta \text{ln(hp)} + \varepsilon$$` ``` ## ## Call: ## lm(formula = Formula, data = mtcars) ## ## Residuals: ## Min 1Q Median 3Q Max ## -4.943 -1.705 -0.493 1.719 8.646 ## ## Coefficients: ## Estimate Std. Error t value Pr(>|t|) ## (Intercept) 72.64 6.00 12.10 4.6e-13 *** ## log(hp) -10.76 1.22 -8.79 8.4e-10 *** ## --- ## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1 ## ## Residual standard error: 3.24 on 30 degrees of freedom ## Multiple R-squared: 0.72, Adjusted R-squared: 0.711 ## F-statistic: 77.3 on 1 and 30 DF, p-value: 8.39e-10 ``` --- <!-- --> -- `\(mpg_{i} = 72.64 + -10.764 * ln(hp_{i}) + \varepsilon_{i}\)` When `hp` increases by 1 per cent, `mpg` increases by `\(-10.764 / 100 = -0.108\)` units. --- How well the model fits data now? <!-- --> --- ## Log-log When either of the previous transformations have not improved the fit of a linear model, we can also transform both response and predictor(s) as follows: `$$ln(y) = \alpha + \beta ln(x) + \varepsilon$$` --- `$$\text{ln(mpg)} = \alpha + \beta \text{ln(hp)} + \varepsilon$$` ``` ## ## Call: ## lm(formula = Formula, data = mtcars) ## ## Residuals: ## Min 1Q Median 3Q Max ## -0.3819 -0.0571 -0.0069 0.1082 0.3750 ## ## Coefficients: ## Estimate Std. Error t value Pr(>|t|) ## (Intercept) 5.545 0.299 18.54 < 2e-16 *** ## log(hp) -0.530 0.061 -8.69 1.1e-09 *** ## --- ## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1 ## ## Residual standard error: 0.161 on 30 degrees of freedom ## Multiple R-squared: 0.716, Adjusted R-squared: 0.706 ## F-statistic: 75.5 on 1 and 30 DF, p-value: 1.08e-09 ``` --- <!-- --> `\(ln(mpg_{i}) = 5.545 + -0.53 * ln(hp_{i}) + \varepsilon_{i}\)` When `hp` increases by 1 per cent, `mpg` increases by **-0.53 percent**. --- Are the assumptions still satisfied? <!-- --> --- Why did transforming the predictor (`hp`) improve model fit? <!-- --> --- We should apply log-transformatins to variables that are that are log-normally distributed. <!-- --> --- class: center middle inverse # Polynomials --- Introducing polynomials to a linear regression means including additional predictor(s) at power of `\(h\)`: `\(y = \alpha + \beta_{1} x_1 + ... + \beta_{h} x_1^h + \varepsilon,\)` where `\(h\)` is called the degree of polynomial. For a quadratic relationship `\(k = 2\)` and for a cubic relationship `\(k = 3\)`. --- Let's try to solve the problem now with polynomial regression. <!-- --> -- There seems to be a quadratic relationship. --- `$$\text{mpg} = \alpha + \beta_1 \text{hp} + \beta_2 \text{hp}^2+ \varepsilon$$` ``` ## ## Call: ## lm(formula = Formula, data = mtcars) ## ## Residuals: ## Min 1Q Median 3Q Max ## -4.551 -1.603 -0.698 1.551 8.721 ## ## Coefficients: ## Estimate Std. Error t value Pr(>|t|) ## (Intercept) 4.04e+01 2.74e+00 14.74 5.2e-15 *** ## hp -2.13e-01 3.49e-02 -6.11 1.2e-06 *** ## I(hp^2) 4.21e-04 9.84e-05 4.27 0.00019 *** ## --- ## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1 ## ## Residual standard error: 3.08 on 29 degrees of freedom ## Multiple R-squared: 0.756, Adjusted R-squared: 0.739 ## F-statistic: 45 on 2 and 29 DF, p-value: 1.3e-09 ``` --- Interpretation: `$$\text{mpg} = 40.409 + -0.213 \text{hp} + 4.208\times 10^{-4} \text{hp}^2 + \varepsilon$$` We can't really interpret the coefficients for `\(hp\)` and `\(hp^2\)` anymore. There is a trade off between description and prediction, bias and variance. --- Diagnostics <!-- --> --- Why is the fit that good? <!-- --> ??? Still linear in parameters! --- What if the relationship is more complex? What if a regression line with two curves seems to fit best? <!-- --> --- Let's try to fit a 3rd degree polynomial, i.e. model the relationship as cubic. `$$\text{disp} = \alpha + \beta_1 \text{wt} + \beta_2 \text{wt}^2 + \beta_3 \text{wt}^3 + \varepsilon$$` ``` ## ## Call: ## lm(formula = Formula, data = mtcars) ## ## Residuals: ## Min 1Q Median 3Q Max ## -92.75 -33.27 -1.57 25.75 134.30 ## ## Coefficients: ## Estimate Std. Error t value Pr(>|t|) ## (Intercept) 470.66 325.38 1.45 0.159 ## wt -501.79 322.86 -1.55 0.131 ## I(wt^2) 190.84 98.97 1.93 0.064 . ## I(wt^3) -18.24 9.41 -1.94 0.063 . ## --- ## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1 ## ## Residual standard error: 56.3 on 28 degrees of freedom ## Multiple R-squared: 0.814, Adjusted R-squared: 0.794 ## F-statistic: 40.7 on 3 and 28 DF, p-value: 2.42e-10 ``` --- Diagnostics <!-- --> --- <!-- --> --- Is the polynomial model statistically significantly better than a model without polynomials? <!-- --> --- ### Ramsey RESET test We can test if the difference of residuals in two models is statistically significant or not. If there is no difference, we should prefer the simpler model. ``` ## Analysis of Variance Table ## ## Model 1: disp ~ wt ## Model 2: disp ~ wt + I(wt^2) + I(wt^3) ## Res.Df RSS Df Sum of Sq F Pr(>F) ## 1 30 100709 ## 2 28 88788 2 11921 1.88 0.17 ``` `\(H_0: RSS_1 = RSS_2\)` `\(H_1: RSS_1 \neq RSS_2.\)` -- > Should we prefer the model with polynomials? ---  .footnote[Xkcd. Curve fitting] --- class: center middle inverse # Practical application --- Use the data set `Cigarette`. Estimate number of packs per capita smoked (`packpc`) using average excise taxes (`taxs`) as a predictor. > Are the assumptions of normality and equality of residuals satisfied? -- > Which variable(s) could be logged to improve the normality of residuals? Explore the distribution of variables. -- > Could we use polynomials? -- > Do higher taxes have an effect on smoking? What is the effect? --- class: inverse