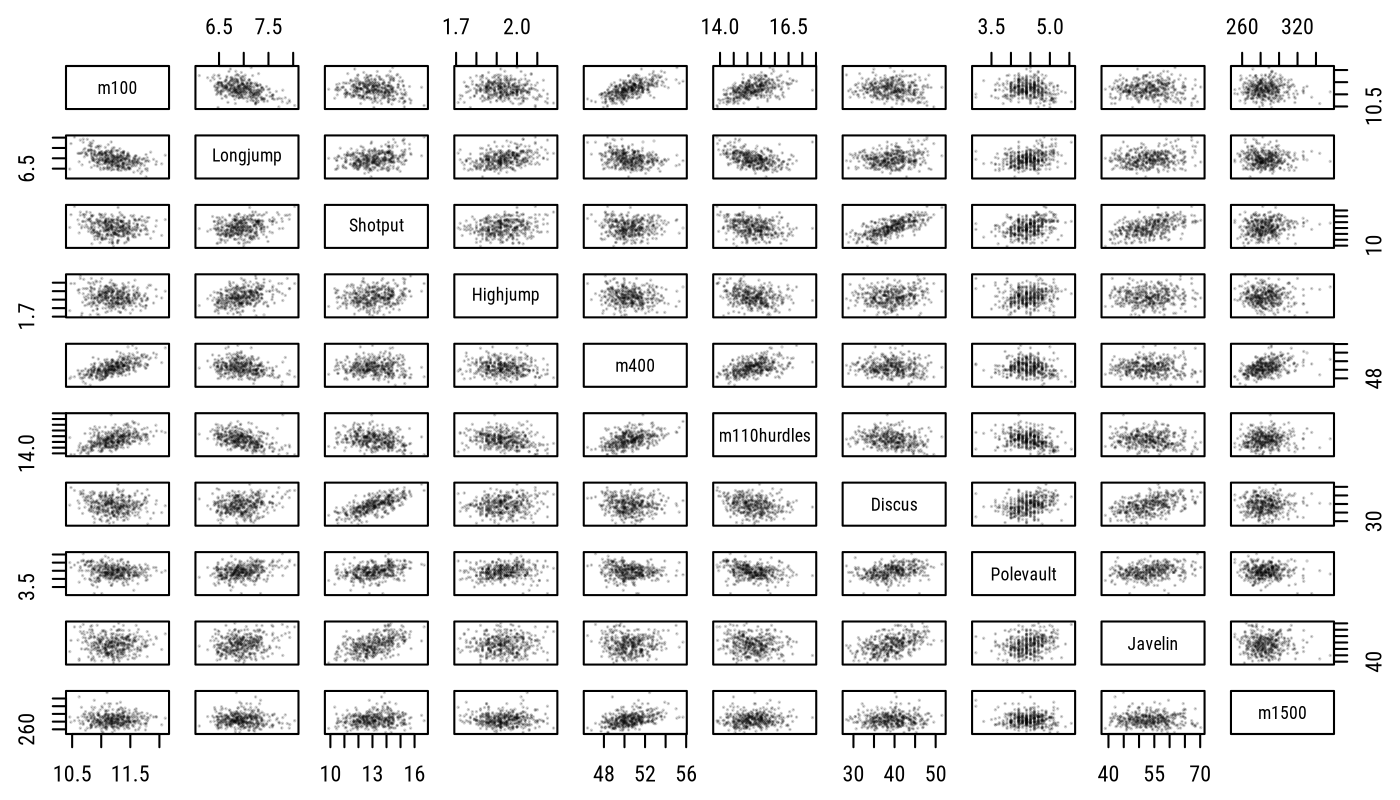

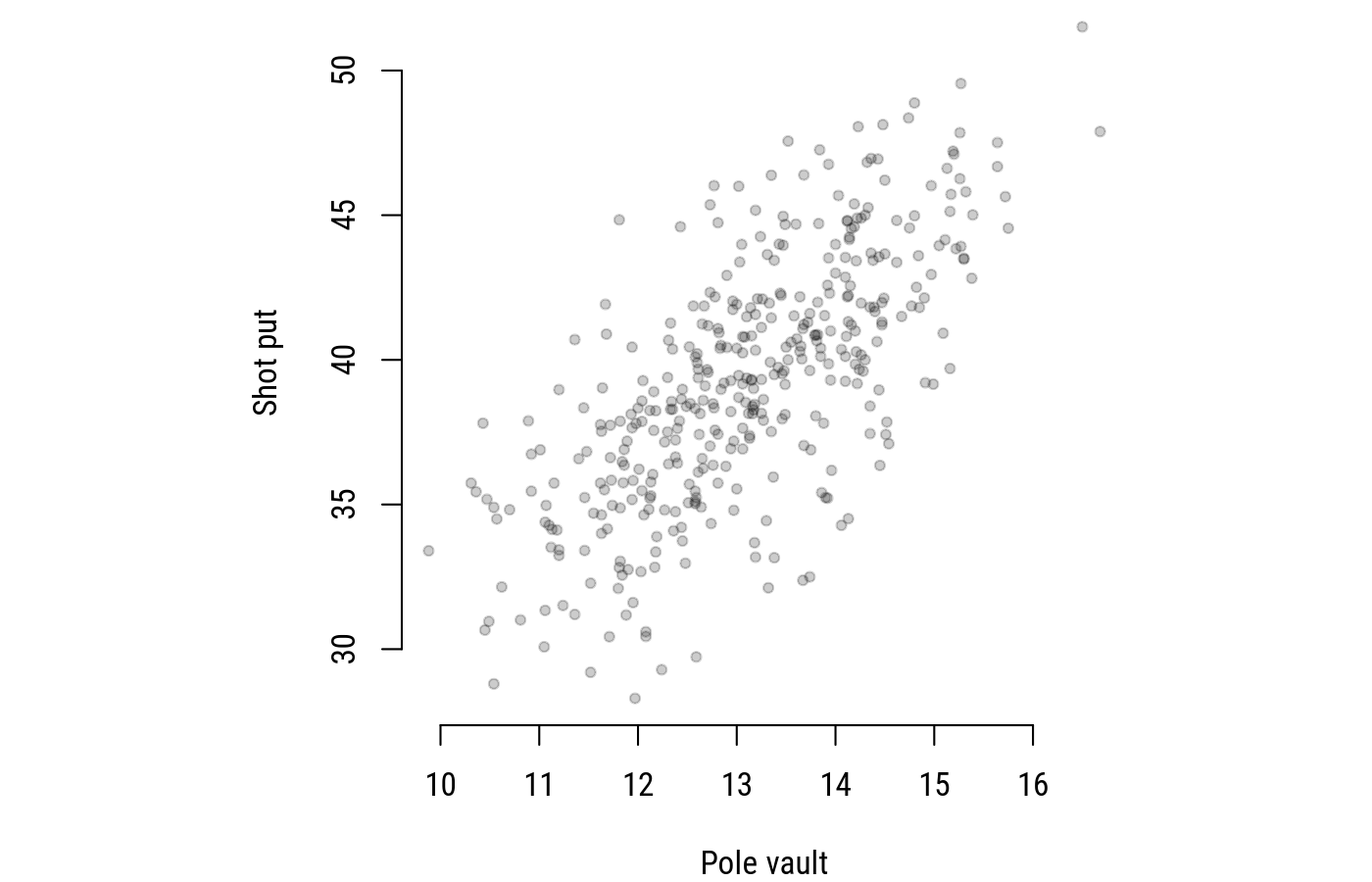

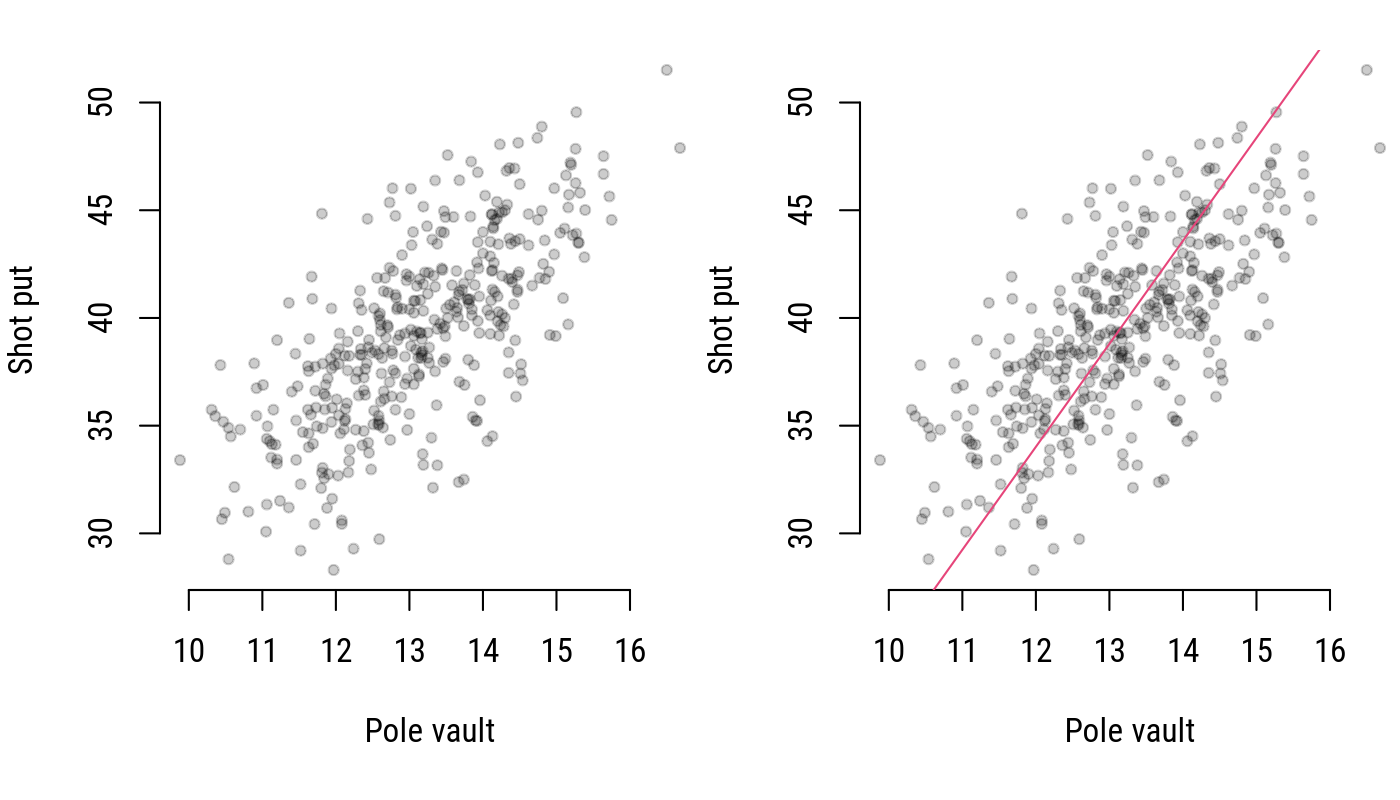

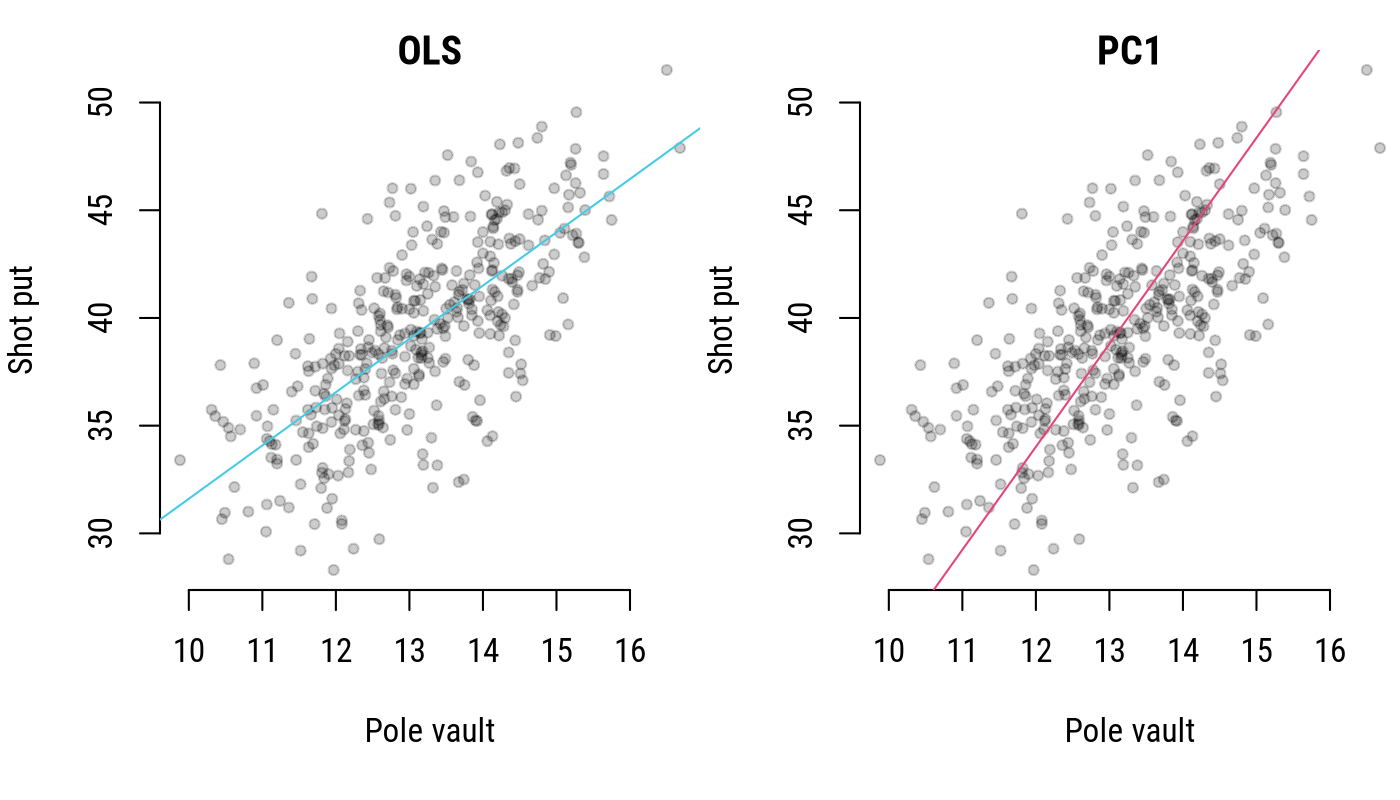

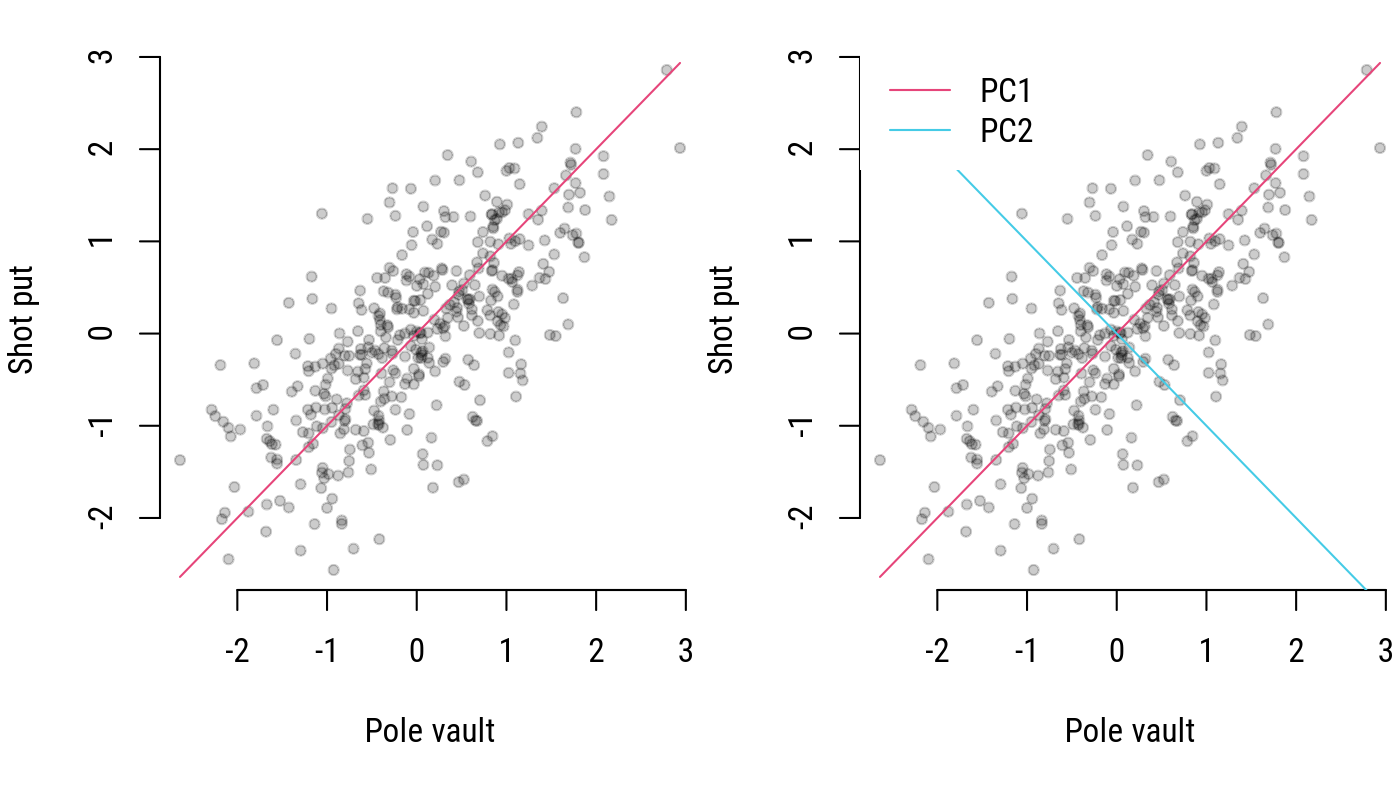

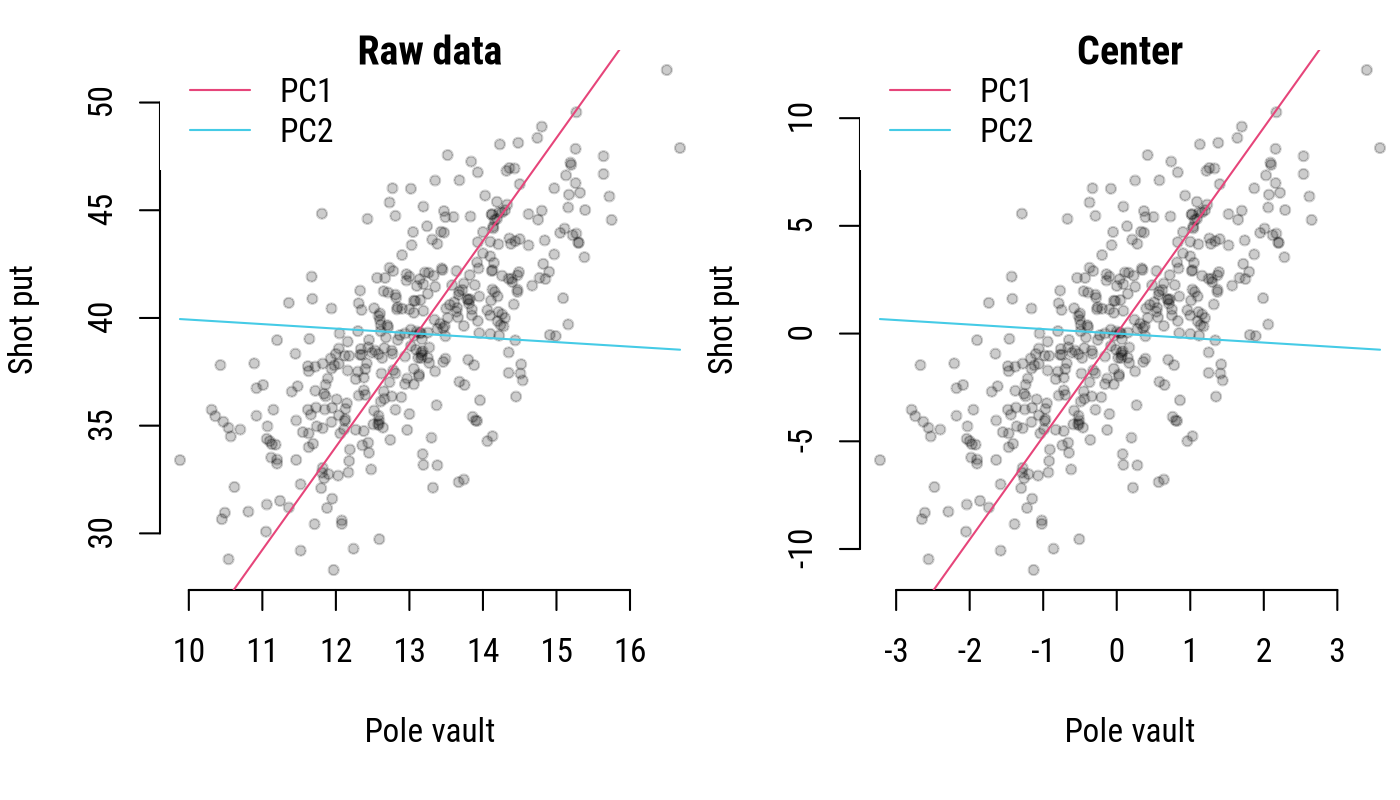

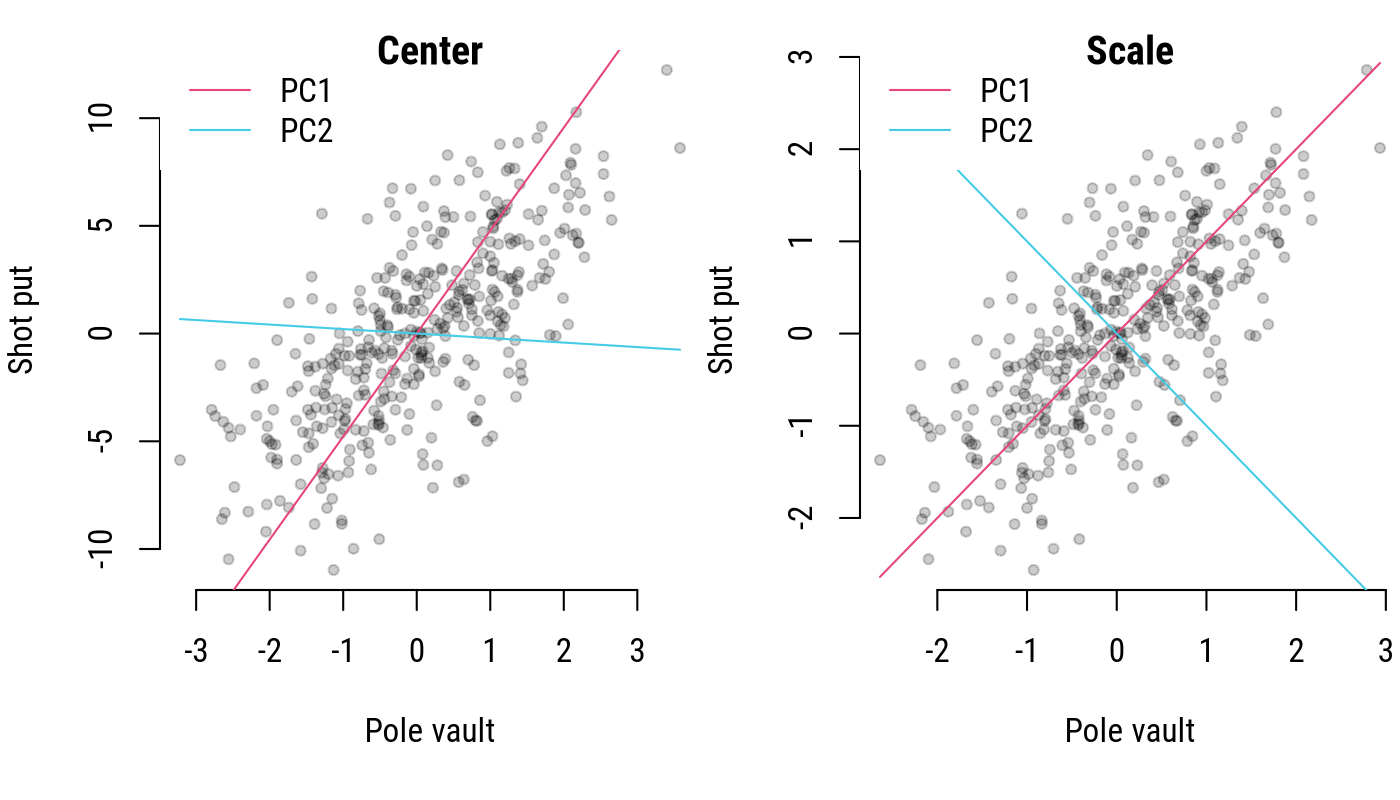

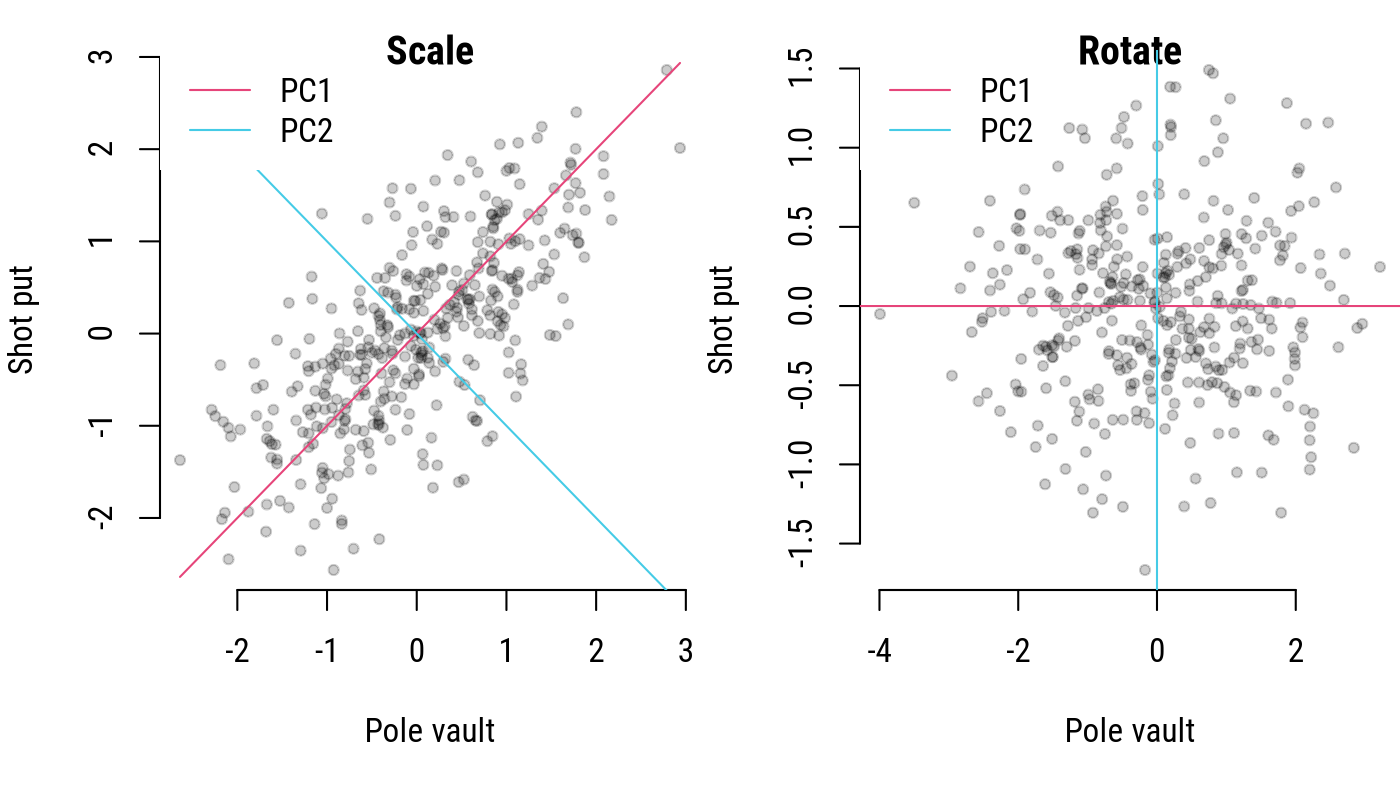

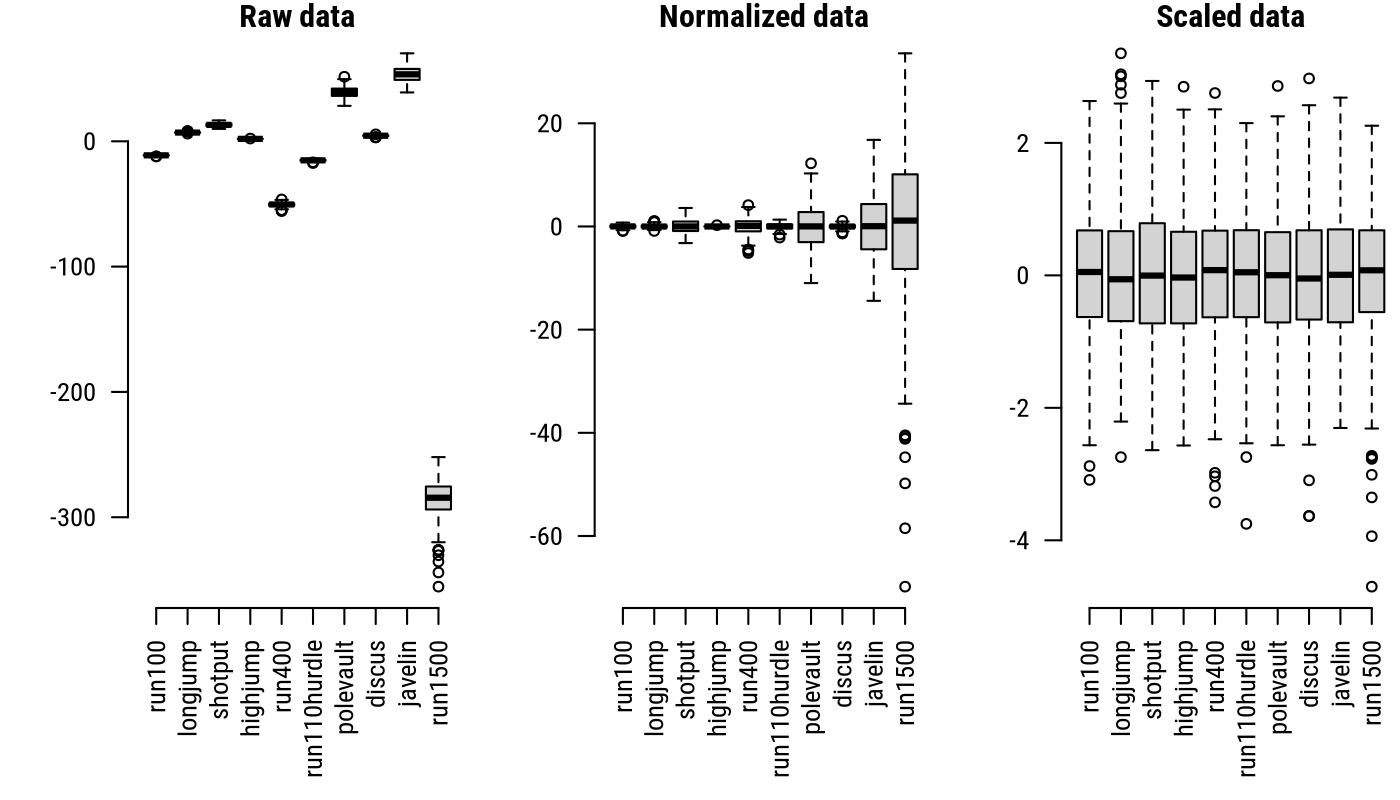

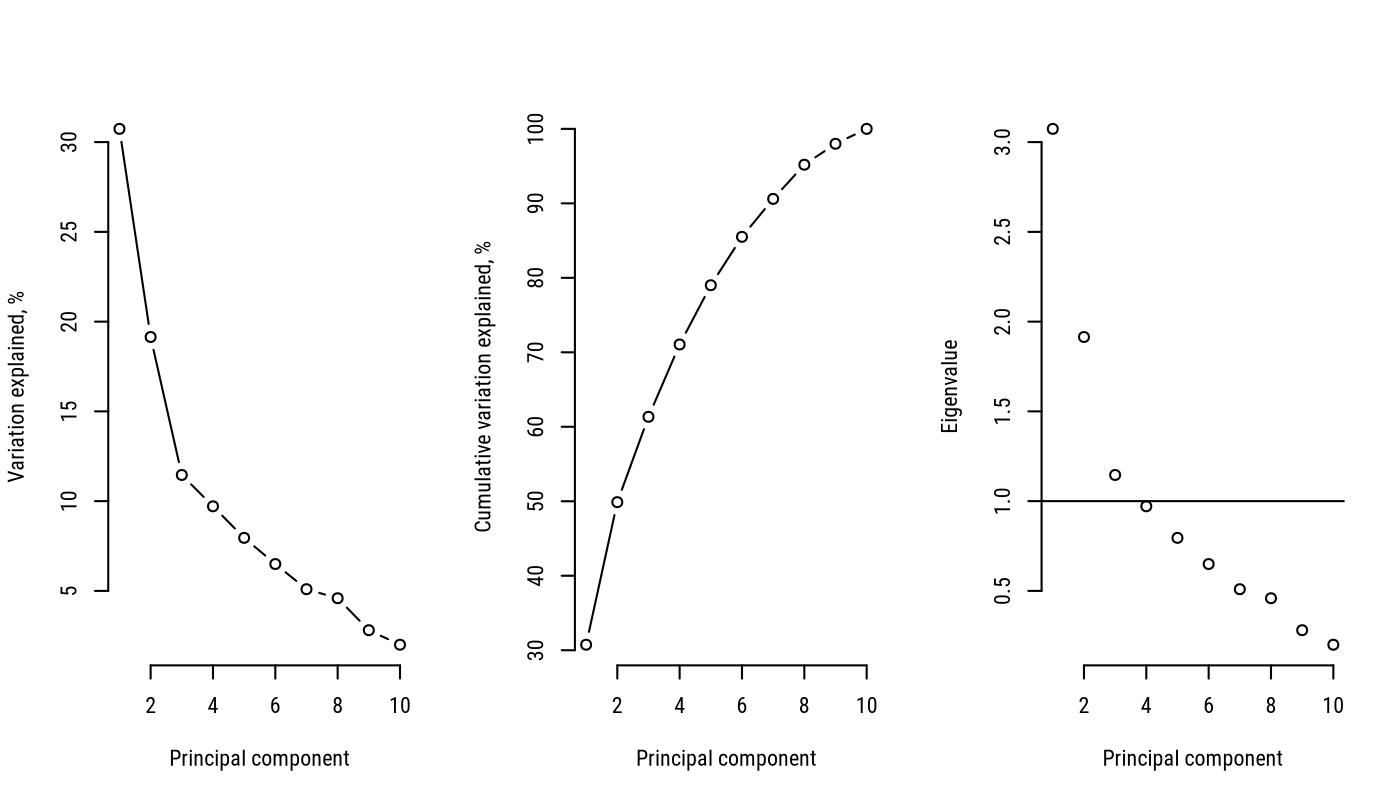

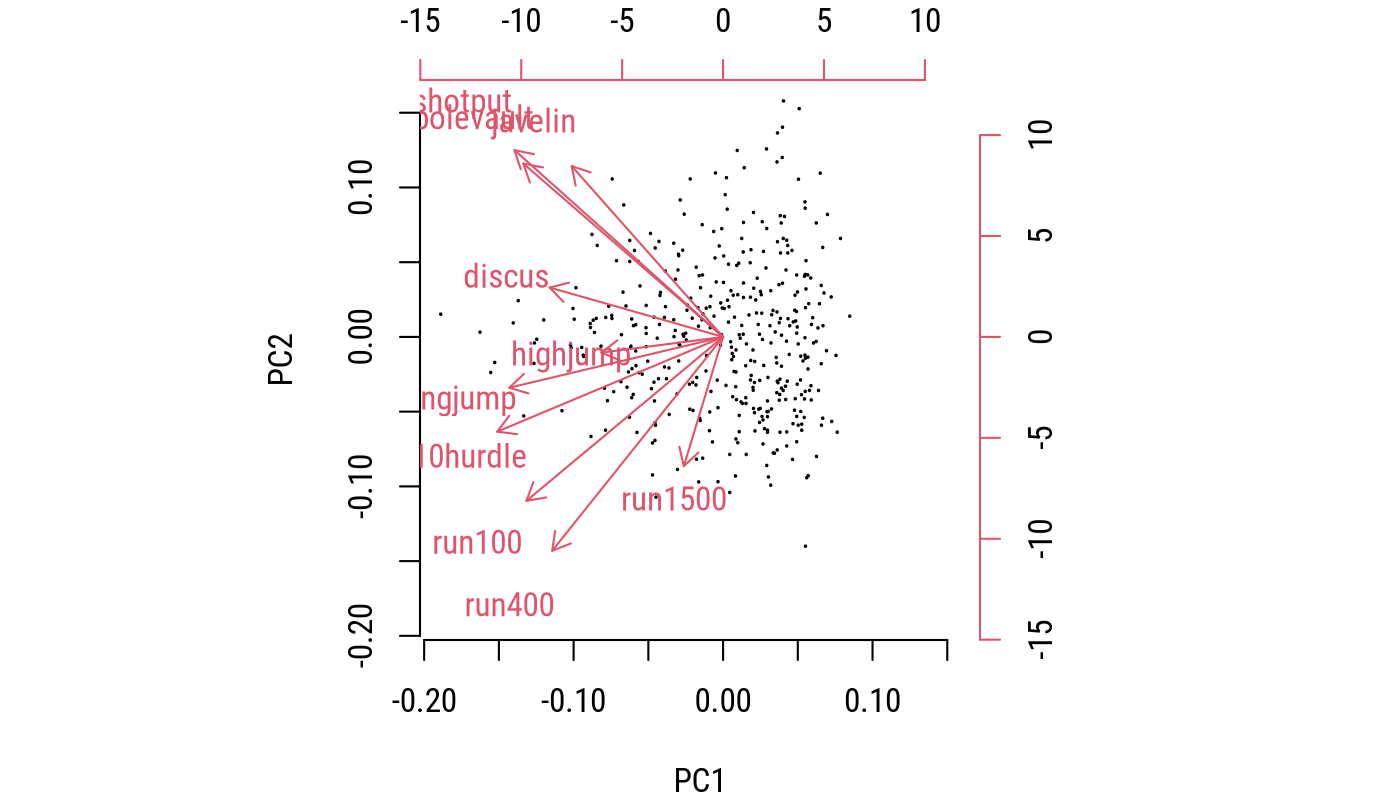

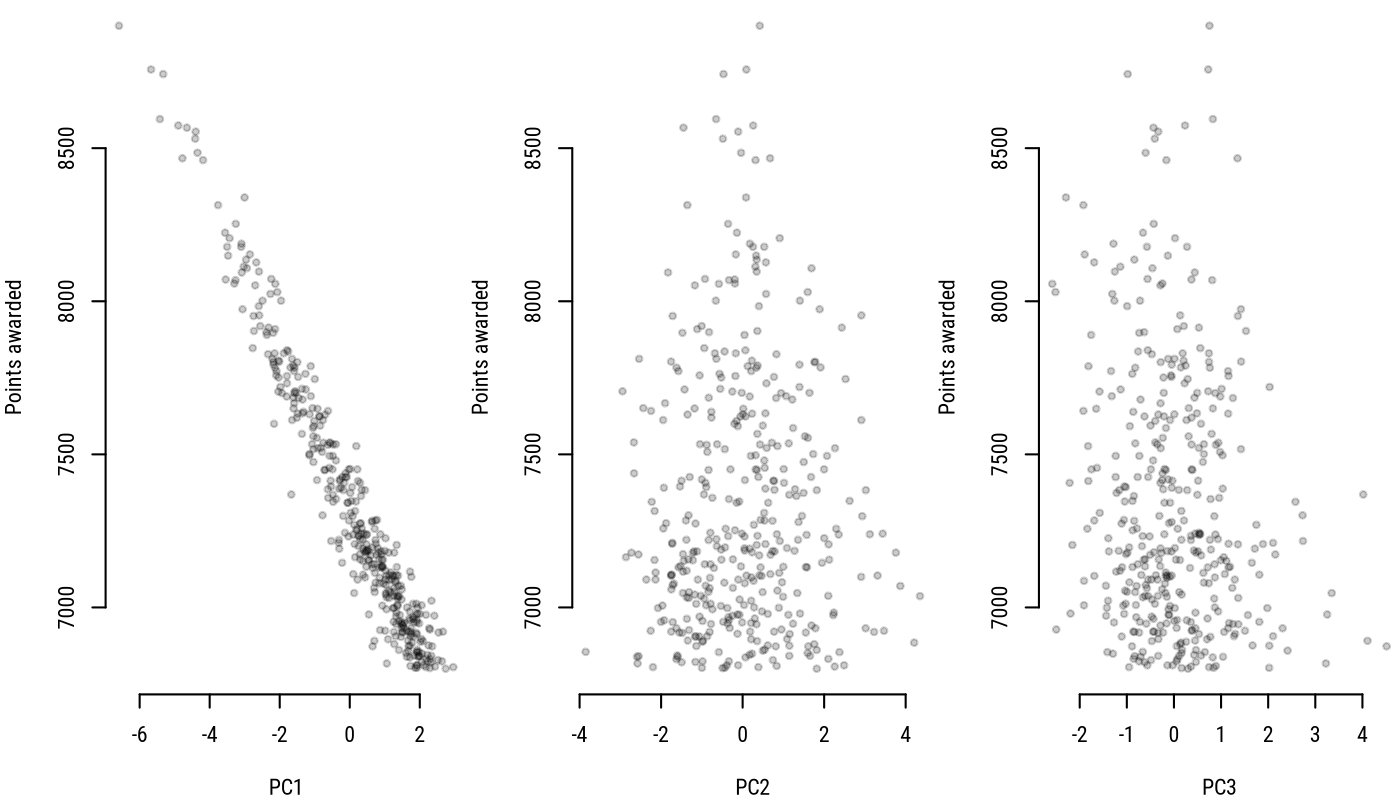

class: center, middle, inverse, title-slide # Principal component analysis ## Research methods ### Jüri Lillemets ### 2021-12-13 --- class: center middle clean # How to summarize variables? --- class: center middle inverse # Intuition --- ## Multivariate statistics In general, we do not test any hypotheses nor estimate statistical significance. There are no response or predictor variables. Response variable is not predicted from a model. Instead we examine the overall **structure of data to find patterns**. *Principal component analysis* (*PCA*) is one of the methods in the field of multivariate statistics. --- ## Principal component analysis The aim is to **summarize continuous variables** into one, few or several new variables. This is done by determining a set of standardized **linear combinations** that would explain a maximum amount of variation in data. A **dimensionality reduction** technique. The maximum number of PCs we can determine is `\(min(p, n - 1)\)`. --- Let's use data on top performances in the *Decathlon* from 1985 to 2006 and examine results for each event in 2000. -- Below are the first rows in the data set. .smaller[ | | m100| Longjump| Shotput| Highjump| m400| m110hurdles| Discus| Polevault| Javelin| m1500| |:----------------|----:|--------:|-------:|--------:|----:|-----------:|------:|---------:|-------:|-----:| |Tomas Dvorak | 10.5| 8.03| 16.7| 2.09| 48.4| 13.9| 47.9| 4.85| 67.2| 282| |Roman Sebrle | 10.6| 7.88| 15.2| 2.15| 49.0| 14.0| 47.2| 4.75| 67.2| 275| |Erki Nool | 10.7| 7.78| 14.1| 1.97| 47.2| 14.4| 44.2| 5.55| 69.1| 276| |Chris Huffins | 10.5| 7.71| 15.3| 2.09| 48.3| 13.9| 49.5| 4.70| 56.6| 279| |Aleksandr Yurkov | 10.7| 7.93| 15.3| 2.03| 49.7| 14.6| 47.9| 5.15| 58.9| 272| |Dean Macey | 10.8| 7.77| 14.6| 2.09| 46.4| 14.5| 43.4| 4.80| 60.4| 263| |Attila Zsivoczky | 10.6| 7.24| 15.7| 2.18| 48.1| 14.9| 45.6| 4.65| 63.6| 263| |Frank Busemann | 10.8| 7.92| 14.5| 2.03| 48.9| 14.7| 43.7| 5.05| 62.8| 263| |Stefan Schmid | 10.8| 7.59| 14.1| 2.01| 49.0| 14.2| 44.2| 5.06| 67.6| 272| |Thomas Pappas | 10.7| 7.41| 15.3| 2.14| 48.9| 14.3| 45.8| 5.10| 63.5| 300| ] -- Why would we want to summarize these variables? --- Variables are linearly correlated. .smaller[ | | m100| Longjump| Shotput| Highjump| m400| m110hurdles| Discus| Polevault| Javelin| m1500| |:-----------|------:|--------:|-------:|--------:|------:|-----------:|------:|---------:|-------:|------:| |m100 | 1.000| -0.440| -0.130| -0.097| 0.644| 0.493| -0.130| -0.154| -0.010| -0.018| |Longjump | -0.440| 1.000| 0.269| 0.355| -0.259| -0.412| 0.186| 0.295| 0.199| -0.044| |Shotput | -0.130| 0.269| 1.000| 0.171| -0.023| -0.268| 0.705| 0.311| 0.493| 0.131| |Highjump | -0.097| 0.355| 0.171| 1.000| -0.105| -0.240| 0.137| 0.100| 0.031| -0.008| |m400 | 0.644| -0.259| -0.023| -0.105| 1.000| 0.454| -0.068| -0.132| 0.004| 0.418| |m110hurdles | 0.493| -0.412| -0.268| -0.240| 0.454| 1.000| -0.239| -0.293| -0.125| 0.086| |Discus | -0.130| 0.186| 0.705| 0.137| -0.068| -0.239| 1.000| 0.300| 0.455| 0.033| |Polevault | -0.154| 0.295| 0.311| 0.100| -0.132| -0.293| 0.300| 1.000| 0.249| -0.074| |Javelin | -0.010| 0.199| 0.493| 0.031| 0.004| -0.125| 0.455| 0.249| 1.000| -0.031| |m1500 | -0.018| -0.044| 0.131| -0.008| 0.418| 0.086| 0.033| -0.074| -0.031| 1.000| ] --- <!-- --> -- > How could we summarise all of this variation into a single score? --- ### Linear combinations Each linear combination is called a principal component (PC). A `\(j\)`th PC can be expressed as linear combination `\(\xi_j\)` as: `$$\xi_j = b_{j1}x_1 + b_{j2}x_2+ ... a_{jp}x_p,$$` where `\(b_{jp}\)` is a weight of variable `\(x_p\)` of a particular `\(\xi_j\)`. .smaller[ | | m100| Longjump| Shotput| Highjump| m400| m110hurdles| Discus| Polevault| Javelin| m1500| |:----------------|----:|--------:|-------:|--------:|----:|-----------:|------:|---------:|-------:|-----:| |Tomas Dvorak | 10.5| 8.03| 16.7| 2.09| 48.4| 13.9| 47.9| 4.85| 67.2| 282| |Roman Sebrle | 10.6| 7.88| 15.2| 2.15| 49.0| 14.0| 47.2| 4.75| 67.2| 275| |Erki Nool | 10.7| 7.78| 14.1| 1.97| 47.2| 14.4| 44.2| 5.55| 69.1| 276| |Chris Huffins | 10.5| 7.71| 15.3| 2.09| 48.3| 13.9| 49.5| 4.70| 56.6| 279| |Aleksandr Yurkov | 10.7| 7.93| 15.3| 2.03| 49.7| 14.6| 47.9| 5.15| 58.9| 272| |Dean Macey | 10.8| 7.77| 14.6| 2.09| 46.4| 14.5| 43.4| 4.80| 60.4| 263| ] ??? PCs are thus essentially weighted sum of variables. We need to give each sports event some weight. --- ## Estimation of PCs We can think of the estimation as a process starting from estimation of first PC. The first PC is chosen so that 1. it follows the direction of largest variance in data cloud and 2. it is a line with smallest orthogonal distance to all data points. Each following PC is chosen so that it is orthogonal to preceding PC. --- Suppose we have two variables: <!-- --> --- The line that maximizes variation and has smallest orthogonal distance to data points is the first PC. <!-- --> -- > What does this line remind you of? --- PCA is somewhat similar to least squares estimation. <!-- --> OLS minimizes residuals with respect to response, PC1 minimizes orthogonal distances to data points. ??? Except that in PCA we consider variation in all variables (not just the response) --- Each following PC is orthogonal to the first PC. <!-- --> Each PC explains the variation unexplained by previous PC as much as possible. --- The process involves transforming data points to a new coordinate system: 1. centering data points, 2. scaling the axes so that they would be equal, 3. rotating axes into the direction of a PC. --- ### Center <!-- --> We subtract the mean from all variables so that mean value of each PC is 0. --- ### Scale <!-- --> We divide each variable by its standard deviation so that PCs have similar scales relative to variables. --- ### Rotate <!-- --> We rotate the data so that each PC represents an axis. --- How do we know how exactly to scale and rotate axes? We use some parameters derived from data: - for scaling we use *eigenvalues* and - for rotating we use *eigenvectors*. --- ## Calculation There are two methods for calculating eigenvalues and eigenvectors from data, depending on whether we use 1. covariance or correlation matrix `\(\Sigma\)` of data matrix `\(X : n \times p\)` or 2. the raw data matrix `\(X : n \times p\)`. --- ### Spectral decomposition This calculation uses correlation or covariance matrix `\(\Sigma\)`. Because `\(\Sigma = V D V^T\)`, we can find eigenvalues from `\(D\)` and corresponding eigenvectors from `\(V\)`. Computationally more simple. --- ### Singular value decomposition This calculation uses data matrix with raw values `\(X : n \times p\)`. Because `\(X = UDV^T\)`, we can find eigenvalues from `\(D\)` and corresponding eigenvectors from `\(U\)`. Numerically more stable. --- Because PCA involves maximizing variation, variables with higher variance are given more importance. Consider scaling variables ( `\(x\prime = (x - \mu) / \sigma\)` ) prior to estimation. <!-- --> --- ## Number of PCs Aside from theoretical considerations, there also several possible criteria for determining the number of estimated PCs: 1. choose a threshold of variation in data that PCs should cumulatively explain, e.g. 50%; 2. use PCs that have eigenvalue above average (one); 3. use PCs that are above an "elbow" in scree plot. --- The choice can be made by looking at scree plots. <!-- --> --- class: center middle inverse # Interpretation --- ## Variation explained The proportion of total *variation explained* by a `\(j\)`th PC can be found from its eigenvalues: it is the proportion of sum of eigenvalues, i.e. `\(\lambda_j / \Sigma^p_{j=1} \lambda\)`. Total variation explained by PCs illustrates how much information is preserved when summarizing data into a particular number of PCs. -- | | PC1| PC2| PC3| PC4| PC5| PC6| PC7| PC8| PC9| PC10| |:-----------------------|---:|---:|---:|---:|---:|---:|---:|---:|---:|----:| |Variation explained, % | 31| 19| 11| 10| 8| 6| 5| 5| 3| 2| |Cumulative variation, % | 31| 50| 61| 71| 79| 86| 91| 95| 98| 100| -- > How much of total variation in data do the first two PCs explain? --- ## Loadings Variables "load" PCs and thus each variable has a particular *loading* on each PC. These can be understood as *weights*. .smaller[ | | PC1| PC2| PC3| PC4| PC5| PC6| PC7| PC8| PC9| PC10| |:------------|------:|------:|------:|------:|------:|------:|------:|------:|------:|------:| |run100 | -0.349| -0.369| 0.158| -0.443| 0.070| -0.107| -0.250| -0.227| -0.098| 0.617| |longjump | -0.379| -0.114| 0.301| 0.203| -0.170| -0.574| -0.248| 0.488| -0.054| -0.224| |shotput | -0.370| 0.420| -0.019| -0.098| 0.202| 0.164| -0.135| 0.192| 0.735| 0.114| |highjump | -0.216| -0.035| 0.459| 0.689| 0.268| 0.196| -0.004| -0.384| -0.017| 0.092| |run400 | -0.303| -0.481| -0.264| -0.128| 0.202| 0.088| -0.143| -0.302| 0.174| -0.632| |run110hurdle | -0.401| -0.213| 0.102| -0.094| -0.050| 0.241| 0.793| 0.285| -0.061| 0.016| |polevault | -0.354| 0.390| -0.148| -0.095| 0.264| 0.327| -0.257| 0.170| -0.638| -0.114| |discus | -0.308| 0.111| -0.138| 0.122| -0.849| 0.244| -0.131| -0.249| 0.012| 0.010| |javelin | -0.269| 0.384| -0.295| 0.016| 0.099| -0.601| 0.348| -0.440| -0.077| 0.016| |run1500 | -0.070| -0.291| -0.682| 0.478| 0.101| -0.009| -0.060| 0.263| 0.014| 0.366| ] -- > How would you describe these PCs? ??? Loadings can be used to give a theoretical meaning to each PC. This is creative. --- ### Scores Principal component scores are the original variables weighted by PCs. Recall the representation of linear combinations `\(\xi_j = b_{j1}x_1 + b_{j2}x_2+ ... a_{jp}x_p\)`. .smaller[ | | PC1| PC2| PC3| PC4| PC5| PC6| PC7| PC8| PC9| PC10| |:----------------|-----:|------:|------:|-----:|------:|------:|------:|------:|------:|------:| |Tomas Dvorak | -6.59| 0.420| 0.754| 0.278| 0.705| -1.065| -0.114| 0.340| 0.389| 0.218| |Roman Sebrle | -5.67| 0.090| 0.727| 1.223| 0.852| -1.027| 0.229| -0.056| -0.424| 0.516| |Erki Nool | -5.33| -0.469| -0.982| 0.112| -1.545| -1.310| -0.220| -0.901| -0.275| -0.513| |Chris Huffins | -5.42| -0.651| 0.825| 0.094| 0.968| 0.341| -0.442| 0.533| -0.536| 0.434| |Aleksandr Yurkov | -4.90| 0.259| 0.236| 0.704| -0.580| -0.530| -1.142| 0.848| -0.348| 0.562| |Dean Macey | -4.65| -1.451| -0.435| 1.306| 0.611| -0.658| -0.681| -0.211| 0.353| -0.644| |Attila Zsivoczky | -4.40| -0.108| -0.332| 1.450| 1.731| 0.116| -0.752| -1.239| 0.487| 0.961| |Frank Busemann | -4.41| -0.484| -0.407| 1.187| -0.492| -1.367| -0.828| 0.307| -0.080| 0.324| |Stefan Schmid | -4.35| -0.037| -0.601| 0.592| -0.480| -1.039| 0.519| -0.316| -0.439| 0.226| |Thomas Pappas | -4.78| 0.673| 1.348| 0.300| 0.009| 0.235| 0.087| -1.294| 0.045| 0.083| ] --- ## Biplot <!-- --> -- > How would you interpret these first two PCs? --- How are our results related to actual points given to athletes? <!-- --> ??? It seems like technical skills are given higher importance. Methodology for total points calculation is from 1985. --- class: center middle inverse # Practical application --- Use the data set `UN98`. > Are variables linearly correlated? > Summarize the social indicators into a single index. What are the weights of these variables? > Use the index to describe social situation in world regins. --- class: inverse