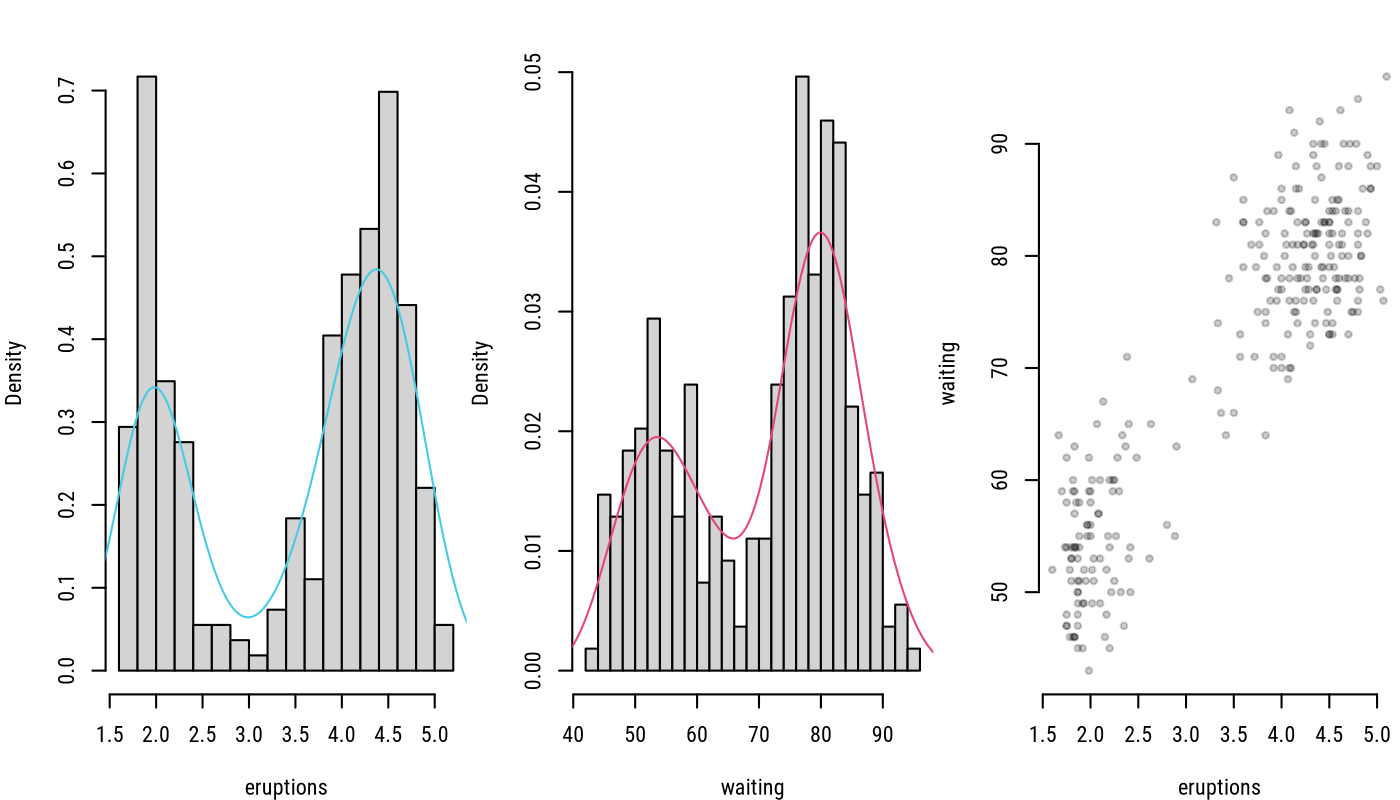

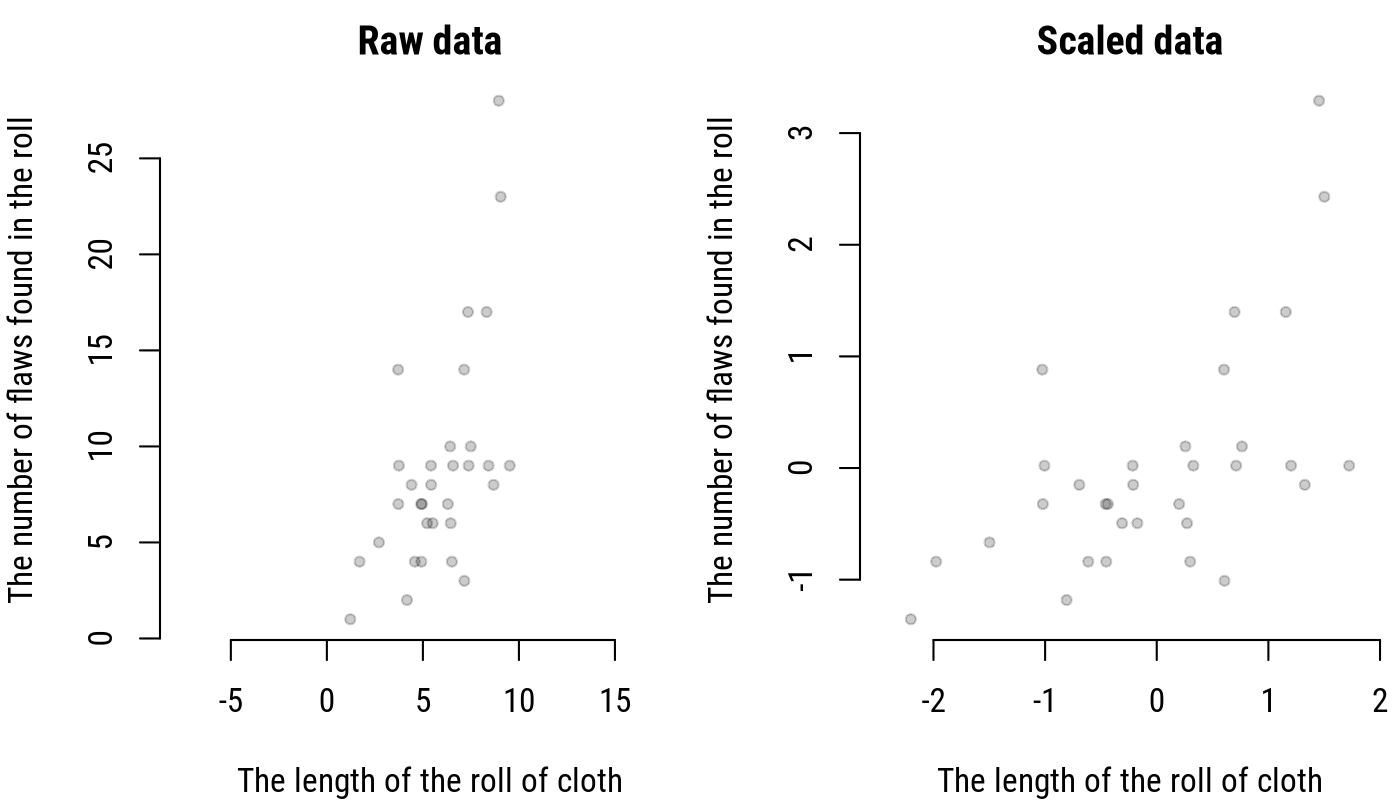

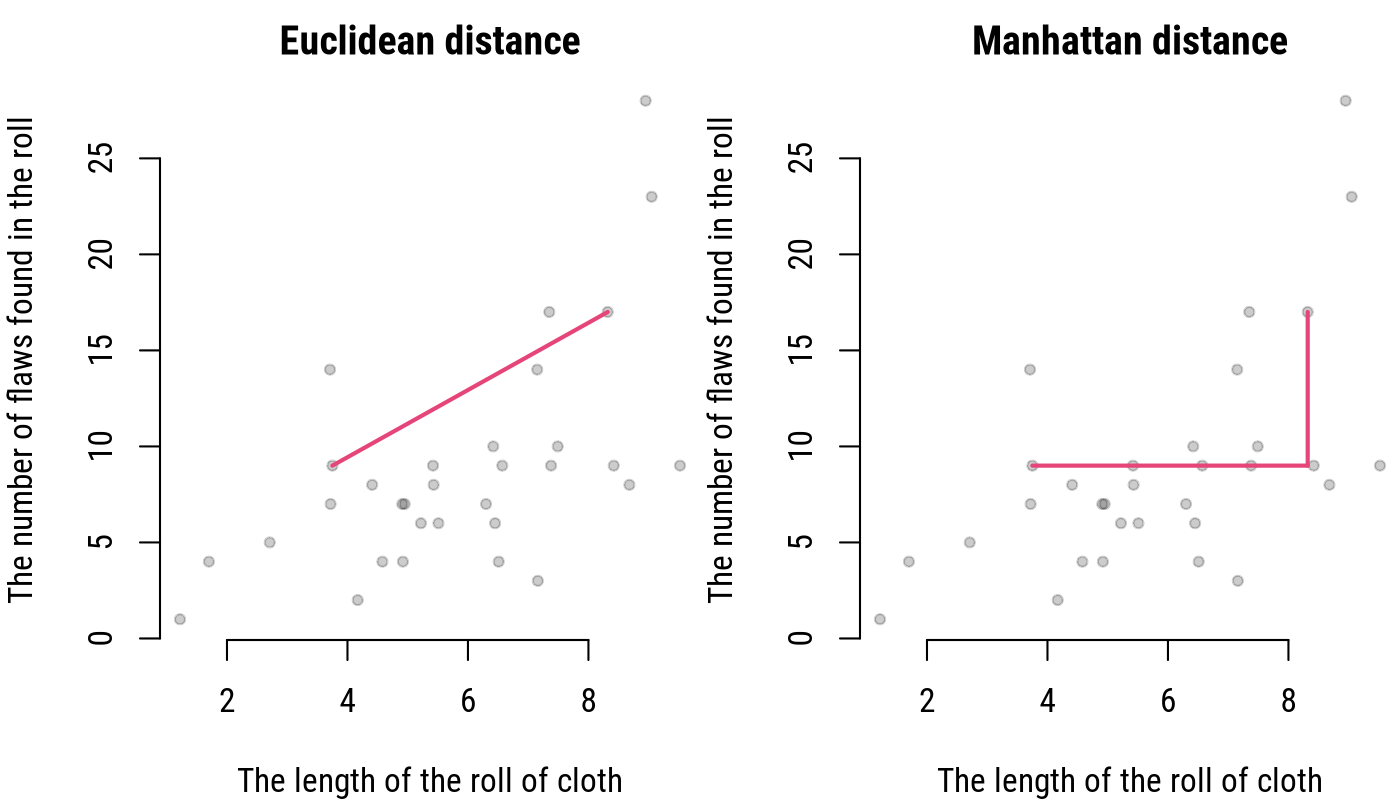

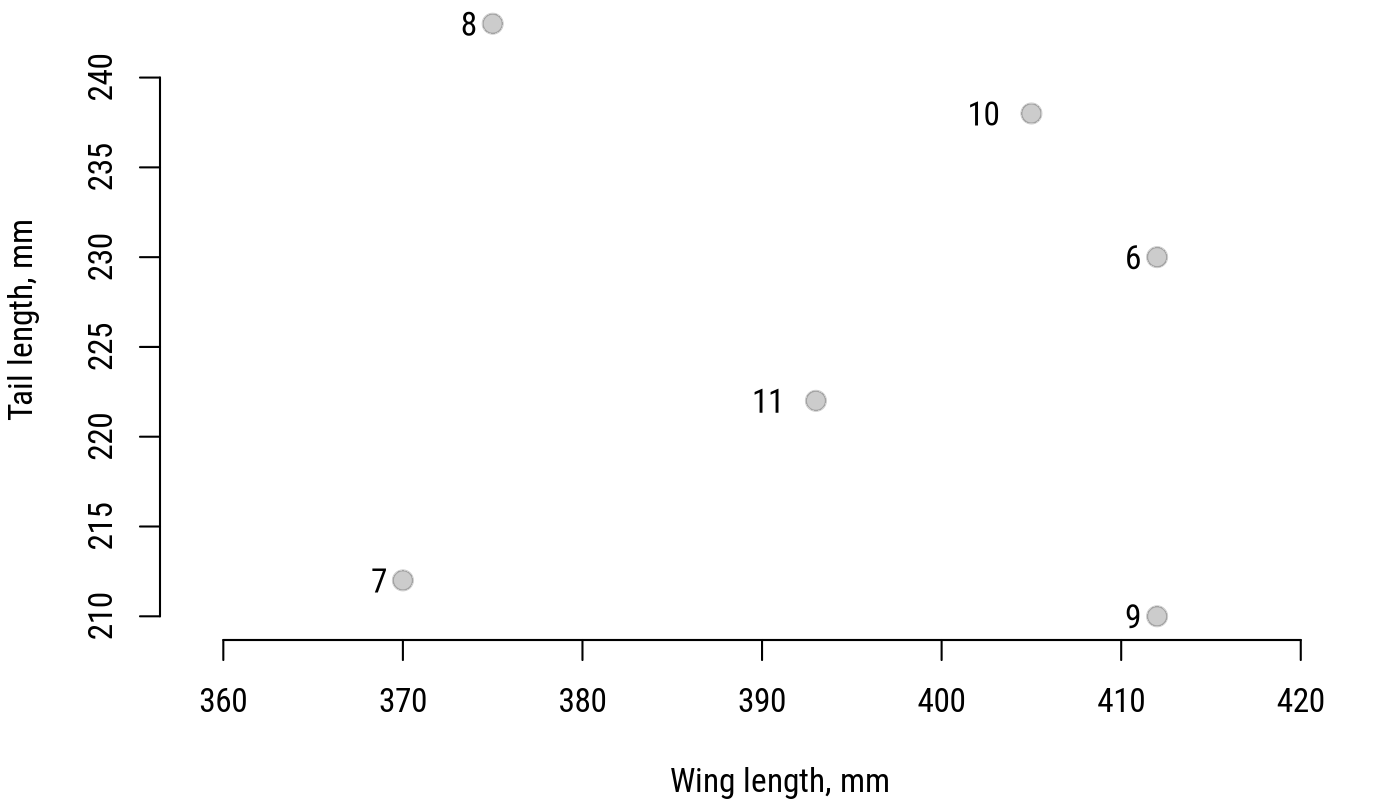

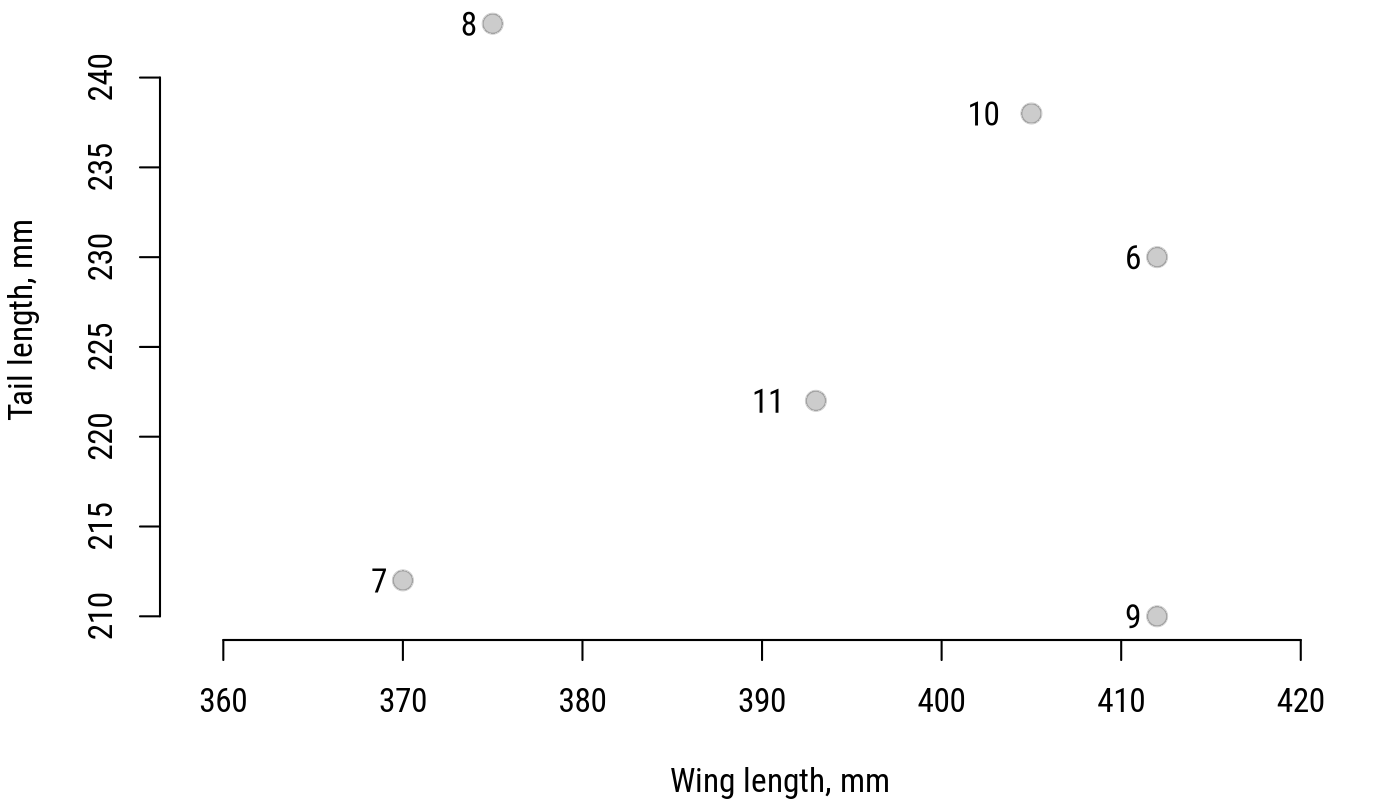

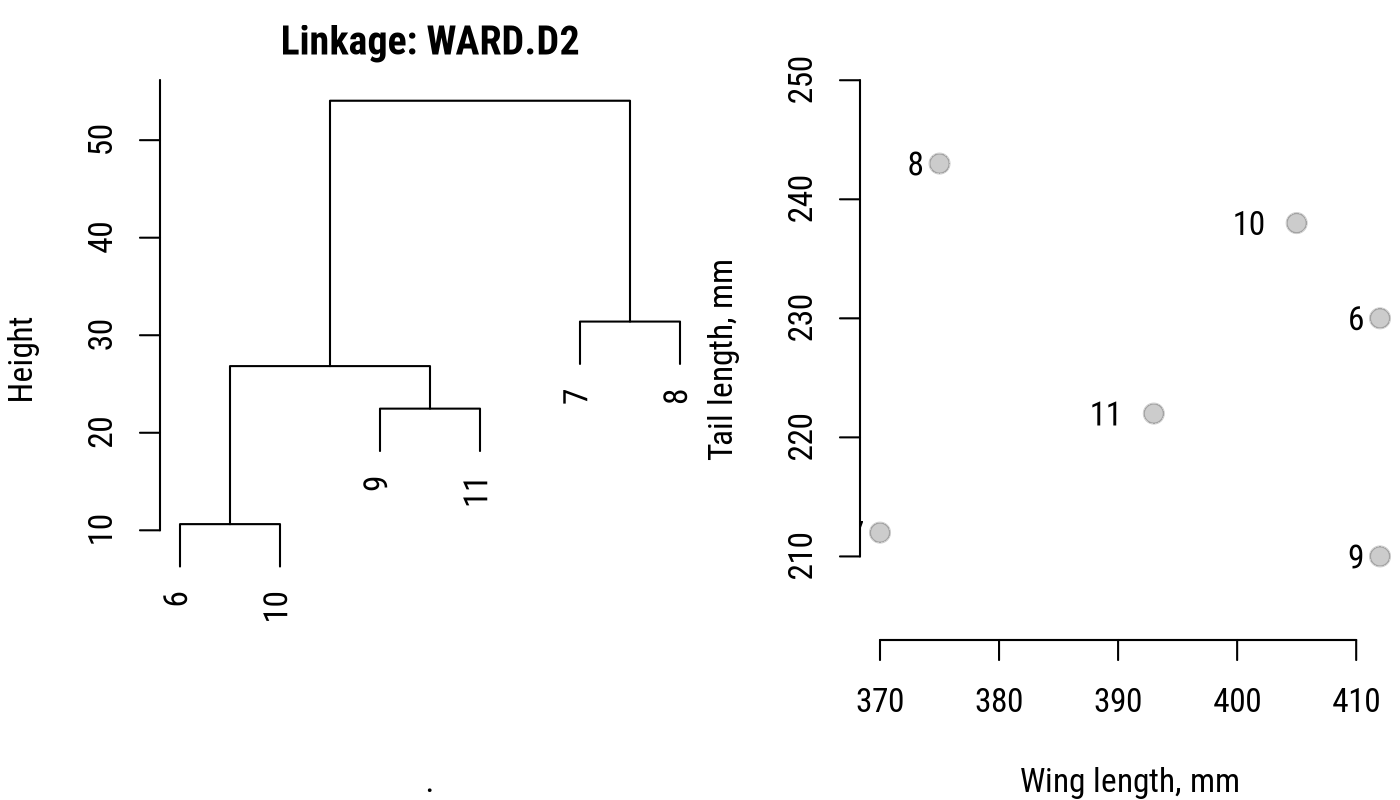

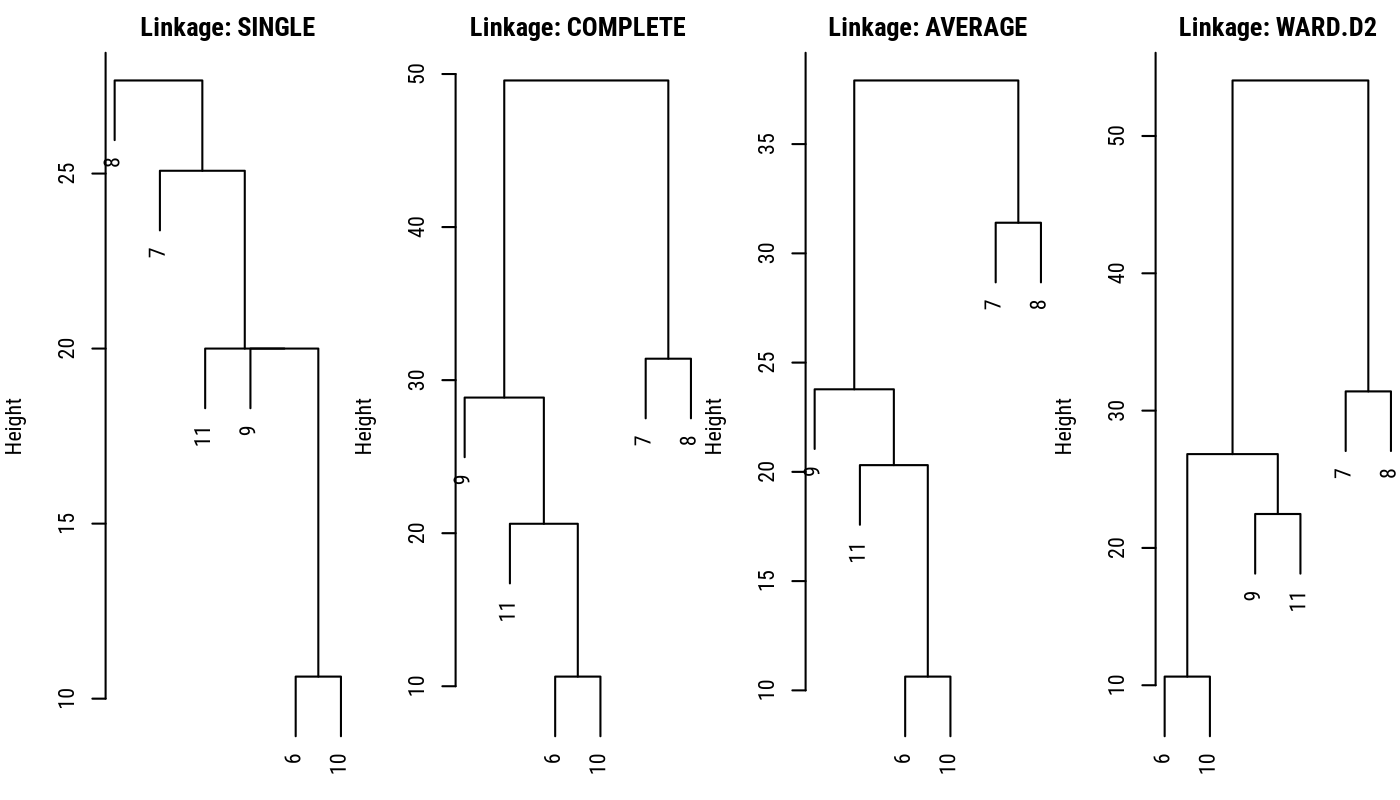

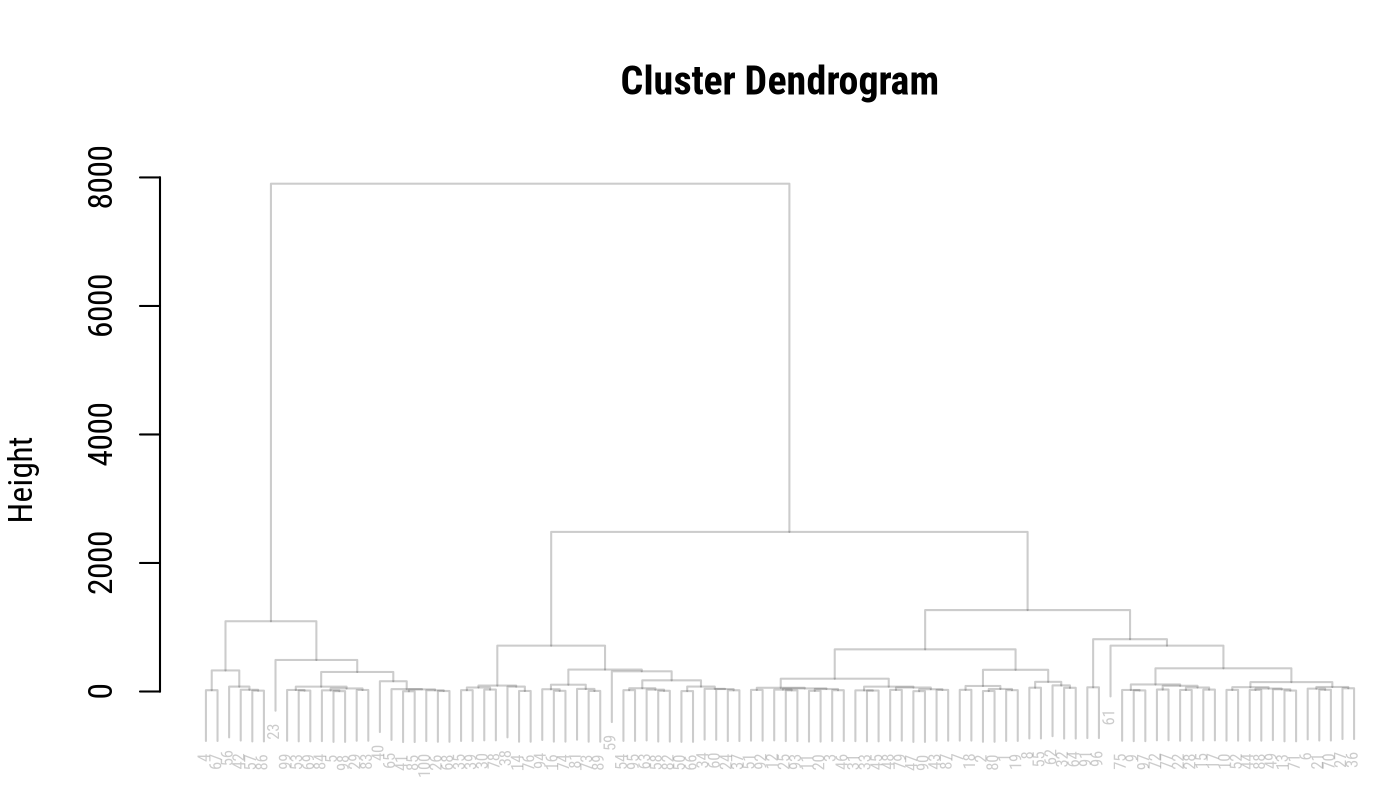

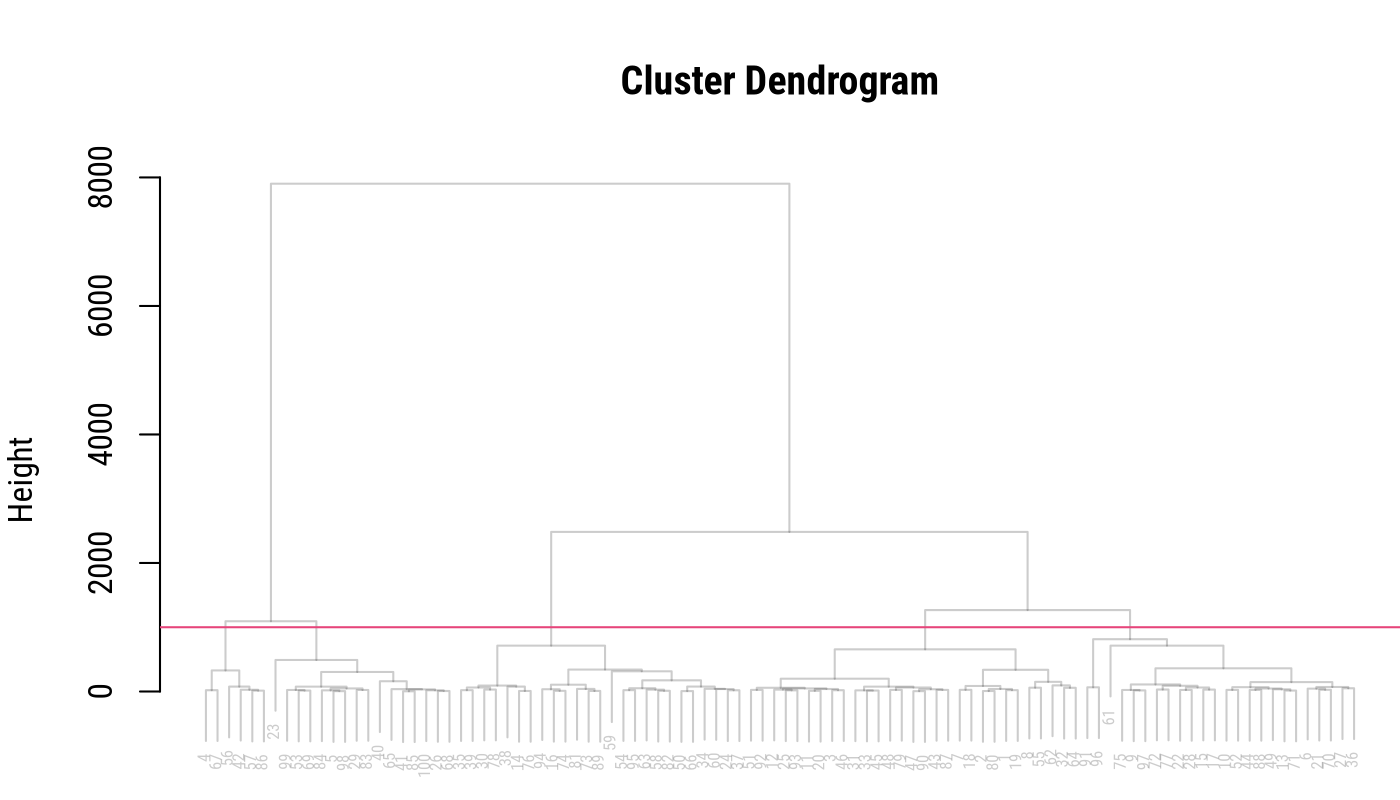

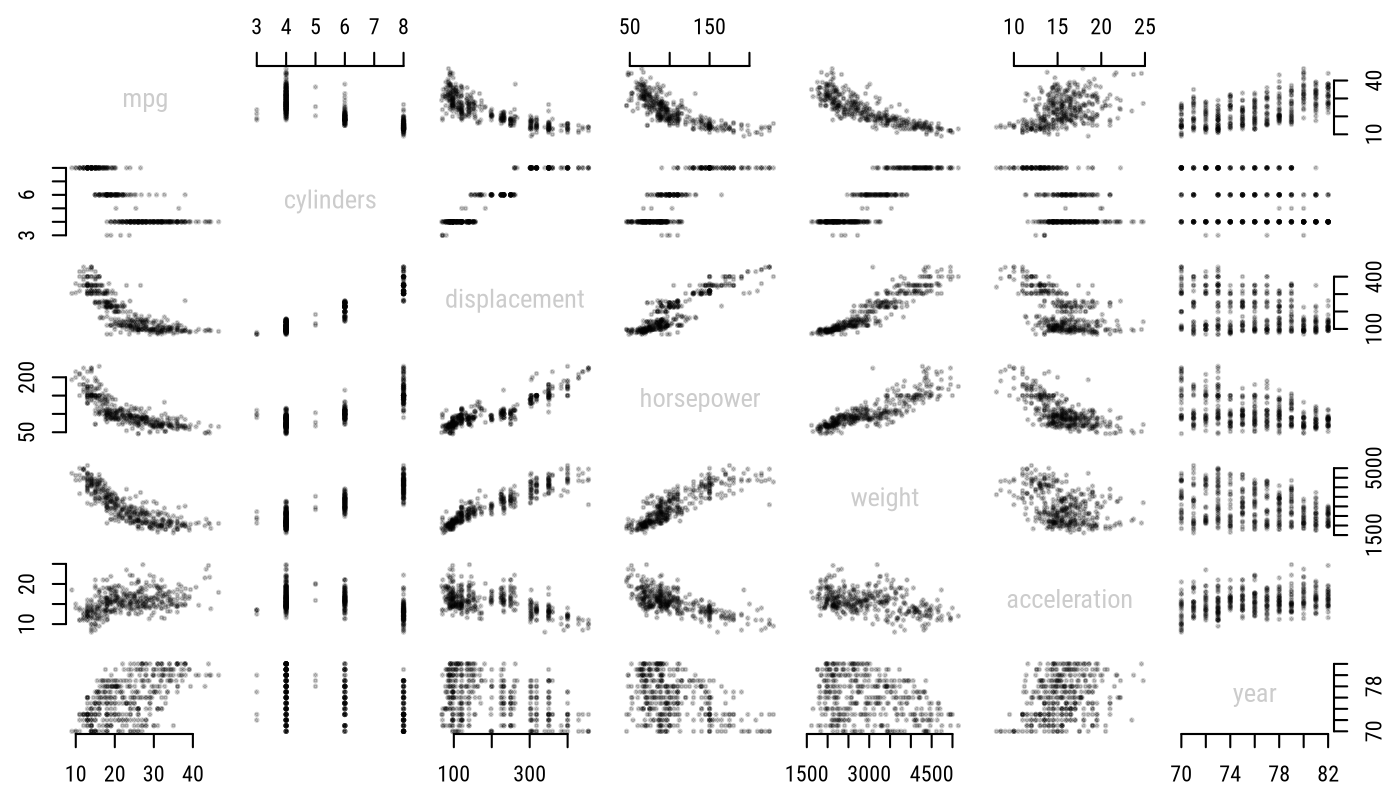

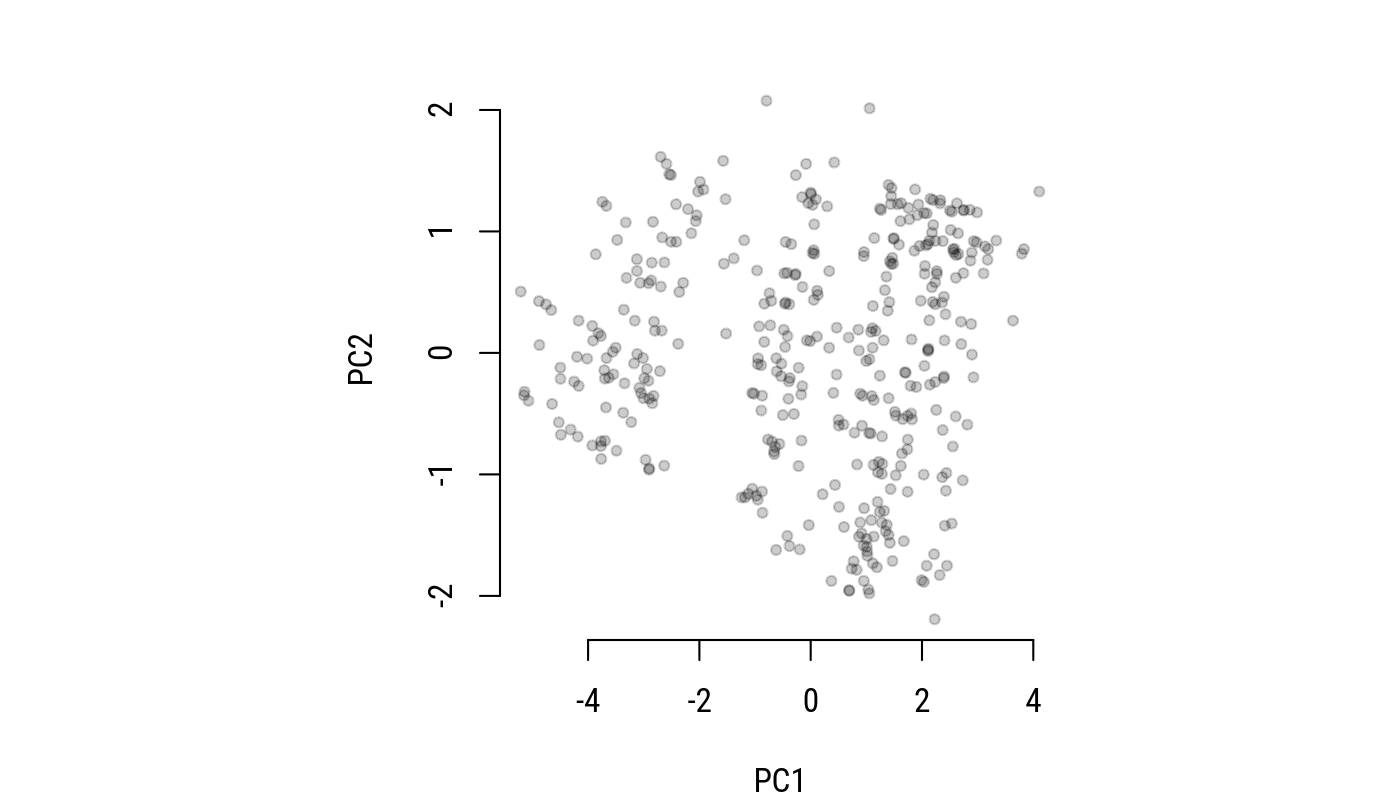

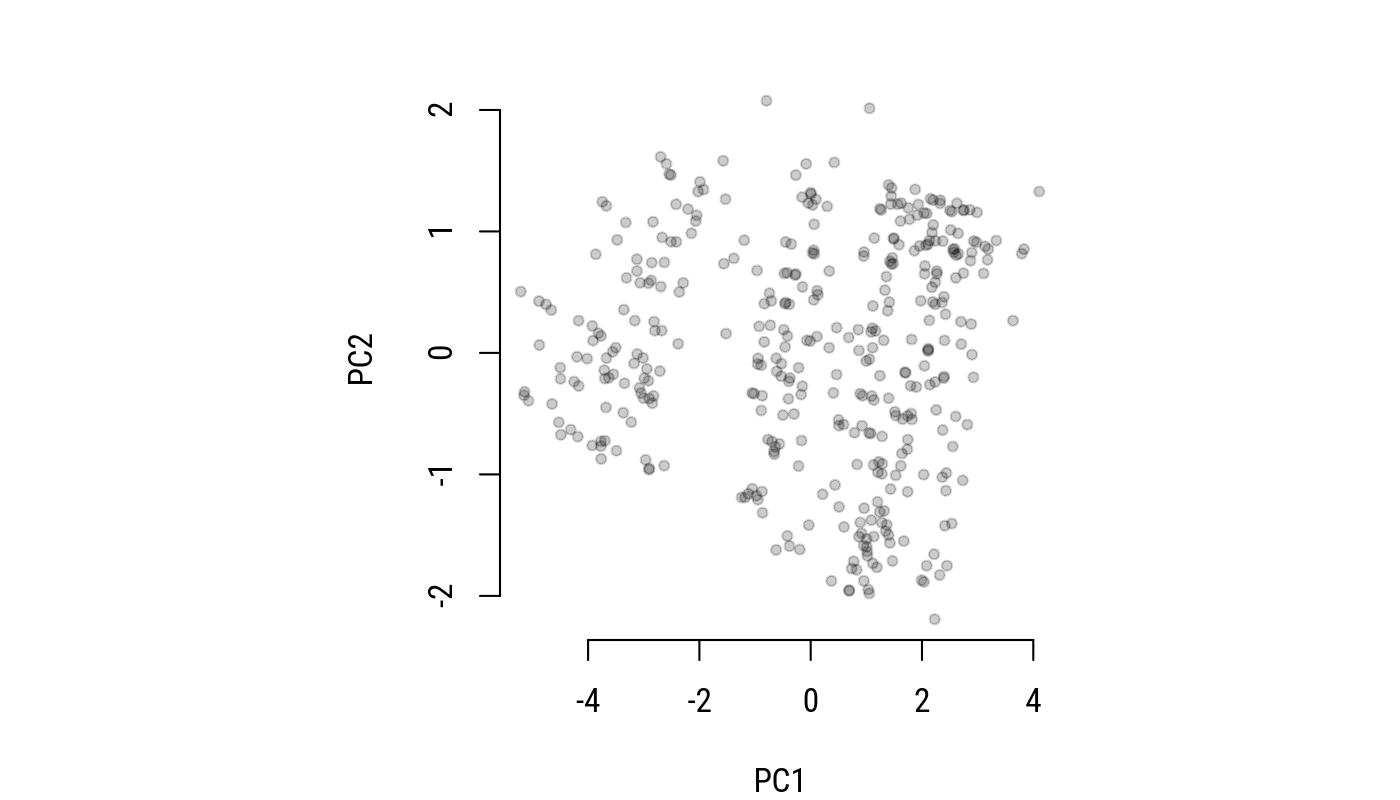

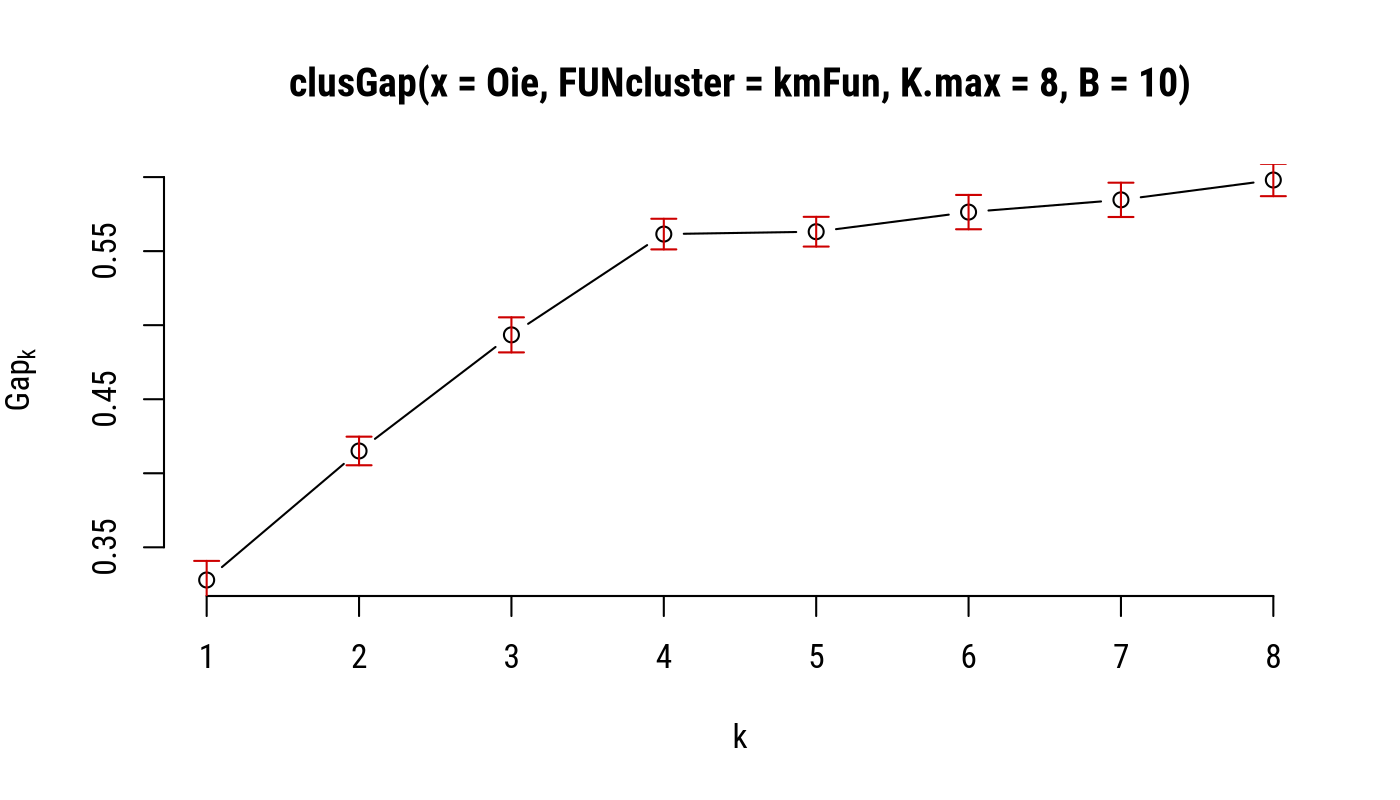

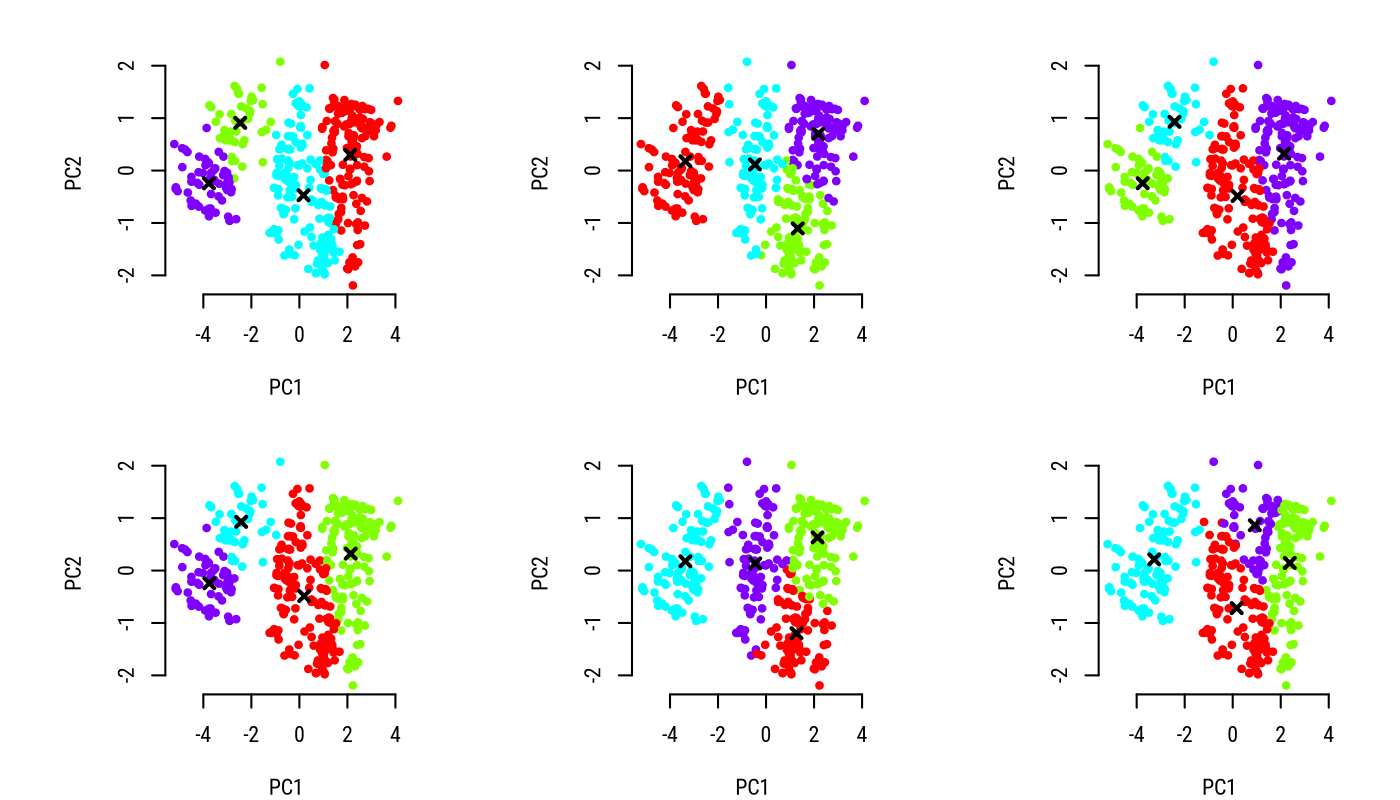

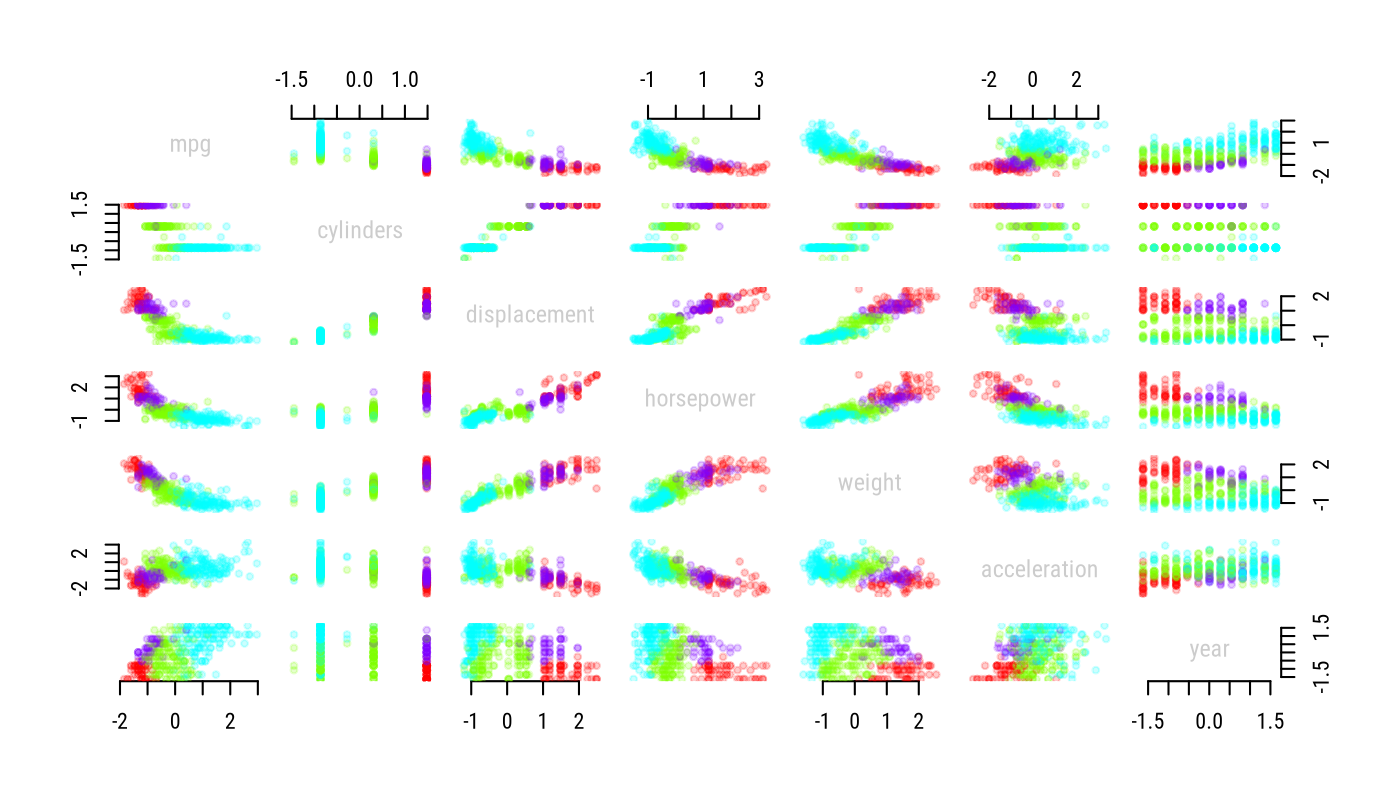

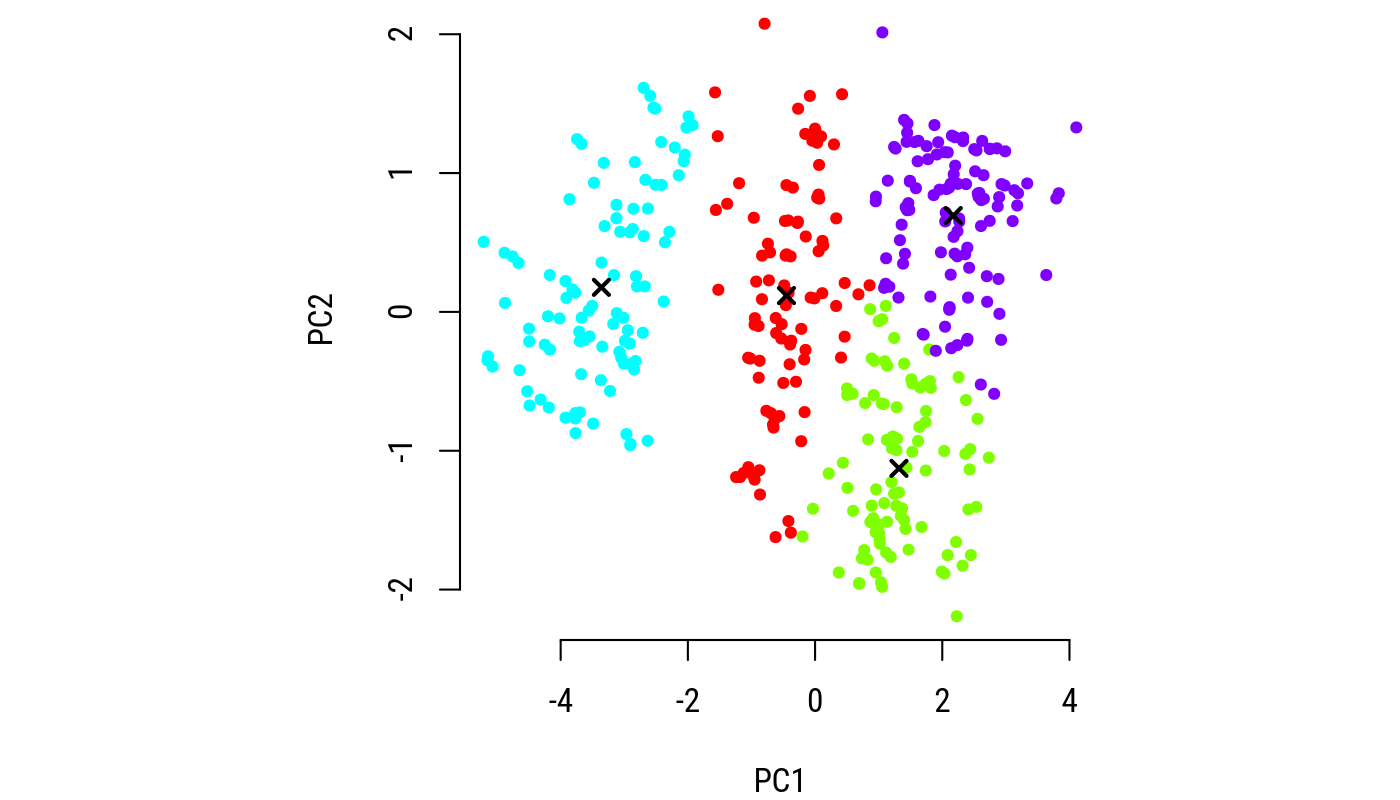

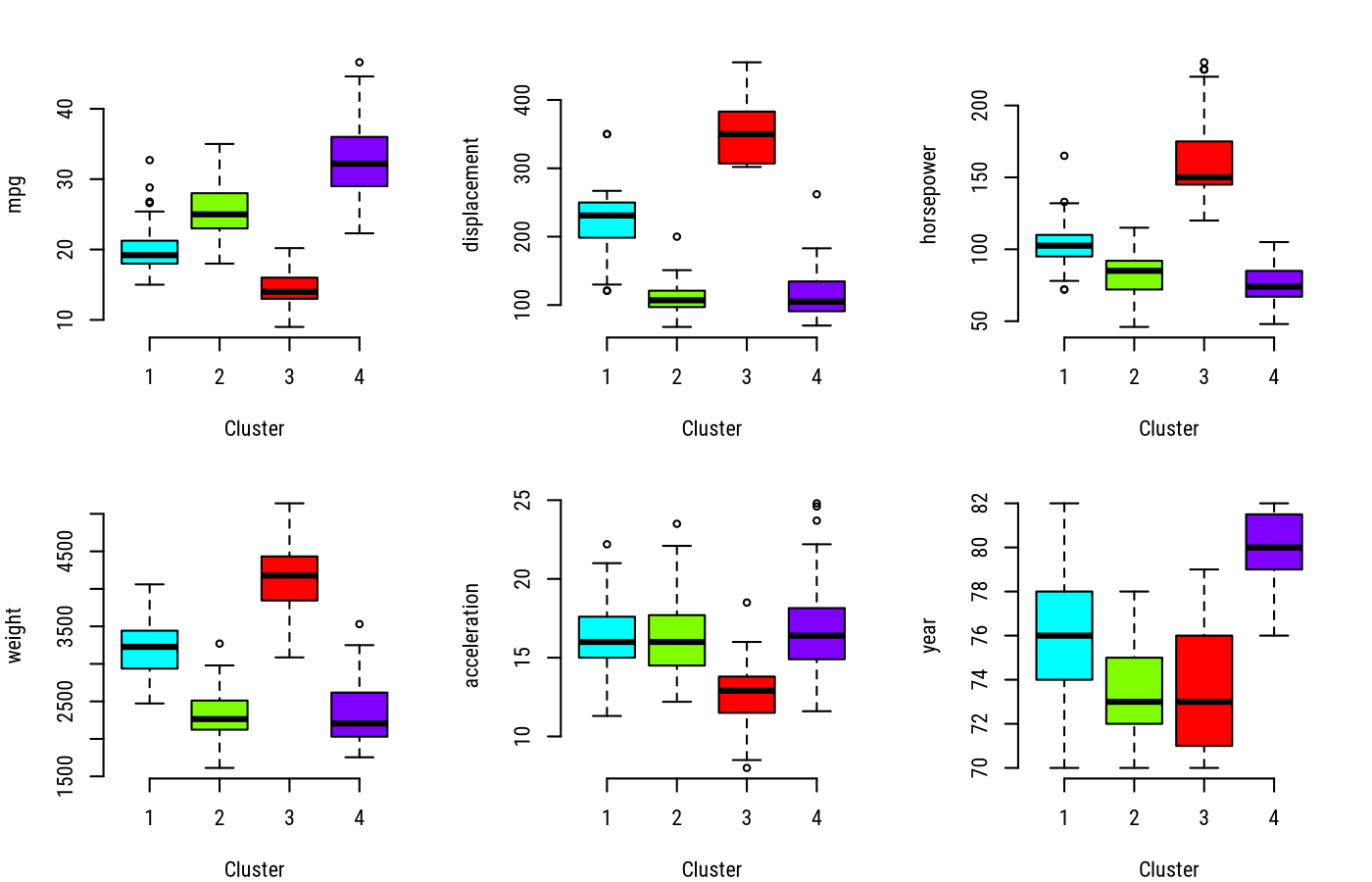

class: center, middle, inverse, title-slide

# Cluster analysis
## Research methods
### Jüri Lillemets
### 2021-12-15

---
class: center middle clean

# How to group objects?

---
class: center middle inverse

# What is clustering?

---

## The idea behind clustering

The purpose of cluster analysis is to **categorize objects into homogeneous groups**

--

so that **objects within the same group are more closely related to each other than to objects in other groups**.

--

The categorization is based on **similarities between objects** according to a set of variables.

---

There are various methods for categorizing objects:

- classification,
- **clustering**,
  - model-based methods (e.g. mixture models),
  - **distance-based (combinatorial) methods**,
    - **hierarchical clustering**,
    - **partitioning (K-means clustering)**.

---

### Clustering and classification

In clustering we do not have any information on possibly existing classes, so we cannot compare clusters to classes.

In classification we already know the classes and use that information to determine classification rules.

--

We used classification after estimating a logistic regression model.

---

### Model- and distance-based clustering

Model-based methods assume that an underlying model can explain the clusters in the data (e.g. mixture models).

Distance-based methods use distances between objects and clusters to separate objects into clusters.

---

Mixture models assume that objects are drawn from a mixture of distributions. Objects can thus be clustered by locating the component densities in the data.

Distance-based methods rely on distances between objects instead.

<!-- -->

---

### Hierarchical clustering and partitioning

In hierarchical clustering, clusters are constructed **incrementally** according to the similarities between objects and clusters.

In partitioning, objects are assigned to a given number of groups and the optimal clustering is determined **iteratively**.
--

While partitioning is intrinsically a *divisive* process, *hierarchical clustering* can be applied *agglomeratively* as well as *divisively*.

---

## Why objects?

It is more common to assign observations to clusters. However, we can cluster either observations or variables. That is why we refer to the phenomena being assigned to clusters as **objects**.

---

## Standardization

Variables with higher variances have a greater influence on how the objects are clustered. If this is not desired, the variables should be standardized prior to clustering.

Conversely, sometimes it might be desirable to give more weight to particular variables.

---

Why should we standardize?

<!-- -->

--

The distances between objects are scale-dependent.

---

## Application

Clustering can be applied in practice for various purposes.

Marketing and sales - find homogeneous groups of customers so that promotional campaigns can be targeted more accurately and thus more efficiently.

Medicine - cluster patients with similar symptoms or predispositions for treatment or discovery of risk.

Finance - categorize enterprises into different types based on financial or other characteristics.

Biology - assign plants to species depending on the characteristics they share.

--

We can cluster anything we want.

---

## Distance measures

Objects should be assigned to groups so that they are more similar within groups than between groups.

--

.pull-left[
We need to somehow measure the distances between all objects.
]

.pull-right.small[
| Length| Flaws|
|------:|-----:|
| 1.22| 1|
| 1.70| 4|
| 2.71| 5|
| 3.71| 14|
| 3.72| 7|
| 3.75| 9|
| 4.17| 2|
| 4.41| 8|
| 4.58| 4|
| 4.91| 7|
]

---

### Distance matrix

For a data matrix `\(X : n \times p\)` with `\(n\)` observations and `\(p\)` variables, the distances `\(d\)` between objects `\(i\)` and `\(j\)` can be collected in a *proximity matrix* or *distance matrix* `\(D : n \times n\)`, where `\(d_{ij} = d(x_i,x_j)\)`.

--

We usually need to calculate this.
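---

The slides show no code, so as an illustration here is a minimal Python sketch of how the distance matrix `\(D\)` could be computed for the ten cloth pieces in the Length/Flaws table, using the Euclidean and Manhattan distances defined on the following slides. The function names `euclidean`, `manhattan` and `distance_matrix` are hypothetical, and the variables are deliberately left unstandardized.

```python
import math

# Length and number of flaws for the ten cloth pieces in the table
cloth = [
    (1.22, 1), (1.70, 4), (2.71, 5), (3.71, 14), (3.72, 7),
    (3.75, 9), (4.17, 2), (4.41, 8), (4.58, 4), (4.91, 7),
]


def euclidean(x, y):
    """Euclidean distance: square root of the summed squared differences."""
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(x, y)))


def manhattan(x, y):
    """Manhattan distance: sum of the absolute differences."""
    return sum(abs(a - b) for a, b in zip(x, y))


def distance_matrix(data, dist):
    """Return the n x n matrix D with D[i][j] = dist(x_i, x_j)."""
    return [[dist(xi, xj) for xj in data] for xi in data]


D = distance_matrix(cloth, euclidean)
D_manhattan = distance_matrix(cloth, manhattan)

print(round(D[0][1], 2), round(D[0][3], 2))  # 3.04 13.24
print(round(D_manhattan[0][1], 2))           # 3.48
```

Python indexes from 0, so `D[0][1]` is the distance between objects 1 and 2. It matches the value 3.04 in the distance matrix on the next slide; a few entries there may differ in the last digit, presumably because the table shows the data rounded to two decimals. Note how `Flaws` dominates both distances because it varies more than `Length`, which is exactly the scale dependence discussed under standardization.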
---

A distance matrix contains the pairwise distances between all objects.

| 1| 2| 3| 4| 5| 6| 7| 8| 9| 10|
|-----:|-----:|----:|-----:|----:|----:|-----:|----:|-----:|----:|
| 0.00| 3.04| 4.27| 13.24| 6.50| 8.39| 3.12| 7.69| 4.50| 7.04|
| 3.04| 0.00| 1.42| 10.20| 3.62| 5.40| 3.18| 4.83| 2.88| 4.39|
| 4.27| 1.42| 0.00| 9.05| 2.24| 4.13| 3.34| 3.45| 2.12| 2.97|
| 13.24| 10.20| 9.05| 0.00| 7.00| 5.00| 12.01| 6.04| 10.04| 7.10|
| 6.50| 3.62| 2.24| 7.00| 0.00| 2.00| 5.02| 1.22| 3.12| 1.19|
| 8.39| 5.40| 4.13| 5.00| 2.00| 0.00| 7.01| 1.20| 5.07| 2.31|
| 3.12| 3.18| 3.34| 12.01| 5.02| 7.01| 0.00| 6.00| 2.04| 5.05|
| 7.69| 4.83| 3.45| 6.04| 1.22| 1.20| 6.00| 0.00| 4.00| 1.12|
| 4.50| 2.88| 2.12| 10.04| 3.12| 5.07| 2.04| 4.00| 0.00| 3.02|
| 7.04| 4.39| 2.97| 7.10| 1.19| 2.31| 5.05| 1.12| 3.02| 0.00|

---

We look at the most common measures for continuous variables, the Euclidean and Manhattan distances:

`$$d_{Euclidean}(x_i,x_j) = [\sum^p_{k=1}(x_{ik} - x_{jk})^2]^{1/2},$$`

`$$d_{Manhattan}(x_i,x_j) = \sum^p_{k=1} |x_{ik} - x_{jk}|.$$`

--

Distance measures for ordinal and nominal variables also exist but are not covered here.

---

Example data on the number of flaws in 32 pieces of cloth.

<!-- -->

---
class: center middle inverse

# Hierarchical clustering

---

Clusters are constructed **incrementally**. This can be done

- **divisive**ly ("top-down"), where we begin with a single cluster and divide it into smaller clusters and eventually into individual objects, or
- **agglomerative**ly ("bottom-up"), where we start by combining objects into clusters and eventually end up with a single cluster.

We will explore agglomerative hierarchical clustering (*Agnes*, agglomerative nesting).

---

The example data we use contain 908 measurements on three hawk species.
.small[
| | Wing| Weight| Culmen| Hallux| Tail| StandardTail| Tarsus| WingPitFat| KeelFat| Crop|
|:---|----:|------:|------:|------:|----:|------------:|------:|----------:|-------:|----:|
|899 | 200| 185| 12.8| 15.2| 158| 166| NA| NA| 4.0| 1.00|
|900 | 360| 1325| 26.2| 30.6| 224| 230| NA| NA| 4.0| 0.75|
|901 | 366| 945| 25.3| 27.2| 199| 205| NA| NA| 2.0| 0.00|
|902 | 402| 1350| 28.7| 31.0| 219| 214| NA| NA| 3.0| 0.00|
|903 | 366| 805| 23.5| 25.7| 217| 222| NA| NA| 1.5| 0.25|
|904 | 380| 1525| 26.0| 27.6| 224| 227| NA| NA| 3.0| 0.00|
|905 | 190| 175| 12.7| 15.4| 150| 153| NA| NA| 4.0| 0.00|
|906 | 360| 790| 21.9| 27.6| 211| 215| NA| NA| 2.0| 0.00|
|907 | 369| 860| 25.2| 28.0| 207| 210| NA| NA| 2.0| 0.00|
|908 | 199| 1290| 28.7| 32.1| 222| 226| NA| NA| 1.0| 0.00|
]

---

We will use the following variables to cluster the hawks.

- `Wing` Length (in mm) of the primary wing feather from the tip to the wrist it attaches to
- `Weight` Body weight (in gm)
- `Culmen` Length (in mm) of the upper bill from the tip to where it bumps into the fleshy part of the bird
- `Hallux` Length (in mm) of the killing talon
- `Tail` Measurement (in mm) related to the length of the tail (invented at the MacBride Raptor Center)
- `StandardTail` Standard measurement of tail length (in mm)
- `Tarsus` Length of the basic foot bone (in mm)
- `WingPitFat` Amount of fat in the wing pit
- `KeelFat` Amount of fat on the breastbone (measured by feel)
- `Crop` Amount of material in the crop, coded from 1=full to 0=empty

---

## Process

Agglomerative hierarchical clustering has the following steps.

1. The initial number of clusters is `\(n\)`, so each cluster contains one object.
2. Calculate the distance matrix `\(D\)` that expresses the pairwise distances between clusters (objects).
3. Find the smallest distance in `\(D\)` and merge the two clusters (objects) separated by it into a single cluster.
4. Calculate a new distance matrix `\(D\)` that now includes the distances between the new cluster and all other clusters, using a linkage method (see below).
5. Repeat the previous two steps until all objects are in a single cluster.

---

Let's illustrate the process with two variables and six hawks.

<!-- -->

---

Here is part of the corresponding initial Euclidean distance matrix `\(D\)`, which contains the pairwise distances between the hawks.

| | 6| 7| 8| 9| 10| 11|
|:--|----:|----:|----:|----:|----:|----:|
|6 | 0.0| 45.7| 39.2| 20.0| 10.6| 20.6|
|7 | 45.7| 0.0| 31.4| 42.0| 43.6| 25.1|
|8 | 39.2| 31.4| 0.0| 49.6| 30.4| 27.7|
|9 | 20.0| 42.0| 49.6| 0.0| 28.9| 22.5|
|10 | 10.6| 43.6| 30.4| 28.9| 0.0| 20.0|
|11 | 20.6| 25.1| 27.7| 22.5| 20.0| 0.0|

---

What if we have more than two variables? For example, three?

*3D scatter plot of the hawks on `Wing`, `Weight` and `Tail`.*

---

## Linkage methods

How do we calculate the distance between a newly merged cluster `\(IJ\)` and another cluster `\(K\)`?

- single linkage: `\(d_{IJ,K} = \min(d_{I,K}, d_{J,K})\)`;
- complete linkage: `\(d_{IJ,K} = \max(d_{I,K}, d_{J,K})\)`;
- average linkage: `\(d_{IJ,K} = \sum_{i \in IJ} \sum_{k \in K} d_{ik} / (n_{IJ}n_K)\)`;
- Ward's method: merges the pair of clusters whose merger gives the smallest increase in the total within-cluster sum of squared distances.

---

How to think about single, complete and average linkage?

<!-- -->

???

Draw linkage results.

---

Single linkage tends to link objects serially, resulting in **clusters with large diameter** in which objects within a cluster need not be similar.

Complete linkage tends to produce **clusters with small diameter**; as a result, an object can be closer to members of another cluster than to members of its own.
Average linkage is a **compromise between the two** but is sensitive to the scale on which distances are measured.

---

There is no correct linkage method.

---

## Dendrogram

In hierarchical clustering the clusters are estimated incrementally, resulting in a **nested structure**. This tree-shaped structure can be visualized with a **dendrogram**.

A dendrogram is easy to interpret and provides a complete description of the clustering process.

---

<!-- -->

---

A dendrogram also allows us to illustrate the differences between linkage methods.

<!-- -->

---

## Number of clusters

In hierarchical clustering we can decide on a suitable number of clusters after the clustering procedure.

The decision can be made by examining the dendrogram: find the longest vertical stretch in which no merges occur (the largest gap between consecutive merge heights) and cut the tree within that stretch.

---

To determine the clusters we can choose either the **height of the cut** or the **number of clusters**.

<!-- -->

---

If we cut at a height of 1000, we obtain 5 clusters.

<!-- -->

--

> At what height would we have to cut if we wished to obtain 3 clusters?

---

Actually, the species of each hawk is already known. How does it coincide with our clusters? Rows are clusters and columns are species (CH = Cooper's hawk, RT = red-tailed hawk, SS = sharp-shinned hawk).

| | CH| RT| SS|
|:--|--:|---:|---:|
|1 | 3| 398| 1|
|2 | 67| 8| 260|
|3 | 0| 171| 0|

---
class: center middle inverse

# K-means clustering

---

Clusters are constructed by *partitioning* objects *iteratively*. The **number of clusters `\(K\)` has to be defined before estimation**.

The goal is to partition objects `\(x\)` into `\(K\)` clusters so that the distances between objects within a cluster are small compared to the distances to objects outside the cluster.

We can achieve this by assigning each object to the closest **centroid**, i.e. cluster mean.

---

We thus need optimal cluster means. The optimal mean vectors `\(\bar x_1, \ldots,
\bar x_K\)` can be found by minimizing the following function:

`$$ESS = \sum^K_{k = 1} \sum_{c(i)=k} (x_i - \bar x_k)^T(x_i - \bar x_k),$$`

where `\(c(i)\)` is the cluster containing `\(x_i\)`.

--

An alternative is **K-medoids clustering**, in which each cluster is represented not by its mean but by an actual object (the medoid).

???

Number of clusters has to be defined before clustering. We attempt to minimize the sum of squared distances within all clusters.

---

Let's attempt to cluster 392 vehicles.

| mpg| cylinders| displacement| horsepower| weight| acceleration| year|
|---:|---------:|------------:|----------:|------:|------------:|----:|
| 18| 8| 307| 130| 3504| 12.0| 70|
| 15| 8| 350| 165| 3693| 11.5| 70|
| 18| 8| 318| 150| 3436| 11.0| 70|
| 16| 8| 304| 150| 3433| 12.0| 70|

--

We should scale the variables (subtract each variable's mean and divide by its standard deviation).

| mpg| cylinders| displacement| horsepower| weight| acceleration| year|
|------:|---------:|------------:|----------:|------:|------------:|-----:|
| -0.698| 1.48| 1.08| 0.663| 0.620| -1.28| -1.62|
| -1.082| 1.48| 1.49| 1.573| 0.842| -1.47| -1.62|
| -0.698| 1.48| 1.18| 1.183| 0.540| -1.65| -1.62|
| -0.954| 1.48| 1.05| 1.183| 0.536| -1.28| -1.62|

---

We have 7 variables, so 7 dimensions. Let's use principal components (PCs) to represent the data.

<!-- -->

---

What do we mean by "partitioning"?

<!-- -->

---

## Process

K-means clustering involves the following steps:

1. Start with an initial partition, based on either
    - a random assignment of objects to `\(K\)` clusters, from which the cluster means are computed, or
    - `\(K\)` (random) initial cluster means.
2. Calculate the squared Euclidean distance between each object and each cluster mean. Reassign each object to its nearest cluster mean; this cannot increase `\(ESS\)`.
3. Update the cluster means.
4. Repeat the previous two steps until no object can be reassigned, i.e. each object is closest to its own cluster mean.

---

Can we see any clusters if we summarize the data into PCs?

<!-- -->

--

> How many clusters would you distinguish?
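---

The K-means steps can be sketched in a few lines of Python. This is a minimal illustration, not the method used to produce these slides: it assumes initialization with `\(K\)` randomly chosen objects as the initial means (one of the options in step 1), and the names `kmeans`, `squared_distance` and `mean` as well as the toy data are hypothetical. It is plain Lloyd-style iteration, whereas R's `kmeans()` uses the Hartigan-Wong algorithm by default.

```python
import random


def squared_distance(x, y):
    """Squared Euclidean distance between two points."""
    return sum((a - b) ** 2 for a, b in zip(x, y))


def mean(points):
    """Coordinate-wise mean of a list of points."""
    n = len(points)
    return tuple(sum(coord) / n for coord in zip(*points))


def kmeans(data, k, max_iter=100, seed=0):
    """Lloyd-style K-means; returns (labels, centroids, ESS)."""
    rng = random.Random(seed)
    # Step 1: use k distinct, randomly chosen objects as the initial means
    centroids = [tuple(p) for p in rng.sample(data, k)]
    labels = None
    for _ in range(max_iter):
        # Step 2: assign each object to its nearest mean
        new_labels = [
            min(range(k), key=lambda c: squared_distance(x, centroids[c]))
            for x in data
        ]
        # Step 4: stop when no object changes cluster
        if new_labels == labels:
            break
        labels = new_labels
        # Step 3: update the means (an empty cluster keeps its old mean)
        for c in range(k):
            members = [x for x, lab in zip(data, labels) if lab == c]
            if members:
                centroids[c] = mean(members)
    # ESS: summed squared distances of objects to their own cluster mean
    ess = sum(squared_distance(x, centroids[lab]) for x, lab in zip(data, labels))
    return labels, centroids, ess


# Hypothetical toy data with two well-separated groups
points = [(1.0, 1.0), (1.5, 2.0), (1.0, 1.5), (8.0, 8.0), (8.5, 9.0), (9.0, 8.0)]
labels, centroids, ess = kmeans(points, k=2)
print(labels, round(ess, 3))
```

On these toy points the first three and the last three objects end up in different clusters and `ess` is about 1.833 (the cluster numbers themselves depend on the random start). Because K-means only finds a local minimum of `\(ESS\)`, it is common to run it from several starts and keep the solution with the smallest `\(ESS\)`; R's `kmeans()` supports this through its `nstart` argument.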
---

## Number of clusters (Gap statistic)

The Gap statistic is a technique used to determine the optimal number of clusters. It compares the within-cluster dispersion `\(W_k\)` (based on the distances between objects within each cluster) with its expected value for uniformly distributed reference data: `\(Gap(k) = E^*[\log(W_k)] - \log(W_k)\)`.

A common rule is to choose the smallest number of clusters `\(k\)` for which `\(Gap(k) \geq Gap(k+1) - se(k+1)\)`, i.e. where the Gap statistic is no more than one standard error below the estimate for the next number of clusters.

---

<!-- -->

> How many clusters should we estimate?

---

We get a better picture the more dimensions we look at.

<div id="rgl85224" style="width:800px;height:450px;" class="rglWebGL html-widget"></div> <script type="application/json" data-for="rgl85224">{"x":{"material":{"color":"#000000","alpha":1,"lit":true,"ambient":"#000000","specular":"#FFFFFF","emission":"#000000","shininess":50,"smooth":true,"front":"filled","back":"filled","size":3,"lwd":1,"fog":true,"point_antialias":false,"line_antialias":false,"texture":null,"textype":"rgb","texmipmap":false,"texminfilter":"linear","texmagfilter":"linear","texenvmap":false,"depth_mask":true,"depth_test":"less","isTransparent":false,"polygon_offset":[0,0],"margin":"","floating":false},"rootSubscene":7,"objects":{"24":{"id":24,"type":"spheres","material":{},"vertices":[[-2.63168549537659,-0.927853226661682,0.533996343612671],[-3.48934125900269,-0.80444473028183,0.648665547370911],[-2.96662354469299,-0.88006180524826,0.957518577575684],[-2.90648293495178,-0.960493505001068,0.582209050655365],[-2.90011978149414,-0.951573312282562,1.05348980426788],[-4.64668846130371,-0.420008331537247,0.993466973304749],[-5.15152215957642,-0.348722904920578,1.35310041904449],[-5.07032632827759,-0.393771827220917,1.52002942562103],[-5.14189100265503,-0.319673269987106,1.02730929851532],[-4.31437921524048,-0.631028056144714,1.64088249206543],[-3.77117085456848,-0.767089307308197,1.16702282428741],[-3.76488995552063,-0.87261825799942,1.76384377479553],[-3.7693657875061,-0.728443801403046,1.1600946187973],[-4.48918867111206,-0.67385071516
037,1.46255922317505],[0.692081987857819,-1.95337212085724,0.486309975385666],[-0.419616967439651,-1.50638771057129,-0.135995075106621],[-0.620969474315643,-1.62180304527283,-0.240779086947441],[-0.197437986731529,-1.6179096698761,-0.296885430812836],[1.05397343635559,-1.97972762584686,0.810216069221497],[2.22771549224854,-2.19242262840271,-1.25477313995361],[0.955205202102661,-1.87673377990723,-0.420131653547287],[0.691713809967041,-1.95818150043488,0.613064348697662],[1.03671586513519,-1.94791781902313,-0.282570987939835],[0.372663259506226,-1.87692475318909,1.4782487154007],[-0.381259918212891,-1.58989822864532,0.0300822649151087],[-4.52591753005981,-0.571372628211975,-0.414466768503189],[-3.92395663261414,-0.761685788631439,-0.675891876220703],[-4.18574237823486,-0.688712477684021,-0.125654920935631],[-3.69787001609802,-0.723528385162354,-1.98583388328552],[1.11627697944641,-1.73278415203094,0.82032710313797],[1.00686538219452,-1.59483325481415,0.453524470329285],[0.772323250770569,-1.71550035476685,0.899979650974274],[-0.865907609462738,-1.3155609369278,0.638873636722565],[-1.17938148975372,-1.18941235542297,-0.502398014068604],[-1.12238574028015,-1.16000127792358,-0.479694753885269],[-0.875388026237488,-1.14073693752289,-0.457908809185028],[-0.977548241615295,-1.17538011074066,-0.416156798601151],[-3.67794156074524,-0.448768615722656,0.296194851398468],[-4.1688380241394,-0.271730482578278,0.37135523557663],[-3.36851859092712,-0.491958498954773,-0.22547972202301],[-3.22354888916016,-0.569558441638947,-0.02631875872612],[-4.49538326263428,-0.213065579533577,0.184181421995163],[-4.25075435638428,-0.235965877771378,0.0627945885062218],[-4.49773502349854,-0.120676450431347,-0.0432354658842087],[-1.24167692661285,-1.18959701061249,0.356561720371246],[1.18976628780365,-1.7643940448761,-1.00364971160889],[-1.04908812046051,-1.11818861961365,-0.236609160900116],[-0.950209081172943,-1.20781540870667,-0.114084333181381],[0.73614376783371,-1.77501654624939,0.7883664965629
58],[1.01915299892426,-1.66970443725586,1.00805795192719],[2.08450126647949,-1.75164699554443,-0.74367743730545],[1.46972906589508,-1.7125928401947,0.896048069000244],[2.31834435462952,-1.82837176322937,-0.465355575084686],[2.44907069206238,-1.75162220001221,0.0589442849159241],[2.03283214569092,-1.88445854187012,-0.663971126079559],[1.99250602722168,-1.87092959880829,-1.17211544513702],[0.914143025875092,-1.48561251163483,0.375189542770386],[1.42250156402588,-1.56305205821991,-0.0794070810079575],[2.21696925163269,-1.65704226493835,-2.40573406219482],[1.00395834445953,-1.53183257579803,-1.14199542999268],[0.951667547225952,-1.58578443527222,-0.0788717344403267],[-3.69833278656006,-0.211122557520866,0.252222120761871],[-4.01639270782471,-0.0469466783106327,0.245247930288315],[-3.07761287689209,-0.287072092294693,-0.157827541232109],[-3.34566164016724,-0.250364243984222,-0.0453445576131344],[-2.89900040626526,-0.375634849071503,0.719595551490784],[-4.87503957748413,0.0647720545530319,0.507240772247314],[-3.54472661018372,-0.1767508238554,-0.351667851209641],[-3.62823724746704,-0.20433583855629,-0.347628384828568],[-4.19857549667358,-0.0316951237618923,0.104493997991085],[0.825591564178467,-1.78533434867859,0.97272127866745],[-3.00499486923218,-0.372950911521912,0.258377522230148],[-2.8451452255249,-0.414354473352432,-0.451231360435486],[-2.82348942756653,-0.354036778211594,-1.11365604400635],[-3.0487174987793,-0.33016762137413,-0.333827137947083],[-0.0340252369642258,-1.41733860969543,0.355616331100464],[1.13260114192963,-1.51156878471375,-0.667376160621643],[0.891631960868835,-1.39542281627655,-1.28869962692261],[1.67305302619934,-1.54937016963959,-0.437780380249023],[0.868664741516113,-1.5133284330368,0.0610466636717319],[1.36679935455322,-1.41501688957214,0.0201793219894171],[0.598041415214539,-1.43277370929718,0.594023466110229],[1.34842538833618,-1.46761333942413,0.654217779636383],[1.39973640441895,-1.49882984161377,0.19259886443615],[-3.55779266357422,0.009415
22140055895,0.039181400090456],[-2.98972725868225,-0.208162873983383,0.630841195583344],[-3.17365741729736,-0.086647242307663,-0.0566643103957176],[-2.7092821598053,-0.15011228621006,-0.516207814216614],[-2.94406628608704,-0.132295787334442,0.292448997497559],[-4.75574398040771,0.399175107479095,0.240632310509682],[-3.76899123191833,0.13903796672821,0.0865144357085228],[-3.50335621833801,0.0430567562580109,-0.122209750115871],[-3.01277375221252,-0.0421661771833897,-0.537622809410095],[-4.88245439529419,0.426137953996658,0.570420026779175],[-5.21013593673706,0.504780888557434,0.496957212686539],[-3.6693480014801,-0.0420929752290249,0.76787656545639],[-0.694477140903473,-0.729185223579407,-0.63669341802597],[-0.765763282775879,-0.712412178516388,-1.28533864021301],[-0.634110867977142,-0.773483097553253,-0.446338295936584],[-0.56155002117157,-0.750194489955902,-0.703096628189087],[-0.164672270417213,-0.721550107002258,-0.257683575153351],[2.41020178794861,-1.42243719100952,-1.42371928691864],[-3.92429304122925,0.221986219286919,-0.800241589546204],[-4.17056322097778,0.265844792127609,-0.177070364356041],[-3.81394243240356,0.162116065621376,-0.172528013586998],[-3.90985369682312,0.100800178945065,0.0604686848819256],[-0.661333918571472,-0.812219500541687,-0.0718340873718262],[1.27587962150574,-1.39629030227661,-0.895649671554565],[1.31840848922729,-1.30011260509491,-1.17600512504578],[0.959955632686615,-1.2782940864563,-0.0368509739637375],[1.01419341564178,-1.63487219810486,0.985979914665222],[1.08852136135101,-1.37687182426453,-0.813974976539612],[-0.218060702085495,-0.931284010410309,0.558543682098389],[1.2012619972229,-1.22605311870575,0.447774171829224],[-3.1174578666687,-0.00880237855017185,-0.0213093366473913],[-4.66223335266113,0.352794885635376,1.40800082683563],[2.53480505943298,-1.40446949005127,-0.77115523815155],[1.2423278093338,-1.30953097343445,0.333263397216797],[0.509156823158264,-1.26756453514099,0.621764063835144],[0.214239209890366,-1.16362464427948,
0.0960156247019768],[-2.91470742225647,-0.228489682078362,0.901013374328613],[0.437750965356827,-1.08682870864868,0.80527925491333],[-0.652873873710632,-0.832958221435547,0.643759906291962],[-3.70873808860779,-0.142764806747437,0.784628510475159],[-0.300282269716263,-0.502968847751617,-0.57269674539566],[-0.49934908747673,-0.511687517166138,-0.38896968960762],[-0.886008381843567,-0.474090695381165,-1.00324058532715],[2.36418771743774,-1.02250528335571,-0.491409748792648],[1.28755617141724,-0.913217902183533,0.00653791753575206],[2.73208785057068,-1.04944062232971,-1.07013690471649],[1.22490239143372,-0.896950006484985,-0.257192581892014],[-1.05191576480865,-0.329893708229065,-1.11493527889252],[-1.01868414878845,-0.335898071527481,-1.35388255119324],[-0.872001469135284,-0.352103292942047,-0.786509931087494],[-2.67780327796936,0.183905869722366,-0.297244161367416],[-3.35795855522156,0.355644524097443,-0.741319298744202],[-3.16097640991211,0.265496283769608,-0.2752286195755],[-2.81555676460266,0.257331788539886,-1.17229819297791],[-2.79952073097229,0.183410376310349,-0.844387412071228],[1.61917781829834,-0.929834604263306,0.217048853635788],[1.74136853218079,-1.14267480373383,0.472873121500015],[1.27915692329407,-0.995623409748077,0.718361556529999],[2.42982339859009,-1.13419449329376,0.352670729160309],[2.43886137008667,-0.988389015197754,-0.505967557430267],[1.52815222740173,-1.0076402425766,0.834641873836517],[1.43566429615021,-1.1206259727478,0.384551882743835],[1.2088565826416,-0.982539117336273,0.8663729429245],[0.833578586578369,-0.918156325817108,0.490828841924667],[1.12816905975342,-0.921902000904083,0.420566916465759],[2.03001666069031,-1.00169885158539,0.46372389793396],[-0.530352115631104,-0.191865235567093,-0.512185513973236],[-0.888820707798004,-0.10154115408659,-0.588408589363098],[-0.149865210056305,-0.27387061715126,-2.44295191764832],[-0.171201601624489,-0.342555552721024,-1.86819338798523],[-3.86212086677551,0.811911463737488,0.389356136322021],[-3.
06409358978271,0.577251851558685,-0.441252797842026],[-2.91337203979492,0.573738694190979,-0.536330163478851],[-3.30957078933716,0.617790579795837,-0.370580762624741],[-0.618859767913818,-0.0438417606055737,-2.34571003913879],[-0.946181178092957,-0.0449847504496574,-1.60618197917938],[-0.951895177364349,-0.0920501872897148,-1.73237764835358],[-0.525528192520142,-0.0881275683641434,-1.6858811378479],[-0.611304461956024,-0.152311503887177,0.0110319619998336],[-1.52100038528442,0.159345984458923,0.211730241775513],[-2.38042449951172,0.0749687328934669,0.529054462909698],[1.74522590637207,-0.713764309883118,0.370351761579514],[1.05002510547638,-0.65961354970932,-0.309255033731461],[-0.392372220754623,-0.234814867377281,-0.350129276514053],[1.28275799751282,-0.686908841133118,-0.80171263217926],[0.590707361698151,-0.589166402816772,0.899566769599915],[1.64012551307678,-0.828158736228943,0.0488075241446495],[1.07921922206879,-0.663177907466888,-0.163895577192307],[-0.400218337774277,-0.377271980047226,0.0921517312526703],[1.73639786243439,-0.793743371963501,1.07867431640625],[-0.375043749809265,-0.207419037818909,-0.847505509853363],[0.787424623966217,-0.6565260887146,0.397324681282043],[0.922137200832367,-0.598898410797119,-0.371407985687256],[0.504862248897552,-0.598376154899597,0.452151596546173],[0.501449882984161,-0.551205158233643,1.03882050514221],[2.5537257194519,-0.770110368728638,0.047467116266489],[1.40020740032196,-0.372950464487076,0.453069984912872],[1.5286910533905,-0.516060650348663,-0.0504651628434658],[0.93128764629364,-0.352173179388046,0.508191108703613],[1.74106419086456,-0.517787516117096,-0.279484629631042],[1.51382517814636,-0.485222160816193,0.562565267086029],[-2.62832570075989,0.74480003118515,0.0692759677767754],[-2.85583138465881,0.743003904819489,0.0595913417637348],[-2.2902843952179,0.576608717441559,-0.293020367622375],[-3.12253189086914,0.771693587303162,0.043972983956337],[-0.416789948940277,0.138939127326012,-0.176813080906868],[-0.72570
5325603485,0.227116927504539,0.0736720785498619],[0.331410080194473,0.0427377112209797,-0.811209082603455],[-0.0108255511149764,0.0973969250917435,-0.875371754169464],[2.81594848632812,-0.590079724788666,-1.67275106906891],[2.37198686599731,-0.63478946685791,-1.80779695510864],[1.81935465335846,-0.547305285930634,1.02402627468109],[2.6057026386261,-0.522914171218872,0.0899576917290688],[-0.485054552555084,0.190710753202438,-1.12332630157471],[-0.0696577876806259,0.103392191231251,-2.35368323326111],[-0.925948858261108,0.218413770198822,-0.664997518062592],[-0.459451913833618,0.0488919541239738,-1.14289522171021],[1.65322172641754,-0.544351398944855,1.72219896316528],[2.25620675086975,-0.469508230686188,0.203829482197762],[1.80562508106232,-0.498554229736328,0.223190367221832],[1.09666264057159,-0.35529550909996,0.892665266990662],[0.408276945352554,-0.328851163387299,-0.0498328283429146],[-2.86634469032288,0.596899747848511,-0.0227691140025854],[1.13383054733276,-0.387507915496826,-2.18145775794983],[-0.216612592339516,-0.122141748666763,-0.108152560889721],[-0.83509773015976,0.0912704914808273,-0.826997637748718],[-3.47669649124146,0.93037223815918,0.407299637794495],[-3.12267208099365,0.674432337284088,0.275685489177704],[-2.36101341247559,0.501966595649719,-0.655676782131195],[-2.69355273246765,0.545944035053253,-0.221670135855675],[2.38973355293274,-0.207075104117393,-0.327387481927872],[1.69236755371094,-0.159383475780487,0.825905382633209],[2.92463684082031,-0.20120632648468,-0.165826484560966],[1.24354672431946,-0.187613174319267,0.463806748390198],[2.39630794525146,-0.19422647356987,0.342759817838669],[-2.50928092002869,0.915469467639923,0.372220486402512],[-1.38223052024841,0.778338849544525,-1.9185928106308],[-2.66745042800903,0.950634896755219,-0.179221227765083],[-2.4141845703125,0.91456013917923,-0.685424745082855],[-0.833070278167725,0.405297756195068,-0.711968660354614],[-0.449231147766113,0.414556503295898,-0.747948527336121],[-0.463524580001831,0.40
560907125473,-1.13934421539307],[-0.384189456701279,0.399965643882751,-1.57540786266327],[-3.6702868938446,1.21045506000519,0.728805482387543],[-3.32303977012634,1.07357883453369,0.636915445327759],[-3.74322891235352,1.244349360466,0.366106003522873],[-2.83370137214661,1.07917428016663,-0.499167531728745],[1.79458212852478,-0.270544618368149,0.964580118656158],[0.998599588871002,-0.0667160600423813,0.0628614649176598],[1.89816725254059,-0.280044347047806,-0.451196849346161],[1.05578553676605,-0.052497323602438,0.1706532984972],[2.23582291603088,-0.239260986447334,0.101358287036419],[2.04386830329895,-0.106369897723198,0.63676530122757],[2.14069390296936,-0.261312872171402,0.319203943014145],[1.70792722702026,-0.163670808076859,1.06226706504822],[0.113367348909378,0.134785547852516,0.323094755411148],[0.464767932891846,-0.178119093179703,1.1713935136795],[0.890703499317169,-0.335206687450409,1.02661514282227],[3.63531279563904,0.265626519918442,-0.967379033565521],[2.40468883514404,0.102851569652557,1.23270046710968],[2.89833831787109,-0.0137436995282769,-0.597708940505981],[2.88451838493347,0.237526074051857,-0.0761578306555748],[2.70584535598755,0.0724450275301933,0.565369606018066],[-1.1946474313736,0.927115559577942,-0.453713923692703],[-2.20549750328064,1.18395590782166,0.230918169021606],[-2.04894399642944,1.13272321224213,0.451364547014236],[-0.269348829984665,0.650346219539642,-1.56116497516632],[0.115949794650078,0.511220633983612,-1.08537268638611],[0.0552713684737682,0.436935752630234,-0.300473392009735],[1.08442425727844,0.173388376832008,0.304069012403488],[-0.278847724199295,0.640650033950806,-0.852891623973846],[-0.14658822119236,0.543237745761871,-0.868424415588379],[-0.473483741283417,0.655025839805603,-0.363739460706711],[0.127634152770042,0.478329211473465,-0.606247127056122],[-0.423331141471863,0.658930122852325,-1.41887617111206],[-0.963451683521271,0.678259134292603,-0.18897944688797],[-2.06618165969849,1.08532631397247,0.359598845243454],[-1.56
001687049866,0.734336137771606,0.561175167560577],[-2.1433699131012,0.984590590000153,1.01887702941895],[-2.41895580291748,1.22361600399017,-0.10573860257864],[2.11536955833435,0.034282598644495,0.245125666260719],[1.10864329338074,0.202837154269218,0.860335469245911],[1.3112770318985,0.10342963039875,0.796262204647064],[1.81257855892181,0.110583357512951,0.922145307064056],[0.866073369979858,0.0198787841945887,0.469772964715958],[0.857187926769257,0.191609233617783,-0.128001689910889],[1.1673184633255,0.181415677070618,-0.518775463104248],[1.11241388320923,0.0432789847254753,0.58862030506134],[0.465790063142776,0.208165854215622,-0.0585965402424335],[-0.708052575588226,0.428825348615646,0.461341202259064],[0.68166708946228,0.126797333359718,0.204449251294136],[-0.741165637969971,0.491207629442215,-0.329870849847794],[2.11105608940125,0.0255490988492966,0.888118326663971],[2.10994029045105,0.0154944658279419,0.202769681811333],[-0.450617700815201,0.913258731365204,-0.106484100222588],[0.332823127508163,0.673830986022949,-1.08877992630005],[1.11723756790161,0.386323690414429,-0.44856521487236],[0.032979566603899,0.823394656181335,-1.17362284660339],[-0.349127650260925,0.895388960838318,-0.578369855880737],[-1.92643880844116,1.34498381614685,-0.616165637969971],[-2.02301597595215,1.32812821865082,0.0870473235845566],[-2.51177048683167,1.46471786499023,0.0334950685501099],[-1.98925876617432,1.40811216831207,-0.499030202627182],[-2.69587063789368,1.61406862735748,-0.559521436691284],[-2.54138469696045,1.47049236297607,-0.370182007551193],[-1.53015959262848,1.265545129776,-0.323371678590775],[-2.59182858467102,1.55632388591766,0.21331325173378],[2.13250875473022,0.268170028924942,1.22395598888397],[2.42249274253845,0.318183958530426,0.868075370788574],[2.23674559593201,0.40062153339386,1.2539656162262],[1.41293895244598,0.418580710887909,0.518694818019867],[0.953025639057159,0.796800911426544,-1.63236236572266],[-1.57277369499207,1.58123171329498,-1.150710105896],[2.1780
8365821838,0.54060310125351,-2.88896942138672],[-0.0435368716716766,1.23334658145905,-2.58725810050964],[1.9785168170929,0.429222464561462,1.44965207576752],[2.19374489784241,0.419648289680481,0.925339043140411],[2.70040059089661,0.257327139377594,-0.516572177410126],[2.3940863609314,0.462692201137543,1.09788620471954],[1.33429896831512,0.516397476196289,0.243179187178612],[0.0531457141041756,0.845101594924927,1.7285373210907],[0.0651683211326599,0.815850853919983,1.1104234457016],[1.36087572574615,0.628420650959015,1.35493397712708],[2.55819940567017,0.85216611623764,1.26600623130798],[3.10662364959717,0.65423858165741,-0.178299382328987],[2.23892378807068,0.581498026847839,0.678542673587799],[2.74539923667908,0.655854046344757,0.577490210533142],[1.4239319562912,0.753597378730774,0.0756099820137024],[1.48104476928711,0.734566509723663,-0.555873453617096],[1.44852924346924,0.732450664043427,-1.31763088703156],[0.0629764199256897,1.05855309963226,-1.39265620708466],[2.26520395278931,0.67299872636795,0.668027341365814],[1.46613740921021,0.783235192298889,0.464294731616974],[2.05376744270325,0.715866088867188,-0.131634086370468],[1.95636808872223,0.88053685426712,0.976706266403198],[2.0464174747467,0.651567697525024,0.744299292564392],[3.33543539047241,0.925144672393799,0.371995031833649],[0.957220852375031,0.830361723899841,0.773960947990417],[3.17792463302612,0.766491055488586,-0.23912014067173],[3.79303979873657,0.817247629165649,-1.00487554073334],[3.83179903030396,0.854479432106018,-1.7633900642395],[2.20270204544067,1.05322241783142,-0.991101682186127],[2.06759715080261,0.884931147098541,-1.85712778568268],[2.89428162574768,0.826799094676971,1.72218441963196],[2.60867619514465,0.618459820747375,-0.0953152477741241],[2.35979557037354,0.415106415748596,0.730256021022797],[0.0049392944201827,1.30745565891266,1.81213712692261],[1.38367700576782,0.347695618867874,1.51626420021057],[1.86413407325745,0.841073095798492,0.836453258991241],[2.26736831665039,0.650562584400
177,0.147665530443192],[1.58600115776062,0.890777587890625,0.368391692638397],[1.49136328697205,0.939045488834381,0.059490405023098],[1.14294576644897,0.945273995399475,0.71606707572937],[0.0331991203129292,1.21861708164215,1.08886384963989],[1.49086737632751,0.944253146648407,1.40142428874969],[3.19055223464966,0.854468524456024,0.549984693527222],[2.98069930076599,0.910176634788513,0.689320147037506],[2.87128734588623,0.759726941585541,0.682474851608276],[2.6128077507019,0.805012226104736,-0.0438733287155628],[3.13284945487976,0.876556515693665,-0.375115096569061],[2.93168330192566,0.920495271682739,0.286485016345978],[2.57137274742126,0.827956140041351,0.634442448616028],[2.37376236915588,0.920524418354034,0.900164723396301],[2.58231949806213,0.856689751148224,0.540130853652954],[2.6541736125946,0.814721465110779,-1.17414057254791],[2.10611367225647,0.895007073879242,1.12741661071777],[2.13353562355042,0.923996984958649,1.08171617984772],[2.24271512031555,0.922249376773834,0.215298846364021],[1.61276996135712,1.08496737480164,0.892892479896545],[2.17963814735413,0.991119921207428,-0.405311524868011],[1.77586889266968,1.0999618768692,-1.38771426677704],[1.39963567256927,1.38316512107849,-1.20487058162689],[-0.000582694308832288,1.31990766525269,1.1258373260498],[0.0938129797577858,1.26396143436432,0.726708710193634],[-0.266738951206207,1.46386992931366,-0.263422161340714],[-0.794305980205536,2.07586073875427,-1.56120562553406],[0.297165811061859,1.20625329017639,-0.708729565143585],[-0.154116898775101,1.28221333026886,-0.801354050636292],[2.0862078666687,1.14869272708893,-0.85563462972641],[1.91487061977386,1.13398694992065,-0.576171219348907],[2.32940864562988,1.25613212585449,-0.0715694054961205],[1.93571138381958,1.22217702865601,0.340661823749542],[1.75404191017151,1.19390368461609,0.329306334257126],[1.62464308738708,1.2322803735733,-0.441344499588013],[1.26617383956909,1.17670798301697,-0.0450324974954128],[2.53740620613098,1.16309559345245,0.948479771614075
],[2.98929309844971,1.15674304962158,0.014771050773561],[2.64808225631714,0.984768211841583,0.0291957072913647],[2.62761735916138,1.23158621788025,1.11304175853729],[2.7459123134613,1.17547202110291,0.242832571268082],[2.15173125267029,1.26958668231964,1.19634401798248],[2.3259265422821,1.23029339313507,1.13686966896057],[2.5030677318573,1.17247867584229,0.257847398519516],[2.75008368492126,1.17323482036591,1.09896659851074],[2.51630878448486,1.01252293586731,0.674579083919525],[2.85937929153442,1.17813766002655,0.700660407543182],[0.424370437860489,1.56767439842224,-0.161196708679199],[1.05766975879669,2.01329588890076,-0.137671336531639],[1.2428389787674,1.18800508975983,0.711766541004181],[-0.0817797482013702,1.55573451519012,0.286072462797165],[1.45323300361633,1.35780036449432,1.1069073677063],[1.87687039375305,1.34591937065125,1.58176231384277],[1.44755399227142,1.2909187078476,-0.284574687480927],[1.43905866146088,1.22507536411285,0.310798048973083],[4.10722541809082,1.32868552207947,-1.937251329422],[1.56481635570526,1.22365701198578,1.92763459682465],[2.03923630714417,1.15036725997925,-0.585685014724731],[2.19971966743469,1.25786578655243,-0.762552499771118]],"colors":[[0,0,0,1]],"radii":[[0.108964458107948]],"centers":[[-2.63168549537659,-0.927853226661682,0.533996343612671],[-3.48934125900269,-0.80444473028183,0.648665547370911],[-2.96662354469299,-0.88006180524826,0.957518577575684],[-2.90648293495178,-0.960493505001068,0.582209050655365],[-2.90011978149414,-0.951573312282562,1.05348980426788],[-4.64668846130371,-0.420008331537247,0.993466973304749],[-5.15152215957642,-0.348722904920578,1.35310041904449],[-5.07032632827759,-0.393771827220917,1.52002942562103],[-5.14189100265503,-0.319673269987106,1.02730929851532],[-4.31437921524048,-0.631028056144714,1.64088249206543],[-3.77117085456848,-0.767089307308197,1.16702282428741],[-3.76488995552063,-0.87261825799942,1.76384377479553],[-3.7693657875061,-0.728443801403046,1.1600946187973],[-4.48918867111206,-0.6
7385071516037,1.46255922317505],[0.692081987857819,-1.95337212085724,0.486309975385666],[-0.419616967439651,-1.50638771057129,-0.135995075106621],[-0.620969474315643,-1.62180304527283,-0.240779086947441],[-0.197437986731529,-1.6179096698761,-0.296885430812836],[1.05397343635559,-1.97972762584686,0.810216069221497],[2.22771549224854,-2.19242262840271,-1.25477313995361],[0.955205202102661,-1.87673377990723,-0.420131653547287],[0.691713809967041,-1.95818150043488,0.613064348697662],[1.03671586513519,-1.94791781902313,-0.282570987939835],[0.372663259506226,-1.87692475318909,1.4782487154007],[-0.381259918212891,-1.58989822864532,0.0300822649151087],[-4.52591753005981,-0.571372628211975,-0.414466768503189],[-3.92395663261414,-0.761685788631439,-0.675891876220703],[-4.18574237823486,-0.688712477684021,-0.125654920935631],[-3.69787001609802,-0.723528385162354,-1.98583388328552],[1.11627697944641,-1.73278415203094,0.82032710313797],[1.00686538219452,-1.59483325481415,0.453524470329285],[0.772323250770569,-1.71550035476685,0.899979650974274],[-0.865907609462738,-1.3155609369278,0.638873636722565],[-1.17938148975372,-1.18941235542297,-0.502398014068604],[-1.12238574028015,-1.16000127792358,-0.479694753885269],[-0.875388026237488,-1.14073693752289,-0.457908809185028],[-0.977548241615295,-1.17538011074066,-0.416156798601151],[-3.67794156074524,-0.448768615722656,0.296194851398468],[-4.1688380241394,-0.271730482578278,0.37135523557663],[-3.36851859092712,-0.491958498954773,-0.22547972202301],[-3.22354888916016,-0.569558441638947,-0.02631875872612],[-4.49538326263428,-0.213065579533577,0.184181421995163],[-4.25075435638428,-0.235965877771378,0.0627945885062218],[-4.49773502349854,-0.120676450431347,-0.0432354658842087],[-1.24167692661285,-1.18959701061249,0.356561720371246],[1.18976628780365,-1.7643940448761,-1.00364971160889],[-1.04908812046051,-1.11818861961365,-0.236609160900116],[-0.950209081172943,-1.20781540870667,-0.114084333181381],[0.73614376783371,-1.77501654624939,0.788
366496562958],[1.01915299892426,-1.66970443725586,1.00805795192719],[2.08450126647949,-1.75164699554443,-0.74367743730545],[1.46972906589508,-1.7125928401947,0.896048069000244],[2.31834435462952,-1.82837176322937,-0.465355575084686],[2.44907069206238,-1.75162220001221,0.0589442849159241],[2.03283214569092,-1.88445854187012,-0.663971126079559],[1.99250602722168,-1.87092959880829,-1.17211544513702],[0.914143025875092,-1.48561251163483,0.375189542770386],[1.42250156402588,-1.56305205821991,-0.0794070810079575],[2.21696925163269,-1.65704226493835,-2.40573406219482],[1.00395834445953,-1.53183257579803,-1.14199542999268],[0.951667547225952,-1.58578443527222,-0.0788717344403267],[-3.69833278656006,-0.211122557520866,0.252222120761871],[-4.01639270782471,-0.0469466783106327,0.245247930288315],[-3.07761287689209,-0.287072092294693,-0.157827541232109],[-3.34566164016724,-0.250364243984222,-0.0453445576131344],[-2.89900040626526,-0.375634849071503,0.719595551490784],[-4.87503957748413,0.0647720545530319,0.507240772247314],[-3.54472661018372,-0.1767508238554,-0.351667851209641],[-3.62823724746704,-0.20433583855629,-0.347628384828568],[-4.19857549667358,-0.0316951237618923,0.104493997991085],[0.825591564178467,-1.78533434867859,0.97272127866745],[-3.00499486923218,-0.372950911521912,0.258377522230148],[-2.8451452255249,-0.414354473352432,-0.451231360435486],[-2.82348942756653,-0.354036778211594,-1.11365604400635],[-3.0487174987793,-0.33016762137413,-0.333827137947083],[-0.0340252369642258,-1.41733860969543,0.355616331100464],[1.13260114192963,-1.51156878471375,-0.667376160621643],[0.891631960868835,-1.39542281627655,-1.28869962692261],[1.67305302619934,-1.54937016963959,-0.437780380249023],[0.868664741516113,-1.5133284330368,0.0610466636717319],[1.36679935455322,-1.41501688957214,0.0201793219894171],[0.598041415214539,-1.43277370929718,0.594023466110229],[1.34842538833618,-1.46761333942413,0.654217779636383],[1.39973640441895,-1.49882984161377,0.19259886443615],[-3.5577926635742
2,0.00941522140055895,0.039181400090456],[-2.98972725868225,-0.208162873983383,0.630841195583344],[-3.17365741729736,-0.086647242307663,-0.0566643103957176],[-2.7092821598053,-0.15011228621006,-0.516207814216614],[-2.94406628608704,-0.132295787334442,0.292448997497559],[-4.75574398040771,0.399175107479095,0.240632310509682],[-3.76899123191833,0.13903796672821,0.0865144357085228],[-3.50335621833801,0.0430567562580109,-0.122209750115871],[-3.01277375221252,-0.0421661771833897,-0.537622809410095],[-4.88245439529419,0.426137953996658,0.570420026779175],[-5.21013593673706,0.504780888557434,0.496957212686539],[-3.6693480014801,-0.0420929752290249,0.76787656545639],[-0.694477140903473,-0.729185223579407,-0.63669341802597],[-0.765763282775879,-0.712412178516388,-1.28533864021301],[-0.634110867977142,-0.773483097553253,-0.446338295936584],[-0.56155002117157,-0.750194489955902,-0.703096628189087],[-0.164672270417213,-0.721550107002258,-0.257683575153351],[2.41020178794861,-1.42243719100952,-1.42371928691864],[-3.92429304122925,0.221986219286919,-0.800241589546204],[-4.17056322097778,0.265844792127609,-0.177070364356041],[-3.81394243240356,0.162116065621376,-0.172528013586998],[-3.90985369682312,0.100800178945065,0.0604686848819256],[-0.661333918571472,-0.812219500541687,-0.0718340873718262],[1.27587962150574,-1.39629030227661,-0.895649671554565],[1.31840848922729,-1.30011260509491,-1.17600512504578],[0.959955632686615,-1.2782940864563,-0.0368509739637375],[1.01419341564178,-1.63487219810486,0.985979914665222],[1.08852136135101,-1.37687182426453,-0.813974976539612],[-0.218060702085495,-0.931284010410309,0.558543682098389],[1.2012619972229,-1.22605311870575,0.447774171829224],[-3.1174578666687,-0.00880237855017185,-0.0213093366473913],[-4.66223335266113,0.352794885635376,1.40800082683563],[2.53480505943298,-1.40446949005127,-0.77115523815155],[1.2423278093338,-1.30953097343445,0.333263397216797],[0.509156823158264,-1.26756453514099,0.621764063835144],[0.214239209890366,-1.16362
464427948,0.0960156247019768],[-2.91470742225647,-0.228489682078362,0.901013374328613],[0.437750965356827,-1.08682870864868,0.80527925491333],[-0.652873873710632,-0.832958221435547,0.643759906291962],[-3.70873808860779,-0.142764806747437,0.784628510475159],[-0.300282269716263,-0.502968847751617,-0.57269674539566],[-0.49934908747673,-0.511687517166138,-0.38896968960762],[-0.886008381843567,-0.474090695381165,-1.00324058532715],[2.36418771743774,-1.02250528335571,-0.491409748792648],[1.28755617141724,-0.913217902183533,0.00653791753575206],[2.73208785057068,-1.04944062232971,-1.07013690471649],[1.22490239143372,-0.896950006484985,-0.257192581892014],[-1.05191576480865,-0.329893708229065,-1.11493527889252],[-1.01868414878845,-0.335898071527481,-1.35388255119324],[-0.872001469135284,-0.352103292942047,-0.786509931087494],[-2.67780327796936,0.183905869722366,-0.297244161367416],[-3.35795855522156,0.355644524097443,-0.741319298744202],[-3.16097640991211,0.265496283769608,-0.2752286195755],[-2.81555676460266,0.257331788539886,-1.17229819297791],[-2.79952073097229,0.183410376310349,-0.844387412071228],[1.61917781829834,-0.929834604263306,0.217048853635788],[1.74136853218079,-1.14267480373383,0.472873121500015],[1.27915692329407,-0.995623409748077,0.718361556529999],[2.42982339859009,-1.13419449329376,0.352670729160309],[2.43886137008667,-0.988389015197754,-0.505967557430267],[1.52815222740173,-1.0076402425766,0.834641873836517],[1.43566429615021,-1.1206259727478,0.384551882743835],[1.2088565826416,-0.982539117336273,0.8663729429245],[0.833578586578369,-0.918156325817108,0.490828841924667],[1.12816905975342,-0.921902000904083,0.420566916465759],[2.03001666069031,-1.00169885158539,0.46372389793396],[-0.530352115631104,-0.191865235567093,-0.512185513973236],[-0.888820707798004,-0.10154115408659,-0.588408589363098],[-0.149865210056305,-0.27387061715126,-2.44295191764832],[-0.171201601624489,-0.342555552721024,-1.86819338798523],[-3.86212086677551,0.811911463737488,0.38935613632
2021],[-3.06409358978271,0.577251851558685,-0.441252797842026],[-2.91337203979492,0.573738694190979,-0.536330163478851],[-3.30957078933716,0.617790579795837,-0.370580762624741],[-0.618859767913818,-0.0438417606055737,-2.34571003913879],[-0.946181178092957,-0.0449847504496574,-1.60618197917938],[-0.951895177364349,-0.0920501872897148,-1.73237764835358],[-0.525528192520142,-0.0881275683641434,-1.6858811378479],[-0.611304461956024,-0.152311503887177,0.0110319619998336],[-1.52100038528442,0.159345984458923,0.211730241775513],[-2.38042449951172,0.0749687328934669,0.529054462909698],[1.74522590637207,-0.713764309883118,0.370351761579514],[1.05002510547638,-0.65961354970932,-0.309255033731461],[-0.392372220754623,-0.234814867377281,-0.350129276514053],[1.28275799751282,-0.686908841133118,-0.80171263217926],[0.590707361698151,-0.589166402816772,0.899566769599915],[1.64012551307678,-0.828158736228943,0.0488075241446495],[1.07921922206879,-0.663177907466888,-0.163895577192307],[-0.400218337774277,-0.377271980047226,0.0921517312526703],[1.73639786243439,-0.793743371963501,1.07867431640625],[-0.375043749809265,-0.207419037818909,-0.847505509853363],[0.787424623966217,-0.6565260887146,0.397324681282043],[0.922137200832367,-0.598898410797119,-0.371407985687256],[0.504862248897552,-0.598376154899597,0.452151596546173],[0.501449882984161,-0.551205158233643,1.03882050514221],[2.5537257194519,-0.770110368728638,0.047467116266489],[1.40020740032196,-0.372950464487076,0.453069984912872],[1.5286910533905,-0.516060650348663,-0.0504651628434658],[0.93128764629364,-0.352173179388046,0.508191108703613],[1.74106419086456,-0.517787516117096,-0.279484629631042],[1.51382517814636,-0.485222160816193,0.562565267086029],[-2.62832570075989,0.74480003118515,0.0692759677767754],[-2.85583138465881,0.743003904819489,0.0595913417637348],[-2.2902843952179,0.576608717441559,-0.293020367622375],[-3.12253189086914,0.771693587303162,0.043972983956337],[-0.416789948940277,0.138939127326012,-0.176813080906868]
,[-0.725705325603485,0.227116927504539,0.0736720785498619],[0.331410080194473,0.0427377112209797,-0.811209082603455],[-0.0108255511149764,0.0973969250917435,-0.875371754169464],[2.81594848632812,-0.590079724788666,-1.67275106906891],[2.37198686599731,-0.63478946685791,-1.80779695510864],[1.81935465335846,-0.547305285930634,1.02402627468109],[2.6057026386261,-0.522914171218872,0.0899576917290688],[-0.485054552555084,0.190710753202438,-1.12332630157471],[-0.0696577876806259,0.103392191231251,-2.35368323326111],[-0.925948858261108,0.218413770198822,-0.664997518062592],[-0.459451913833618,0.0488919541239738,-1.14289522171021],[1.65322172641754,-0.544351398944855,1.72219896316528],[2.25620675086975,-0.469508230686188,0.203829482197762],[1.80562508106232,-0.498554229736328,0.223190367221832],[1.09666264057159,-0.35529550909996,0.892665266990662],[0.408276945352554,-0.328851163387299,-0.0498328283429146],[-2.86634469032288,0.596899747848511,-0.0227691140025854],[1.13383054733276,-0.387507915496826,-2.18145775794983],[-0.216612592339516,-0.122141748666763,-0.108152560889721],[-0.83509773015976,0.0912704914808273,-0.826997637748718],[-3.47669649124146,0.93037223815918,0.407299637794495],[-3.12267208099365,0.674432337284088,0.275685489177704],[-2.36101341247559,0.501966595649719,-0.655676782131195],[-2.69355273246765,0.545944035053253,-0.221670135855675],[2.38973355293274,-0.207075104117393,-0.327387481927872],[1.69236755371094,-0.159383475780487,0.825905382633209],[2.92463684082031,-0.20120632648468,-0.165826484560966],[1.24354672431946,-0.187613174319267,0.463806748390198],[2.39630794525146,-0.19422647356987,0.342759817838669],[-2.50928092002869,0.915469467639923,0.372220486402512],[-1.38223052024841,0.778338849544525,-1.9185928106308],[-2.66745042800903,0.950634896755219,-0.179221227765083],[-2.4141845703125,0.91456013917923,-0.685424745082855],[-0.833070278167725,0.405297756195068,-0.711968660354614],[-0.449231147766113,0.414556503295898,-0.747948527336121],[-0.4635245800
01831,0.40560907125473,-1.13934421539307],[-0.384189456701279,0.399965643882751,-1.57540786266327],[-3.6702868938446,1.21045506000519,0.728805482387543],[-3.32303977012634,1.07357883453369,0.636915445327759],[-3.74322891235352,1.244349360466,0.366106003522873],[-2.83370137214661,1.07917428016663,-0.499167531728745],[1.79458212852478,-0.270544618368149,0.964580118656158],[0.998599588871002,-0.0667160600423813,0.0628614649176598],[1.89816725254059,-0.280044347047806,-0.451196849346161],[1.05578553676605,-0.052497323602438,0.1706532984972],[2.23582291603088,-0.239260986447334,0.101358287036419],[2.04386830329895,-0.106369897723198,0.63676530122757],[2.14069390296936,-0.261312872171402,0.319203943014145],[1.70792722702026,-0.163670808076859,1.06226706504822],[0.113367348909378,0.134785547852516,0.323094755411148],[0.464767932891846,-0.178119093179703,1.1713935136795],[0.890703499317169,-0.335206687450409,1.02661514282227],[3.63531279563904,0.265626519918442,-0.967379033565521],[2.40468883514404,0.102851569652557,1.23270046710968],[2.89833831787109,-0.0137436995282769,-0.597708940505981],[2.88451838493347,0.237526074051857,-0.0761578306555748],[2.70584535598755,0.0724450275301933,0.565369606018066],[-1.1946474313736,0.927115559577942,-0.453713923692703],[-2.20549750328064,1.18395590782166,0.230918169021606],[-2.04894399642944,1.13272321224213,0.451364547014236],[-0.269348829984665,0.650346219539642,-1.56116497516632],[0.115949794650078,0.511220633983612,-1.08537268638611],[0.0552713684737682,0.436935752630234,-0.300473392009735],[1.08442425727844,0.173388376832008,0.304069012403488],[-0.278847724199295,0.640650033950806,-0.852891623973846],[-0.14658822119236,0.543237745761871,-0.868424415588379],[-0.473483741283417,0.655025839805603,-0.363739460706711],[0.127634152770042,0.478329211473465,-0.606247127056122],[-0.423331141471863,0.658930122852325,-1.41887617111206],[-0.963451683521271,0.678259134292603,-0.18897944688797],[-2.06618165969849,1.08532631397247,0.3595988452434
54],[-1.56001687049866,0.734336137771606,0.561175167560577],[-2.1433699131012,0.984590590000153,1.01887702941895],[-2.41895580291748,1.22361600399017,-0.10573860257864],[2.11536955833435,0.034282598644495,0.245125666260719],[1.10864329338074,0.202837154269218,0.860335469245911],[1.3112770318985,0.10342963039875,0.796262204647064],[1.81257855892181,0.110583357512951,0.922145307064056],[0.866073369979858,0.0198787841945887,0.469772964715958],[0.857187926769257,0.191609233617783,-0.128001689910889],[1.1673184633255,0.181415677070618,-0.518775463104248],[1.11241388320923,0.0432789847254753,0.58862030506134],[0.465790063142776,0.208165854215622,-0.0585965402424335],[-0.708052575588226,0.428825348615646,0.461341202259064],[0.68166708946228,0.126797333359718,0.204449251294136],[-0.741165637969971,0.491207629442215,-0.329870849847794],[2.11105608940125,0.0255490988492966,0.888118326663971],[2.10994029045105,0.0154944658279419,0.202769681811333],[-0.450617700815201,0.913258731365204,-0.106484100222588],[0.332823127508163,0.673830986022949,-1.08877992630005],[1.11723756790161,0.386323690414429,-0.44856521487236],[0.032979566603899,0.823394656181335,-1.17362284660339],[-0.349127650260925,0.895388960838318,-0.578369855880737],[-1.92643880844116,1.34498381614685,-0.616165637969971],[-2.02301597595215,1.32812821865082,0.0870473235845566],[-2.51177048683167,1.46471786499023,0.0334950685501099],[-1.98925876617432,1.40811216831207,-0.499030202627182],[-2.69587063789368,1.61406862735748,-0.559521436691284],[-2.54138469696045,1.47049236297607,-0.370182007551193],[-1.53015959262848,1.265545129776,-0.323371678590775],[-2.59182858467102,1.55632388591766,0.21331325173378],[2.13250875473022,0.268170028924942,1.22395598888397],[2.42249274253845,0.318183958530426,0.868075370788574],[2.23674559593201,0.40062153339386,1.2539656162262],[1.41293895244598,0.418580710887909,0.518694818019867],[0.953025639057159,0.796800911426544,-1.63236236572266],[-1.57277369499207,1.58123171329498,-1.15071010589
6],[2.17808365821838,0.54060310125351,-2.88896942138672],[-0.0435368716716766,1.23334658145905,-2.58725810050964],[1.9785168170929,0.429222464561462,1.44965207576752],[2.19374489784241,0.419648289680481,0.925339043140411],[2.70040059089661,0.257327139377594,-0.516572177410126],[2.3940863609314,0.462692201137543,1.09788620471954],[1.33429896831512,0.516397476196289,0.243179187178612],[0.0531457141041756,0.845101594924927,1.7285373210907],[0.0651683211326599,0.815850853919983,1.1104234457016],[1.36087572574615,0.628420650959015,1.35493397712708],[2.55819940567017,0.85216611623764,1.26600623130798],[3.10662364959717,0.65423858165741,-0.178299382328987],[2.23892378807068,0.581498026847839,0.678542673587799],[2.74539923667908,0.655854046344757,0.577490210533142],[1.4239319562912,0.753597378730774,0.0756099820137024],[1.48104476928711,0.734566509723663,-0.555873453617096],[1.44852924346924,0.732450664043427,-1.31763088703156],[0.0629764199256897,1.05855309963226,-1.39265620708466],[2.26520395278931,0.67299872636795,0.668027341365814],[1.46613740921021,0.783235192298889,0.464294731616974],[2.05376744270325,0.715866088867188,-0.131634086370468],[1.95636808872223,0.88053685426712,0.976706266403198],[2.0464174747467,0.651567697525024,0.744299292564392],[3.33543539047241,0.925144672393799,0.371995031833649],[0.957220852375031,0.830361723899841,0.773960947990417],[3.17792463302612,0.766491055488586,-0.23912014067173],[3.79303979873657,0.817247629165649,-1.00487554073334],[3.83179903030396,0.854479432106018,-1.7633900642395],[2.20270204544067,1.05322241783142,-0.991101682186127],[2.06759715080261,0.884931147098541,-1.85712778568268],[2.89428162574768,0.826799094676971,1.72218441963196],[2.60867619514465,0.618459820747375,-0.0953152477741241],[2.35979557037354,0.415106415748596,0.730256021022797],[0.0049392944201827,1.30745565891266,1.81213712692261],[1.38367700576782,0.347695618867874,1.51626420021057],[1.86413407325745,0.841073095798492,0.836453258991241],[2.26736831665039,0.65
0562584400177,0.147665530443192],[1.58600115776062,0.890777587890625,0.368391692638397],[1.49136328697205,0.939045488834381,0.059490405023098],[1.14294576644897,0.945273995399475,0.71606707572937],[0.0331991203129292,1.21861708164215,1.08886384963989],[1.49086737632751,0.944253146648407,1.40142428874969],[3.19055223464966,0.854468524456024,0.549984693527222],[2.98069930076599,0.910176634788513,0.689320147037506],[2.87128734588623,0.759726941585541,0.682474851608276],[2.6128077507019,0.805012226104736,-0.0438733287155628],[3.13284945487976,0.876556515693665,-0.375115096569061],[2.93168330192566,0.920495271682739,0.286485016345978],[2.57137274742126,0.827956140041351,0.634442448616028],[2.37376236915588,0.920524418354034,0.900164723396301],[2.58231949806213,0.856689751148224,0.540130853652954],[2.6541736125946,0.814721465110779,-1.17414057254791],[2.10611367225647,0.895007073879242,1.12741661071777],[2.13353562355042,0.923996984958649,1.08171617984772],[2.24271512031555,0.922249376773834,0.215298846364021],[1.61276996135712,1.08496737480164,0.892892479896545],[2.17963814735413,0.991119921207428,-0.405311524868011],[1.77586889266968,1.0999618768692,-1.38771426677704],[1.39963567256927,1.38316512107849,-1.20487058162689],[-0.000582694308832288,1.31990766525269,1.1258373260498],[0.0938129797577858,1.26396143436432,0.726708710193634],[-0.266738951206207,1.46386992931366,-0.263422161340714],[-0.794305980205536,2.07586073875427,-1.56120562553406],[0.297165811061859,1.20625329017639,-0.708729565143585],[-0.154116898775101,1.28221333026886,-0.801354050636292],[2.0862078666687,1.14869272708893,-0.85563462972641],[1.91487061977386,1.13398694992065,-0.576171219348907],[2.32940864562988,1.25613212585449,-0.0715694054961205],[1.93571138381958,1.22217702865601,0.340661823749542],[1.75404191017151,1.19390368461609,0.329306334257126],[1.62464308738708,1.2322803735733,-0.441344499588013],[1.26617383956909,1.17670798301697,-0.0450324974954128],[2.53740620613098,1.16309559345245,0.94847
9771614075],[2.98929309844971,1.15674304962158,0.014771050773561],[2.64808225631714,0.984768211841583,0.0291957072913647],[2.62761735916138,1.23158621788025,1.11304175853729],[2.7459123134613,1.17547202110291,0.242832571268082],[2.15173125267029,1.26958668231964,1.19634401798248],[2.3259265422821,1.23029339313507,1.13686966896057],[2.5030677318573,1.17247867584229,0.257847398519516],[2.75008368492126,1.17323482036591,1.09896659851074],[2.51630878448486,1.01252293586731,0.674579083919525],[2.85937929153442,1.17813766002655,0.700660407543182],[0.424370437860489,1.56767439842224,-0.161196708679199],[1.05766975879669,2.01329588890076,-0.137671336531639],[1.2428389787674,1.18800508975983,0.711766541004181],[-0.0817797482013702,1.55573451519012,0.286072462797165],[1.45323300361633,1.35780036449432,1.1069073677063],[1.87687039375305,1.34591937065125,1.58176231384277],[1.44755399227142,1.2909187078476,-0.284574687480927],[1.43905866146088,1.22507536411285,0.310798048973083],[4.10722541809082,1.32868552207947,-1.937251329422],[1.56481635570526,1.22365701198578,1.92763459682465],[2.03923630714417,1.15036725997925,-0.585685014724731],[2.19971966743469,1.25786578655243,-0.762552499771118]],"ignoreExtent":false,"fastTransparency":true,"flags":32771},"26":{"id":26,"type":"text","material":{"lit":false,"margin":0,"floating":true,"edge":[0,1,1]},"vertices":[["NaN",4,1]],"colors":[[0,0,0,1]],"texts":[["PC1"]],"cex":[[1]],"adj":[[0.5,0.5]],"centers":[["NaN",4,1]],"family":[["sans"]],"font":[[1]],"ignoreExtent":true,"flags":33808},"27":{"id":27,"type":"text","material":{"lit":false,"margin":1,"floating":true,"edge":[1,1,1]},"vertices":[["NaN",4,1]],"colors":[[0,0,0,1]],"texts":[["PC2"]],"cex":[[1]],"adj":[[0.5,0.5]],"centers":[["NaN",4,1]],"family":[["sans"]],"font":[[1]],"ignoreExtent":true,"flags":33808},"28":{"id":28,"type":"text","material":{"lit":false,"margin":2,"floating":true,"edge":[1,1,1]},"vertices":[["NaN",4,1]],"colors":[[0,0,0,1]],"texts":[["PC3"]],"cex":[[1]],"adj":[[0.
5,0.5]],"centers":[["NaN",4,1]],"family":[["sans"]],"font":[[1]],"ignoreExtent":true,"flags":33808},"11":{"id":11,"type":"light","vertices":[[0,0,1]],"colors":[[1,1,1,1],[1,1,1,1],[1,1,1,1]],"viewpoint":true,"finite":false},"12":{"id":12,"type":"background","material":{"lit":false,"back":"lines"},"colors":[[1,1,1,1]],"centers":[[0,0,0]],"sphere":false,"fogtype":"none","fogscale":1,"flags":32768},"25":{"id":25,"type":"bboxdeco","material":{"front":"lines","back":"lines"},"vertices":[[-4,"NA","NA"],[-2,"NA","NA"],[0,"NA","NA"],[2,"NA","NA"],[4,"NA","NA"],["NA",-2,"NA"],["NA",-1,"NA"],["NA",0,"NA"],["NA",1,"NA"],["NA",2,"NA"],["NA","NA",-2],["NA","NA",-1],["NA","NA",0],["NA","NA",1],["NA","NA",2]],"colors":[[0,0,0,1]],"axes":{"mode":["pretty","pretty","pretty"],"step":[2,1,1],"nticks":[5,5,5],"marklen":[15,15,15],"expand":[1.02999997138977,1.02999997138977,1.02999997138977]},"draw_front":true},"7":{"id":7,"type":"subscene","par3d":{"antialias":8,"FOV":30,"ignoreExtent":false,"listeners":7,"mouseMode":{"none":"none","left":"trackball","right":"zoom","middle":"fov","wheel":"pull"},"observer":[0,0,28.6334247589111],"modelMatrix":[[0.70168662071228,0,0,0.386948764324188],[0,0.523883283138275,1.27550148963928,0.643624365329742],[0,-1.43935739994049,0.464244604110718,-28.4941654205322],[0,0,0,1]],"projMatrix":[[3.73205089569092,0,0,0],[0,3.3827588558197,0,0],[0,0,-3.86370348930359,-103.220191955566],[0,0,-1,0]],"skipRedraw":false,"userMatrix":[[1,0,0,0],[0,0.342020143325668,0.939692620785909,0],[0,-0.939692620785909,0.342020143325668,0],[0,0,0,1]],"userProjection":[[1,0,0,0],[0,1,0,0],[0,0,1,0],[0,0,0,1]],"scale":[0.70168662071228,1.53173220157623,1.35736036300659],"viewport":{"x":0,"y":0,"width":1,"height":1},"zoom":1,"bbox":[-5.36542510986328,4.26251459121704,-2.26356077194214,2.1469988822937,-2.96924614906311,2.00791144371033],"windowRect":[1287,37,2546,1426],"family":"sans","font":1,"cex":1,"useFreeType":true,"fontname":"/home/jrl/R/x86_64-pc-linux-gnu-library/4.1/rgl/fo
nts/FreeSans.ttf","maxClipPlanes":8,"glVersion":4.6,"activeSubscene":0},"embeddings":{"viewport":"replace","projection":"replace","model":"replace","mouse":"replace"},"objects":[12,25,24,26,27,28,11],"subscenes":[],"flags":34067}},"crosstalk":{"key":[],"group":[],"id":[],"options":[]},"width":800,"height":450,"context":{"shiny":false,"rmarkdown":"_output.yml"},"players":[],"webGLoptions":{"preserveDrawingBuffer":true}},"evals":[],"jsHooks":[]}</script> --- Let's estimate 4 clusters, as suggested by the Gap statistic. Depending on the initial centers we choose, we obtain different clusters. <!-- --> ??? Cluster numbering is arbitrary. The algorithm is deliberately not run to convergence here, to illustrate that different starting centers may give different results! --- We can use pairwise scatterplots to assess the results of clustering. <!-- --> --- The results are usually plotted on the first principal components (PCs) rather than on all variables. <div id="rgl15477" style="width:800px;height:450px;" class="rglWebGL html-widget"></div> <script type="application/json" data-for="rgl15477">{"x":{"material":{"color":"#000000","alpha":1,"lit":true,"ambient":"#000000","specular":"#FFFFFF","emission":"#000000","shininess":50,"smooth":true,"front":"filled","back":"filled","size":3,"lwd":1,"fog":true,"point_antialias":false,"line_antialias":false,"texture":null,"textype":"rgb","texmipmap":false,"texminfilter":"linear","texmagfilter":"linear","texenvmap":false,"depth_mask":true,"depth_test":"less","isTransparent":false,"polygon_offset":[0,0],"margin":"","floating":false},"rootSubscene":7,"objects":{"35":{"id":35,"type":"spheres","material":{},"vertices":[[-2.63168549537659,-0.927853226661682,0.533996343612671],[-3.48934125900269,-0.80444473028183,0.648665547370911],[-2.96662354469299,-0.88006180524826,0.957518577575684],[-2.90648293495178,-0.960493505001068,0.582209050655365],[-2.90011978149414,-0.951573312282562,1.05348980426788],[-4.64668846130371,-0.420008331537247,0.993466973304749],[-5.15152215957642,-0.348722904920578,1.35310041904449],[-5.07032632827759,-0.3937718
27220917,1.52002942562103],[-5.14189100265503,-0.319673269987106,1.02730929851532],[-4.31437921524048,-0.631028056144714,1.64088249206543],[-3.77117085456848,-0.767089307308197,1.16702282428741],[-3.76488995552063,-0.87261825799942,1.76384377479553],[-3.7693657875061,-0.728443801403046,1.1600946187973],[-4.48918867111206,-0.67385071516037,1.46255922317505],[0.692081987857819,-1.95337212085724,0.486309975385666],[-0.419616967439651,-1.50638771057129,-0.135995075106621],[-0.620969474315643,-1.62180304527283,-0.240779086947441],[-0.197437986731529,-1.6179096698761,-0.296885430812836],[1.05397343635559,-1.97972762584686,0.810216069221497],[2.22771549224854,-2.19242262840271,-1.25477313995361],[0.955205202102661,-1.87673377990723,-0.420131653547287],[0.691713809967041,-1.95818150043488,0.613064348697662],[1.03671586513519,-1.94791781902313,-0.282570987939835],[0.372663259506226,-1.87692475318909,1.4782487154007],[-0.381259918212891,-1.58989822864532,0.0300822649151087],[-4.52591753005981,-0.571372628211975,-0.414466768503189],[-3.92395663261414,-0.761685788631439,-0.675891876220703],[-4.18574237823486,-0.688712477684021,-0.125654920935631],[-3.69787001609802,-0.723528385162354,-1.98583388328552],[1.11627697944641,-1.73278415203094,0.82032710313797],[1.00686538219452,-1.59483325481415,0.453524470329285],[0.772323250770569,-1.71550035476685,0.899979650974274],[-0.865907609462738,-1.3155609369278,0.638873636722565],[-1.17938148975372,-1.18941235542297,-0.502398014068604],[-1.12238574028015,-1.16000127792358,-0.479694753885269],[-0.875388026237488,-1.14073693752289,-0.457908809185028],[-0.977548241615295,-1.17538011074066,-0.416156798601151],[-3.67794156074524,-0.448768615722656,0.296194851398468],[-4.1688380241394,-0.271730482578278,0.37135523557663],[-3.36851859092712,-0.491958498954773,-0.22547972202301],[-3.22354888916016,-0.569558441638947,-0.02631875872612],[-4.49538326263428,-0.213065579533577,0.184181421995163],[-4.25075435638428,-0.235965877771378,0.0627945885062218
],[-4.49773502349854,-0.120676450431347,-0.0432354658842087],[-1.24167692661285,-1.18959701061249,0.356561720371246],[1.18976628780365,-1.7643940448761,-1.00364971160889],[-1.04908812046051,-1.11818861961365,-0.236609160900116],[-0.950209081172943,-1.20781540870667,-0.114084333181381],[0.73614376783371,-1.77501654624939,0.788366496562958],[1.01915299892426,-1.66970443725586,1.00805795192719],[2.08450126647949,-1.75164699554443,-0.74367743730545],[1.46972906589508,-1.7125928401947,0.896048069000244],[2.31834435462952,-1.82837176322937,-0.465355575084686],[2.44907069206238,-1.75162220001221,0.0589442849159241],[2.03283214569092,-1.88445854187012,-0.663971126079559],[1.99250602722168,-1.87092959880829,-1.17211544513702],[0.914143025875092,-1.48561251163483,0.375189542770386],[1.42250156402588,-1.56305205821991,-0.0794070810079575],[2.21696925163269,-1.65704226493835,-2.40573406219482],[1.00395834445953,-1.53183257579803,-1.14199542999268],[0.951667547225952,-1.58578443527222,-0.0788717344403267],[-3.69833278656006,-0.211122557520866,0.252222120761871],[-4.01639270782471,-0.0469466783106327,0.245247930288315],[-3.07761287689209,-0.287072092294693,-0.157827541232109],[-3.34566164016724,-0.250364243984222,-0.0453445576131344],[-2.89900040626526,-0.375634849071503,0.719595551490784],[-4.87503957748413,0.0647720545530319,0.507240772247314],[-3.54472661018372,-0.1767508238554,-0.351667851209641],[-3.62823724746704,-0.20433583855629,-0.347628384828568],[-4.19857549667358,-0.0316951237618923,0.104493997991085],[0.825591564178467,-1.78533434867859,0.97272127866745],[-3.00499486923218,-0.372950911521912,0.258377522230148],[-2.8451452255249,-0.414354473352432,-0.451231360435486],[-2.82348942756653,-0.354036778211594,-1.11365604400635],[-3.0487174987793,-0.33016762137413,-0.333827137947083],[-0.0340252369642258,-1.41733860969543,0.355616331100464],[1.13260114192963,-1.51156878471375,-0.667376160621643],[0.891631960868835,-1.39542281627655,-1.28869962692261],[1.67305302619934,-1.54
937016963959,-0.437780380249023],[0.868664741516113,-1.5133284330368,0.0610466636717319],[1.36679935455322,-1.41501688957214,0.0201793219894171],[0.598041415214539,-1.43277370929718,0.594023466110229],[1.34842538833618,-1.46761333942413,0.654217779636383],[1.39973640441895,-1.49882984161377,0.19259886443615],[-3.55779266357422,0.00941522140055895,0.039181400090456],[-2.98972725868225,-0.208162873983383,0.630841195583344],[-3.17365741729736,-0.086647242307663,-0.0566643103957176],[-2.7092821598053,-0.15011228621006,-0.516207814216614],[-2.94406628608704,-0.132295787334442,0.292448997497559],[-4.75574398040771,0.399175107479095,0.240632310509682],[-3.76899123191833,0.13903796672821,0.0865144357085228],[-3.50335621833801,0.0430567562580109,-0.122209750115871],[-3.01277375221252,-0.0421661771833897,-0.537622809410095],[-4.88245439529419,0.426137953996658,0.570420026779175],[-5.21013593673706,0.504780888557434,0.496957212686539],[-3.6693480014801,-0.0420929752290249,0.76787656545639],[-0.694477140903473,-0.729185223579407,-0.63669341802597],[-0.765763282775879,-0.712412178516388,-1.28533864021301],[-0.634110867977142,-0.773483097553253,-0.446338295936584],[-0.56155002117157,-0.750194489955902,-0.703096628189087],[-0.164672270417213,-0.721550107002258,-0.257683575153351],[2.41020178794861,-1.42243719100952,-1.42371928691864],[-3.92429304122925,0.221986219286919,-0.800241589546204],[-4.17056322097778,0.265844792127609,-0.177070364356041],[-3.81394243240356,0.162116065621376,-0.172528013586998],[-3.90985369682312,0.100800178945065,0.0604686848819256],[-0.661333918571472,-0.812219500541687,-0.0718340873718262],[1.27587962150574,-1.39629030227661,-0.895649671554565],[1.31840848922729,-1.30011260509491,-1.17600512504578],[0.959955632686615,-1.2782940864563,-0.0368509739637375],[1.01419341564178,-1.63487219810486,0.985979914665222],[1.08852136135101,-1.37687182426453,-0.813974976539612],[-0.218060702085495,-0.931284010410309,0.558543682098389],[1.2012619972229,-1.22605311870575
,0.447774171829224],[-3.1174578666687,-0.00880237855017185,-0.0213093366473913],[-4.66223335266113,0.352794885635376,1.40800082683563],[2.53480505943298,-1.40446949005127,-0.77115523815155],[1.2423278093338,-1.30953097343445,0.333263397216797],[0.509156823158264,-1.26756453514099,0.621764063835144],[0.214239209890366,-1.16362464427948,0.0960156247019768],[-2.91470742225647,-0.228489682078362,0.901013374328613],[0.437750965356827,-1.08682870864868,0.80527925491333],[-0.652873873710632,-0.832958221435547,0.643759906291962],[-3.70873808860779,-0.142764806747437,0.784628510475159],[-0.300282269716263,-0.502968847751617,-0.57269674539566],[-0.49934908747673,-0.511687517166138,-0.38896968960762],[-0.886008381843567,-0.474090695381165,-1.00324058532715],[2.36418771743774,-1.02250528335571,-0.491409748792648],[1.28755617141724,-0.913217902183533,0.00653791753575206],[2.73208785057068,-1.04944062232971,-1.07013690471649],[1.22490239143372,-0.896950006484985,-0.257192581892014],[-1.05191576480865,-0.329893708229065,-1.11493527889252],[-1.01868414878845,-0.335898071527481,-1.35388255119324],[-0.872001469135284,-0.352103292942047,-0.786509931087494],[-2.67780327796936,0.183905869722366,-0.297244161367416],[-3.35795855522156,0.355644524097443,-0.741319298744202],[-3.16097640991211,0.265496283769608,-0.2752286195755],[-2.81555676460266,0.257331788539886,-1.17229819297791],[-2.79952073097229,0.183410376310349,-0.844387412071228],[1.61917781829834,-0.929834604263306,0.217048853635788],[1.74136853218079,-1.14267480373383,0.472873121500015],[1.27915692329407,-0.995623409748077,0.718361556529999],[2.42982339859009,-1.13419449329376,0.352670729160309],[2.43886137008667,-0.988389015197754,-0.505967557430267],[1.52815222740173,-1.0076402425766,0.834641873836517],[1.43566429615021,-1.1206259727478,0.384551882743835],[1.2088565826416,-0.982539117336273,0.8663729429245],[0.833578586578369,-0.918156325817108,0.490828841924667],[1.12816905975342,-0.921902000904083,0.420566916465759],[2.030016
66069031,-1.00169885158539,0.46372389793396],[-0.530352115631104,-0.191865235567093,-0.512185513973236],[-0.888820707798004,-0.10154115408659,-0.588408589363098],[-0.149865210056305,-0.27387061715126,-2.44295191764832],[-0.171201601624489,-0.342555552721024,-1.86819338798523],[-3.86212086677551,0.811911463737488,0.389356136322021],[-3.06409358978271,0.577251851558685,-0.441252797842026],[-2.91337203979492,0.573738694190979,-0.536330163478851],[-3.30957078933716,0.617790579795837,-0.370580762624741],[-0.618859767913818,-0.0438417606055737,-2.34571003913879],[-0.946181178092957,-0.0449847504496574,-1.60618197917938],[-0.951895177364349,-0.0920501872897148,-1.73237764835358],[-0.525528192520142,-0.0881275683641434,-1.6858811378479],[-0.611304461956024,-0.152311503887177,0.0110319619998336],[-1.52100038528442,0.159345984458923,0.211730241775513],[-2.38042449951172,0.0749687328934669,0.529054462909698],[1.74522590637207,-0.713764309883118,0.370351761579514],[1.05002510547638,-0.65961354970932,-0.309255033731461],[-0.392372220754623,-0.234814867377281,-0.350129276514053],[1.28275799751282,-0.686908841133118,-0.80171263217926],[0.590707361698151,-0.589166402816772,0.899566769599915],[1.64012551307678,-0.828158736228943,0.0488075241446495],[1.07921922206879,-0.663177907466888,-0.163895577192307],[-0.400218337774277,-0.377271980047226,0.0921517312526703],[1.73639786243439,-0.793743371963501,1.07867431640625],[-0.375043749809265,-0.207419037818909,-0.847505509853363],[0.787424623966217,-0.6565260887146,0.397324681282043],[0.922137200832367,-0.598898410797119,-0.371407985687256],[0.504862248897552,-0.598376154899597,0.452151596546173],[0.501449882984161,-0.551205158233643,1.03882050514221],[2.5537257194519,-0.770110368728638,0.047467116266489],[1.40020740032196,-0.372950464487076,0.453069984912872],[1.5286910533905,-0.516060650348663,-0.0504651628434658],[0.93128764629364,-0.352173179388046,0.508191108703613],[1.74106419086456,-0.517787516117096,-0.279484629631042],[1.51382517
814636,-0.485222160816193,0.562565267086029],[-2.62832570075989,0.74480003118515,0.0692759677767754],[-2.85583138465881,0.743003904819489,0.0595913417637348],[-2.2902843952179,0.576608717441559,-0.293020367622375],[-3.12253189086914,0.771693587303162,0.043972983956337],[-0.416789948940277,0.138939127326012,-0.176813080906868],[-0.725705325603485,0.227116927504539,0.0736720785498619],[0.331410080194473,0.0427377112209797,-0.811209082603455],[-0.0108255511149764,0.0973969250917435,-0.875371754169464],[2.81594848632812,-0.590079724788666,-1.67275106906891],[2.37198686599731,-0.63478946685791,-1.80779695510864],[1.81935465335846,-0.547305285930634,1.02402627468109],[2.6057026386261,-0.522914171218872,0.0899576917290688],[-0.485054552555084,0.190710753202438,-1.12332630157471],[-0.0696577876806259,0.103392191231251,-2.35368323326111],[-0.925948858261108,0.218413770198822,-0.664997518062592],[-0.459451913833618,0.0488919541239738,-1.14289522171021],[1.65322172641754,-0.544351398944855,1.72219896316528],[2.25620675086975,-0.469508230686188,0.203829482197762],[1.80562508106232,-0.498554229736328,0.223190367221832],[1.09666264057159,-0.35529550909996,0.892665266990662],[0.408276945352554,-0.328851163387299,-0.0498328283429146],[-2.86634469032288,0.596899747848511,-0.0227691140025854],[1.13383054733276,-0.387507915496826,-2.18145775794983],[-0.216612592339516,-0.122141748666763,-0.108152560889721],[-0.83509773015976,0.0912704914808273,-0.826997637748718],[-3.47669649124146,0.93037223815918,0.407299637794495],[-3.12267208099365,0.674432337284088,0.275685489177704],[-2.36101341247559,0.501966595649719,-0.655676782131195],[-2.69355273246765,0.545944035053253,-0.221670135855675],[2.38973355293274,-0.207075104117393,-0.327387481927872],[1.69236755371094,-0.159383475780487,0.825905382633209],[2.92463684082031,-0.20120632648468,-0.165826484560966],[1.24354672431946,-0.187613174319267,0.463806748390198],[2.39630794525146,-0.19422647356987,0.342759817838669],[-2.50928092002869,0.91546
9467639923,0.372220486402512],[-1.38223052024841,0.778338849544525,-1.9185928106308],[-2.66745042800903,0.950634896755219,-0.179221227765083],[-2.4141845703125,0.91456013917923,-0.685424745082855],[-0.833070278167725,0.405297756195068,-0.711968660354614],[-0.449231147766113,0.414556503295898,-0.747948527336121],[-0.463524580001831,0.40560907125473,-1.13934421539307],[-0.384189456701279,0.399965643882751,-1.57540786266327],[-3.6702868938446,1.21045506000519,0.728805482387543],[-3.32303977012634,1.07357883453369,0.636915445327759],[-3.74322891235352,1.244349360466,0.366106003522873],[-2.83370137214661,1.07917428016663,-0.499167531728745],[1.79458212852478,-0.270544618368149,0.964580118656158],[0.998599588871002,-0.0667160600423813,0.0628614649176598],[1.89816725254059,-0.280044347047806,-0.451196849346161],[1.05578553676605,-0.052497323602438,0.1706532984972],[2.23582291603088,-0.239260986447334,0.101358287036419],[2.04386830329895,-0.106369897723198,0.63676530122757],[2.14069390296936,-0.261312872171402,0.319203943014145],[1.70792722702026,-0.163670808076859,1.06226706504822],[0.113367348909378,0.134785547852516,0.323094755411148],[0.464767932891846,-0.178119093179703,1.1713935136795],[0.890703499317169,-0.335206687450409,1.02661514282227],[3.63531279563904,0.265626519918442,-0.967379033565521],[2.40468883514404,0.102851569652557,1.23270046710968],[2.89833831787109,-0.0137436995282769,-0.597708940505981],[2.88451838493347,0.237526074051857,-0.0761578306555748],[2.70584535598755,0.0724450275301933,0.565369606018066],[-1.1946474313736,0.927115559577942,-0.453713923692703],[-2.20549750328064,1.18395590782166,0.230918169021606],[-2.04894399642944,1.13272321224213,0.451364547014236],[-0.269348829984665,0.650346219539642,-1.56116497516632],[0.115949794650078,0.511220633983612,-1.08537268638611],[0.0552713684737682,0.436935752630234,-0.300473392009735],[1.08442425727844,0.173388376832008,0.304069012403488],[-0.278847724199295,0.640650033950806,-0.852891623973846],[-0.146588
22119236,0.543237745761871,-0.868424415588379],[-0.473483741283417,0.655025839805603,-0.363739460706711],[0.127634152770042,0.478329211473465,-0.606247127056122],[-0.423331141471863,0.658930122852325,-1.41887617111206],[-0.963451683521271,0.678259134292603,-0.18897944688797],[-2.06618165969849,1.08532631397247,0.359598845243454],[-1.56001687049866,0.734336137771606,0.561175167560577],[-2.1433699131012,0.984590590000153,1.01887702941895],[-2.41895580291748,1.22361600399017,-0.10573860257864],[2.11536955833435,0.034282598644495,0.245125666260719],[1.10864329338074,0.202837154269218,0.860335469245911],[1.3112770318985,0.10342963039875,0.796262204647064],[1.81257855892181,0.110583357512951,0.922145307064056],[0.866073369979858,0.0198787841945887,0.469772964715958],[0.857187926769257,0.191609233617783,-0.128001689910889],[1.1673184633255,0.181415677070618,-0.518775463104248],[1.11241388320923,0.0432789847254753,0.58862030506134],[0.465790063142776,0.208165854215622,-0.0585965402424335],[-0.708052575588226,0.428825348615646,0.461341202259064],[0.68166708946228,0.126797333359718,0.204449251294136],[-0.741165637969971,0.491207629442215,-0.329870849847794],[2.11105608940125,0.0255490988492966,0.888118326663971],[2.10994029045105,0.0154944658279419,0.202769681811333],[-0.450617700815201,0.913258731365204,-0.106484100222588],[0.332823127508163,0.673830986022949,-1.08877992630005],[1.11723756790161,0.386323690414429,-0.44856521487236],[0.032979566603899,0.823394656181335,-1.17362284660339],[-0.349127650260925,0.895388960838318,-0.578369855880737],[-1.92643880844116,1.34498381614685,-0.616165637969971],[-2.02301597595215,1.32812821865082,0.0870473235845566],[-2.51177048683167,1.46471786499023,0.0334950685501099],[-1.98925876617432,1.40811216831207,-0.499030202627182],[-2.69587063789368,1.61406862735748,-0.559521436691284],[-2.54138469696045,1.47049236297607,-0.370182007551193],[-1.53015959262848,1.265545129776,-0.323371678590775],[-2.59182858467102,1.55632388591766,0.21331325173
378],[2.13250875473022,0.268170028924942,1.22395598888397],[2.42249274253845,0.318183958530426,0.868075370788574],[2.23674559593201,0.40062153339386,1.2539656162262],[1.41293895244598,0.418580710887909,0.518694818019867],[0.953025639057159,0.796800911426544,-1.63236236572266],[-1.57277369499207,1.58123171329498,-1.150710105896],[2.17808365821838,0.54060310125351,-2.88896942138672],[-0.0435368716716766,1.23334658145905,-2.58725810050964],[1.9785168170929,0.429222464561462,1.44965207576752],[2.19374489784241,0.419648289680481,0.925339043140411],[2.70040059089661,0.257327139377594,-0.516572177410126],[2.3940863609314,0.462692201137543,1.09788620471954],[1.33429896831512,0.516397476196289,0.243179187178612],[0.0531457141041756,0.845101594924927,1.7285373210907],[0.0651683211326599,0.815850853919983,1.1104234457016],[1.36087572574615,0.628420650959015,1.35493397712708],[2.55819940567017,0.85216611623764,1.26600623130798],[3.10662364959717,0.65423858165741,-0.178299382328987],[2.23892378807068,0.581498026847839,0.678542673587799],[2.74539923667908,0.655854046344757,0.577490210533142],[1.4239319562912,0.753597378730774,0.0756099820137024],[1.48104476928711,0.734566509723663,-0.555873453617096],[1.44852924346924,0.732450664043427,-1.31763088703156],[0.0629764199256897,1.05855309963226,-1.39265620708466],[2.26520395278931,0.67299872636795,0.668027341365814],[1.46613740921021,0.783235192298889,0.464294731616974],[2.05376744270325,0.715866088867188,-0.131634086370468],[1.95636808872223,0.88053685426712,0.976706266403198],[2.0464174747467,0.651567697525024,0.744299292564392],[3.33543539047241,0.925144672393799,0.371995031833649],[0.957220852375031,0.830361723899841,0.773960947990417],[3.17792463302612,0.766491055488586,-0.23912014067173],[3.79303979873657,0.817247629165649,-1.00487554073334],[3.83179903030396,0.854479432106018,-1.7633900642395],[2.20270204544067,1.05322241783142,-0.991101682186127],[2.06759715080261,0.884931147098541,-1.85712778568268],[2.89428162574768,0.82679
9094676971,1.72218441963196],[2.60867619514465,0.618459820747375,-0.0953152477741241],[2.35979557037354,0.415106415748596,0.730256021022797],[0.0049392944201827,1.30745565891266,1.81213712692261],[1.38367700576782,0.347695618867874,1.51626420021057],[1.86413407325745,0.841073095798492,0.836453258991241],[2.26736831665039,0.650562584400177,0.147665530443192],[1.58600115776062,0.890777587890625,0.368391692638397],[1.49136328697205,0.939045488834381,0.059490405023098],[1.14294576644897,0.945273995399475,0.71606707572937],[0.0331991203129292,1.21861708164215,1.08886384963989],[1.49086737632751,0.944253146648407,1.40142428874969],[3.19055223464966,0.854468524456024,0.549984693527222],[2.98069930076599,0.910176634788513,0.689320147037506],[2.87128734588623,0.759726941585541,0.682474851608276],[2.6128077507019,0.805012226104736,-0.0438733287155628],[3.13284945487976,0.876556515693665,-0.375115096569061],[2.93168330192566,0.920495271682739,0.286485016345978],[2.57137274742126,0.827956140041351,0.634442448616028],[2.37376236915588,0.920524418354034,0.900164723396301],[2.58231949806213,0.856689751148224,0.540130853652954],[2.6541736125946,0.814721465110779,-1.17414057254791],[2.10611367225647,0.895007073879242,1.12741661071777],[2.13353562355042,0.923996984958649,1.08171617984772],[2.24271512031555,0.922249376773834,0.215298846364021],[1.61276996135712,1.08496737480164,0.892892479896545],[2.17963814735413,0.991119921207428,-0.405311524868011],[1.77586889266968,1.0999618768692,-1.38771426677704],[1.39963567256927,1.38316512107849,-1.20487058162689],[-0.000582694308832288,1.31990766525269,1.1258373260498],[0.0938129797577858,1.26396143436432,0.726708710193634],[-0.266738951206207,1.46386992931366,-0.263422161340714],[-0.794305980205536,2.07586073875427,-1.56120562553406],[0.297165811061859,1.20625329017639,-0.708729565143585],[-0.154116898775101,1.28221333026886,-0.801354050636292],[2.0862078666687,1.14869272708893,-0.85563462972641],[1.91487061977386,1.13398694992065,-0.576171
219348907],[2.32940864562988,1.25613212585449,-0.0715694054961205],[1.93571138381958,1.22217702865601,0.340661823749542],[1.75404191017151,1.19390368461609,0.329306334257126],[1.62464308738708,1.2322803735733,-0.441344499588013],[1.26617383956909,1.17670798301697,-0.0450324974954128],[2.53740620613098,1.16309559345245,0.948479771614075],[2.98929309844971,1.15674304962158,0.014771050773561],[2.64808225631714,0.984768211841583,0.0291957072913647],[2.62761735916138,1.23158621788025,1.11304175853729],[2.7459123134613,1.17547202110291,0.242832571268082],[2.15173125267029,1.26958668231964,1.19634401798248],[2.3259265422821,1.23029339313507,1.13686966896057],[2.5030677318573,1.17247867584229,0.257847398519516],[2.75008368492126,1.17323482036591,1.09896659851074],[2.51630878448486,1.01252293586731,0.674579083919525],[2.85937929153442,1.17813766002655,0.700660407543182],[0.424370437860489,1.56767439842224,-0.161196708679199],[1.05766975879669,2.01329588890076,-0.137671336531639],[1.2428389787674,1.18800508975983,0.711766541004181],[-0.0817797482013702,1.55573451519012,0.286072462797165],[1.45323300361633,1.35780036449432,1.1069073677063],[1.87687039375305,1.34591937065125,1.58176231384277],[1.44755399227142,1.2909187078476,-0.284574687480927],[1.43905866146088,1.22507536411285,0.310798048973083],[4.10722541809082,1.32868552207947,-1.937251329422],[1.56481635570526,1.22365701198578,1.92763459682465],[2.03923630714417,1.15036725997925,-0.585685014724731],[2.19971966743469,1.25786578655243,-0.762552499771118]],"colors":[[1,0,0,1],[1,0,0,1],[1,0,0,1],[1,0,0,1],[1,0,0,1],[1,0,0,1],[1,0,0,1],[1,0,0,1],[1,0,0,1],[1,0,0,1],[1,0,0,1],[1,0,0,1],[1,0,0,1],[1,0,0,1],[0.501960813999176,1,0,1],[0.501960813999176,1,0,1],[0.501960813999176,1,0,1],[0.501960813999176,1,0,1],[0.501960813999176,1,0,1],[0,1,1,1],[0.501960813999176,1,0,1],[0.501960813999176,1,0,1],[0.501960813999176,1,0,1],[0.501960813999176,1,0,1],[0.501960813999176,1,0,1],[1,0,0,1],[1,0,0,1],[1,0,0,1],[1,0,0,1],[0.5019608139991
76,1,0,1],[0.501960813999176,1,0,1],[0.501960813999176,1,0,1],[0.501960813999176,1,0,1],[0.501960813999176,1,0,1],[0.501960813999176,1,0,1],[0.501960813999176,1,0,1],[0.501960813999176,1,0,1],[1,0,0,1],[1,0,0,1],[1,0,0,1],[1,0,0,1],[1,0,0,1],[1,0,0,1],[1,0,0,1],[0.501960813999176,1,0,1],[0.501960813999176,1,0,1],[0.501960813999176,1,0,1],[0.501960813999176,1,0,1],[0.501960813999176,1,0,1],[0.501960813999176,1,0,1],[0,1,1,1],[0.501960813999176,1,0,1],[0,1,1,1],[0,1,1,1],[0,1,1,1],[0,1,1,1],[0.501960813999176,1,0,1],[0.501960813999176,1,0,1],[0,1,1,1],[0.501960813999176,1,0,1],[0.501960813999176,1,0,1],[1,0,0,1],[1,0,0,1],[1,0,0,1],[1,0,0,1],[1,0,0,1],[1,0,0,1],[1,0,0,1],[1,0,0,1],[1,0,0,1],[0.501960813999176,1,0,1],[1,0,0,1],[1,0,0,1],[1,0,0,1],[1,0,0,1],[0.501960813999176,1,0,1],[0.501960813999176,1,0,1],[0.501960813999176,1,0,1],[0.501960813999176,1,0,1],[0.501960813999176,1,0,1],[0.501960813999176,1,0,1],[0.501960813999176,1,0,1],[0.501960813999176,1,0,1],[0.501960813999176,1,0,1],[1,0,0,1],[1,0,0,1],[1,0,0,1],[1,0,0,1],[1,0,0,1],[1,0,0,1],[1,0,0,1],[1,0,0,1],[1,0,0,1],[1,0,0,1],[1,0,0,1],[1,0,0,1],[0.501960813999176,1,0,1],[0.501960813999176,1,0,1],[0.501960813999176,1,0,1],[0.501960813999176,1,0,1],[0.501960813999176,1,0,1],[0,1,1,1],[1,0,0,1],[1,0,0,1],[1,0,0,1],[1,0,0,1],[0.501960813999176,1,0,1],[0.501960813999176,1,0,1],[0.501960813999176,1,0,1],[0.501960813999176,1,0,1],[0.501960813999176,1,0,1],[0.501960813999176,1,0,1],[0.501960813999176,1,0,1],[0.501960813999176,1,0,1],[1,0,0,1],[1,0,0,1],[0,1,1,1],[0.501960813999176,1,0,1],[0.501960813999176,1,0,1],[0.501960813999176,1,0,1],[1,0,0,1],[0.501960813999176,1,0,1],[0.501960813999176,1,0,1],[1,0,0,1],[0.501960813999176,1,0,1],[0.501960813999176,1,0,1],[0.501960813999176,1,0,1],[0,1,1,1],[0.501960813999176,1,0,1],[0,1,1,1],[0.501960813999176,1,0,1],[0.501960813999176,1,0,1],[0.501960813999176,1,0,1],[0.501960813999176,1,0,1],[0.501960813999176,0,1,1],[1,0,0,1],[1,0,0,1],[0.501960813999176,0,1,1],[0.50196081399
9176,0,1,1],[0,1,1,1],[0,1,1,1],[0.501960813999176,1,0,1],[0,1,1,1],[0,1,1,1],[0.501960813999176,1,0,1],[0.501960813999176,1,0,1],[0.501960813999176,1,0,1],[0.501960813999176,1,0,1],[0.501960813999176,1,0,1],[0,1,1,1],[0.501960813999176,1,0,1],[0.501960813999176,1,0,1],[0.501960813999176,1,0,1],[0.501960813999176,1,0,1],[1,0,0,1],[0.501960813999176,0,1,1],[0.501960813999176,0,1,1],[0.501960813999176,0,1,1],[0.501960813999176,1,0,1],[0.501960813999176,1,0,1],[0.501960813999176,1,0,1],[0.501960813999176,1,0,1],[0.501960813999176,1,0,1],[0.501960813999176,0,1,1],[0.501960813999176,0,1,1],[0,1,1,1],[0.501960813999176,1,0,1],[0.501960813999176,1,0,1],[0.501960813999176,1,0,1],[0.501960813999176,1,0,1],[0,1,1,1],[0.501960813999176,1,0,1],[0.501960813999176,1,0,1],[0,1,1,1],[0.501960813999176,1,0,1],[0.501960813999176,1,0,1],[0.501960813999176,1,0,1],[0.501960813999176,1,0,1],[0.501960813999176,1,0,1],[0,1,1,1],[0,1,1,1],[0,1,1,1],[0.501960813999176,1,0,1],[0,1,1,1],[0,1,1,1],[0.501960813999176,0,1,1],[0.501960813999176,0,1,1],[0.501960813999176,0,1,1],[0.501960813999176,0,1,1],[0.501960813999176,1,0,1],[0.501960813999176,1,0,1],[0.501960813999176,1,0,1],[0.501960813999176,1,0,1],[0,1,1,1],[0,1,1,1],[0,1,1,1],[0,1,1,1],[0.501960813999176,1,0,1],[0.501960813999176,1,0,1],[0.501960813999176,1,0,1],[0.501960813999176,1,0,1],[0,1,1,1],[0,1,1,1],[0,1,1,1],[0.501960813999176,1,0,1],[0.501960813999176,1,0,1],[0.501960813999176,0,1,1],[0.501960813999176,1,0,1],[0.501960813999176,1,0,1],[0.501960813999176,1,0,1],[0.501960813999176,0,1,1],[0.501960813999176,0,1,1],[0.501960813999176,0,1,1],[0.501960813999176,0,1,1],[0,1,1,1],[0,1,1,1],[0,1,1,1],[0,1,1,1],[0,1,1,1],[0.501960813999176,0,1,1],[0.501960813999176,0,1,1],[0.501960813999176,0,1,1],[0.501960813999176,0,1,1],[0.501960813999176,1,0,1],[0.501960813999176,1,0,1],[0.501960813999176,1,0,1],[0.501960813999176,1,0,1],[0.501960813999176,0,1,1],[0.501960813999176,0,1,1],[0.501960813999176,0,1,1],[0.501960813999176,0,1,1],[0,1,1,1],[0
.501960813999176,1,0,1],[0,1,1,1],[0.501960813999176,1,0,1],[0,1,1,1],[0,1,1,1],[0,1,1,1],[0,1,1,1],[0.501960813999176,1,0,1],[0.501960813999176,1,0,1],[0.501960813999176,1,0,1],[0,1,1,1],[0,1,1,1],[0,1,1,1],[0,1,1,1],[0,1,1,1],[0.501960813999176,0,1,1],[0.501960813999176,0,1,1],[0.501960813999176,0,1,1],[0.501960813999176,1,0,1],[0.501960813999176,1,0,1],[0.501960813999176,1,0,1],[0,1,1,1],[0.501960813999176,1,0,1],[0.501960813999176,1,0,1],[0.501960813999176,1,0,1],[0.501960813999176,1,0,1],[0.501960813999176,1,0,1],[0.501960813999176,0,1,1],[0.501960813999176,0,1,1],[0.501960813999176,0,1,1],[0.501960813999176,0,1,1],[0.501960813999176,0,1,1],[0,1,1,1],[0,1,1,1],[0,1,1,1],[0,1,1,1],[0.501960813999176,1,0,1],[0.501960813999176,1,0,1],[0,1,1,1],[0.501960813999176,1,0,1],[0.501960813999176,1,0,1],[0.501960813999176,1,0,1],[0.501960813999176,1,0,1],[0.501960813999176,1,0,1],[0,1,1,1],[0,1,1,1],[0.501960813999176,1,0,1],[0.501960813999176,1,0,1],[0,1,1,1],[0.501960813999176,1,0,1],[0.501960813999176,1,0,1],[0.501960813999176,0,1,1],[0.501960813999176,0,1,1],[0.501960813999176,0,1,1],[0.501960813999176,0,1,1],[0.501960813999176,0,1,1],[0.501960813999176,0,1,1],[0.501960813999176,0,1,1],[0.501960813999176,0,1,1],[0,1,1,1],[0,1,1,1],[0,1,1,1],[0,1,1,1],[0,1,1,1],[0.501960813999176,0,1,1],[0,1,1,1],[0.501960813999176,1,0,1],[0,1,1,1],[0,1,1,1],[0,1,1,1],[0,1,1,1],[0,1,1,1],[0.501960813999176,1,0,1],[0.501960813999176,1,0,1],[0,1,1,1],[0,1,1,1],[0,1,1,1],[0,1,1,1],[0,1,1,1],[0,1,1,1],[0,1,1,1],[0,1,1,1],[0.501960813999176,1,0,1],[0,1,1,1],[0,1,1,1],[0,1,1,1],[0,1,1,1],[0,1,1,1],[0,1,1,1],[0,1,1,1],[0,1,1,1],[0,1,1,1],[0,1,1,1],[0,1,1,1],[0,1,1,1],[0,1,1,1],[0,1,1,1],[0,1,1,1],[0.501960813999176,1,0,1],[0,1,1,1],[0,1,1,1],[0,1,1,1],[0,1,1,1],[0,1,1,1],[0,1,1,1],[0.501960813999176,1,0,1],[0,1,1,1],[0,1,1,1],[0,1,1,1],[0,1,1,1],[0,1,1,1],[0,1,1,1],[0,1,1,1],[0,1,1,1],[0,1,1,1],[0,1,1,1],[0,1,1,1],[0,1,1,1],[0,1,1,1],[0,1,1,1],[0,1,1,1],[0,1,1,1],[0,1,1,1],[0,1,1,1],[0.5019608
13999176,1,0,1],[0.501960813999176,1,0,1],[0.501960813999176,1,0,1],[0.501960813999176,0,1,1],[0.501960813999176,1,0,1],[0.501960813999176,1,0,1],[0,1,1,1],[0,1,1,1],[0,1,1,1],[0,1,1,1],[0,1,1,1],[0,1,1,1],[0,1,1,1],[0,1,1,1],[0,1,1,1],[0,1,1,1],[0,1,1,1],[0,1,1,1],[0,1,1,1],[0,1,1,1],[0,1,1,1],[0,1,1,1],[0,1,1,1],[0,1,1,1],[0.501960813999176,1,0,1],[0,1,1,1],[0,1,1,1],[0.501960813999176,1,0,1],[0,1,1,1],[0,1,1,1],[0,1,1,1],[0,1,1,1],[0,1,1,1],[0,1,1,1],[0,1,1,1],[0,1,1,1]],"radii":[[0.108964458107948]],"centers":[[-2.63168549537659,-0.927853226661682,0.533996343612671],[-3.48934125900269,-0.80444473028183,0.648665547370911],[-2.96662354469299,-0.88006180524826,0.957518577575684],[-2.90648293495178,-0.960493505001068,0.582209050655365],[-2.90011978149414,-0.951573312282562,1.05348980426788],[-4.64668846130371,-0.420008331537247,0.993466973304749],[-5.15152215957642,-0.348722904920578,1.35310041904449],[-5.07032632827759,-0.393771827220917,1.52002942562103],[-5.14189100265503,-0.319673269987106,1.02730929851532],[-4.31437921524048,-0.631028056144714,1.64088249206543],[-3.77117085456848,-0.767089307308197,1.16702282428741],[-3.76488995552063,-0.87261825799942,1.76384377479553],[-3.7693657875061,-0.728443801403046,1.1600946187973],[-4.48918867111206,-0.67385071516037,1.46255922317505],[0.692081987857819,-1.95337212085724,0.486309975385666],[-0.419616967439651,-1.50638771057129,-0.135995075106621],[-0.620969474315643,-1.62180304527283,-0.240779086947441],[-0.197437986731529,-1.6179096698761,-0.296885430812836],[1.05397343635559,-1.97972762584686,0.810216069221497],[2.22771549224854,-2.19242262840271,-1.25477313995361],[0.955205202102661,-1.87673377990723,-0.420131653547287],[0.691713809967041,-1.95818150043488,0.613064348697662],[1.03671586513519,-1.94791781902313,-0.282570987939835],[0.372663259506226,-1.87692475318909,1.4782487154007],[-0.381259918212891,-1.58989822864532,0.0300822649151087],[-4.52591753005981,-0.571372628211975,-0.414466768503189],[-3.92395663261414,
-0.761685788631439,-0.675891876220703],[-4.18574237823486,-0.688712477684021,-0.125654920935631],[-3.69787001609802,-0.723528385162354,-1.98583388328552],[1.11627697944641,-1.73278415203094,0.82032710313797],[1.00686538219452,-1.59483325481415,0.453524470329285],[0.772323250770569,-1.71550035476685,0.899979650974274],[-0.865907609462738,-1.3155609369278,0.638873636722565],[-1.17938148975372,-1.18941235542297,-0.502398014068604],[-1.12238574028015,-1.16000127792358,-0.479694753885269],[-0.875388026237488,-1.14073693752289,-0.457908809185028],[-0.977548241615295,-1.17538011074066,-0.416156798601151],[-3.67794156074524,-0.448768615722656,0.296194851398468],[-4.1688380241394,-0.271730482578278,0.37135523557663],[-3.36851859092712,-0.491958498954773,-0.22547972202301],[-3.22354888916016,-0.569558441638947,-0.02631875872612],[-4.49538326263428,-0.213065579533577,0.184181421995163],[-4.25075435638428,-0.235965877771378,0.0627945885062218],[-4.49773502349854,-0.120676450431347,-0.0432354658842087],[-1.24167692661285,-1.18959701061249,0.356561720371246],[1.18976628780365,-1.7643940448761,-1.00364971160889],[-1.04908812046051,-1.11818861961365,-0.236609160900116],[-0.950209081172943,-1.20781540870667,-0.114084333181381],[0.73614376783371,-1.77501654624939,0.788366496562958],[1.01915299892426,-1.66970443725586,1.00805795192719],[2.08450126647949,-1.75164699554443,-0.74367743730545],[1.46972906589508,-1.7125928401947,0.896048069000244],[2.31834435462952,-1.82837176322937,-0.465355575084686],[2.44907069206238,-1.75162220001221,0.0589442849159241],[2.03283214569092,-1.88445854187012,-0.663971126079559],[1.99250602722168,-1.87092959880829,-1.17211544513702],[0.914143025875092,-1.48561251163483,0.375189542770386],[1.42250156402588,-1.56305205821991,-0.0794070810079575],[2.21696925163269,-1.65704226493835,-2.40573406219482],[1.00395834445953,-1.53183257579803,-1.14199542999268],[0.951667547225952,-1.58578443527222,-0.0788717344403267],[-3.69833278656006,-0.211122557520866,0.25222212
0761871],[-4.01639270782471,-0.0469466783106327,0.245247930288315],[-3.07761287689209,-0.287072092294693,-0.157827541232109],[-3.34566164016724,-0.250364243984222,-0.0453445576131344],[-2.89900040626526,-0.375634849071503,0.719595551490784],[-4.87503957748413,0.0647720545530319,0.507240772247314],[-3.54472661018372,-0.1767508238554,-0.351667851209641],[-3.62823724746704,-0.20433583855629,-0.347628384828568],[-4.19857549667358,-0.0316951237618923,0.104493997991085],[0.825591564178467,-1.78533434867859,0.97272127866745],[-3.00499486923218,-0.372950911521912,0.258377522230148],[-2.8451452255249,-0.414354473352432,-0.451231360435486],[-2.82348942756653,-0.354036778211594,-1.11365604400635],[-3.0487174987793,-0.33016762137413,-0.333827137947083],[-0.0340252369642258,-1.41733860969543,0.355616331100464],[1.13260114192963,-1.51156878471375,-0.667376160621643],[0.891631960868835,-1.39542281627655,-1.28869962692261],[1.67305302619934,-1.54937016963959,-0.437780380249023],[0.868664741516113,-1.5133284330368,0.0610466636717319],[1.36679935455322,-1.41501688957214,0.0201793219894171],[0.598041415214539,-1.43277370929718,0.594023466110229],[1.34842538833618,-1.46761333942413,0.654217779636383],[1.39973640441895,-1.49882984161377,0.19259886443615],[-3.55779266357422,0.00941522140055895,0.039181400090456],[-2.98972725868225,-0.208162873983383,0.630841195583344],[-3.17365741729736,-0.086647242307663,-0.0566643103957176],[-2.7092821598053,-0.15011228621006,-0.516207814216614],[-2.94406628608704,-0.132295787334442,0.292448997497559],[-4.75574398040771,0.399175107479095,0.240632310509682],[-3.76899123191833,0.13903796672821,0.0865144357085228],[-3.50335621833801,0.0430567562580109,-0.122209750115871],[-3.01277375221252,-0.0421661771833897,-0.537622809410095],[-4.88245439529419,0.426137953996658,0.570420026779175],[-5.21013593673706,0.504780888557434,0.496957212686539],[-3.6693480014801,-0.0420929752290249,0.76787656545639],[-0.694477140903473,-0.729185223579407,-0.63669341802597],[-0.
765763282775879,-0.712412178516388,-1.28533864021301],[-0.634110867977142,-0.773483097553253,-0.446338295936584],[-0.56155002117157,-0.750194489955902,-0.703096628189087],[-0.164672270417213,-0.721550107002258,-0.257683575153351],[2.41020178794861,-1.42243719100952,-1.42371928691864],[-3.92429304122925,0.221986219286919,-0.800241589546204],[-4.17056322097778,0.265844792127609,-0.177070364356041],[-3.81394243240356,0.162116065621376,-0.172528013586998],[-3.90985369682312,0.100800178945065,0.0604686848819256],[-0.661333918571472,-0.812219500541687,-0.0718340873718262],[1.27587962150574,-1.39629030227661,-0.895649671554565],[1.31840848922729,-1.30011260509491,-1.17600512504578],[0.959955632686615,-1.2782940864563,-0.0368509739637375],[1.01419341564178,-1.63487219810486,0.985979914665222],[1.08852136135101,-1.37687182426453,-0.813974976539612],[-0.218060702085495,-0.931284010410309,0.558543682098389],[1.2012619972229,-1.22605311870575,0.447774171829224],[-3.1174578666687,-0.00880237855017185,-0.0213093366473913],[-4.66223335266113,0.352794885635376,1.40800082683563],[2.53480505943298,-1.40446949005127,-0.77115523815155],[1.2423278093338,-1.30953097343445,0.333263397216797],[0.509156823158264,-1.26756453514099,0.621764063835144],[0.214239209890366,-1.16362464427948,0.0960156247019768],[-2.91470742225647,-0.228489682078362,0.901013374328613],[0.437750965356827,-1.08682870864868,0.80527925491333],[-0.652873873710632,-0.832958221435547,0.643759906291962],[-3.70873808860779,-0.142764806747437,0.784628510475159],[-0.300282269716263,-0.502968847751617,-0.57269674539566],[-0.49934908747673,-0.511687517166138,-0.38896968960762],[-0.886008381843567,-0.474090695381165,-1.00324058532715],[2.36418771743774,-1.02250528335571,-0.491409748792648],[1.28755617141724,-0.913217902183533,0.00653791753575206],[2.73208785057068,-1.04944062232971,-1.07013690471649],[1.22490239143372,-0.896950006484985,-0.257192581892014],[-1.05191576480865,-0.329893708229065,-1.11493527889252],[-1.018684148788
45,-0.335898071527481,-1.35388255119324],[-0.872001469135284,-0.352103292942047,-0.786509931087494],[-2.67780327796936,0.183905869722366,-0.297244161367416],[-3.35795855522156,0.355644524097443,-0.741319298744202],[-3.16097640991211,0.265496283769608,-0.2752286195755],[-2.81555676460266,0.257331788539886,-1.17229819297791],[-2.79952073097229,0.183410376310349,-0.844387412071228],[1.61917781829834,-0.929834604263306,0.217048853635788],[1.74136853218079,-1.14267480373383,0.472873121500015],[1.27915692329407,-0.995623409748077,0.718361556529999],[2.42982339859009,-1.13419449329376,0.352670729160309],[2.43886137008667,-0.988389015197754,-0.505967557430267],[1.52815222740173,-1.0076402425766,0.834641873836517],[1.43566429615021,-1.1206259727478,0.384551882743835],[1.2088565826416,-0.982539117336273,0.8663729429245],[0.833578586578369,-0.918156325817108,0.490828841924667],[1.12816905975342,-0.921902000904083,0.420566916465759],[2.03001666069031,-1.00169885158539,0.46372389793396],[-0.530352115631104,-0.191865235567093,-0.512185513973236],[-0.888820707798004,-0.10154115408659,-0.588408589363098],[-0.149865210056305,-0.27387061715126,-2.44295191764832],[-0.171201601624489,-0.342555552721024,-1.86819338798523],[-3.86212086677551,0.811911463737488,0.389356136322021],[-3.06409358978271,0.577251851558685,-0.441252797842026],[-2.91337203979492,0.573738694190979,-0.536330163478851],[-3.30957078933716,0.617790579795837,-0.370580762624741],[-0.618859767913818,-0.0438417606055737,-2.34571003913879],[-0.946181178092957,-0.0449847504496574,-1.60618197917938],[-0.951895177364349,-0.0920501872897148,-1.73237764835358],[-0.525528192520142,-0.0881275683641434,-1.6858811378479],[-0.611304461956024,-0.152311503887177,0.0110319619998336],[-1.52100038528442,0.159345984458923,0.211730241775513],[-2.38042449951172,0.0749687328934669,0.529054462909698],[1.74522590637207,-0.713764309883118,0.370351761579514],[1.05002510547638,-0.65961354970932,-0.309255033731461],[-0.392372220754623,-0.2348148673
77281,-0.350129276514053],[1.28275799751282,-0.686908841133118,-0.80171263217926],[0.590707361698151,-0.589166402816772,0.899566769599915],[1.64012551307678,-0.828158736228943,0.0488075241446495],[1.07921922206879,-0.663177907466888,-0.163895577192307],[-0.400218337774277,-0.377271980047226,0.0921517312526703],[1.73639786243439,-0.793743371963501,1.07867431640625],[-0.375043749809265,-0.207419037818909,-0.847505509853363],[0.787424623966217,-0.6565260887146,0.397324681282043],[0.922137200832367,-0.598898410797119,-0.371407985687256],[0.504862248897552,-0.598376154899597,0.452151596546173],[0.501449882984161,-0.551205158233643,1.03882050514221],[2.5537257194519,-0.770110368728638,0.047467116266489],[1.40020740032196,-0.372950464487076,0.453069984912872],[1.5286910533905,-0.516060650348663,-0.0504651628434658],[0.93128764629364,-0.352173179388046,0.508191108703613],[1.74106419086456,-0.517787516117096,-0.279484629631042],[1.51382517814636,-0.485222160816193,0.562565267086029],[-2.62832570075989,0.74480003118515,0.0692759677767754],[-2.85583138465881,0.743003904819489,0.0595913417637348],[-2.2902843952179,0.576608717441559,-0.293020367622375],[-3.12253189086914,0.771693587303162,0.043972983956337],[-0.416789948940277,0.138939127326012,-0.176813080906868],[-0.725705325603485,0.227116927504539,0.0736720785498619],[0.331410080194473,0.0427377112209797,-0.811209082603455],[-0.0108255511149764,0.0973969250917435,-0.875371754169464],[2.81594848632812,-0.590079724788666,-1.67275106906891],[2.37198686599731,-0.63478946685791,-1.80779695510864],[1.81935465335846,-0.547305285930634,1.02402627468109],[2.6057026386261,-0.522914171218872,0.0899576917290688],[-0.485054552555084,0.190710753202438,-1.12332630157471],[-0.0696577876806259,0.103392191231251,-2.35368323326111],[-0.925948858261108,0.218413770198822,-0.664997518062592],[-0.459451913833618,0.0488919541239738,-1.14289522171021],[1.65322172641754,-0.544351398944855,1.72219896316528],[2.25620675086975,-0.469508230686188,0.20382
9482197762],[1.80562508106232,-0.498554229736328,0.223190367221832],[1.09666264057159,-0.35529550909996,0.892665266990662],[0.408276945352554,-0.328851163387299,-0.0498328283429146],[-2.86634469032288,0.596899747848511,-0.0227691140025854],[1.13383054733276,-0.387507915496826,-2.18145775794983],[-0.216612592339516,-0.122141748666763,-0.108152560889721],[-0.83509773015976,0.0912704914808273,-0.826997637748718],[-3.47669649124146,0.93037223815918,0.407299637794495],[-3.12267208099365,0.674432337284088,0.275685489177704],[-2.36101341247559,0.501966595649719,-0.655676782131195],[-2.69355273246765,0.545944035053253,-0.221670135855675],[2.38973355293274,-0.207075104117393,-0.327387481927872],[1.69236755371094,-0.159383475780487,0.825905382633209],[2.92463684082031,-0.20120632648468,-0.165826484560966],[1.24354672431946,-0.187613174319267,0.463806748390198],[2.39630794525146,-0.19422647356987,0.342759817838669],[-2.50928092002869,0.915469467639923,0.372220486402512],[-1.38223052024841,0.778338849544525,-1.9185928106308],[-2.66745042800903,0.950634896755219,-0.179221227765083],[-2.4141845703125,0.91456013917923,-0.685424745082855],[-0.833070278167725,0.405297756195068,-0.711968660354614],[-0.449231147766113,0.414556503295898,-0.747948527336121],[-0.463524580001831,0.40560907125473,-1.13934421539307],[-0.384189456701279,0.399965643882751,-1.57540786266327],[-3.6702868938446,1.21045506000519,0.728805482387543],[-3.32303977012634,1.07357883453369,0.636915445327759],[-3.74322891235352,1.244349360466,0.366106003522873],[-2.83370137214661,1.07917428016663,-0.499167531728745],[1.79458212852478,-0.270544618368149,0.964580118656158],[0.998599588871002,-0.0667160600423813,0.0628614649176598],[1.89816725254059,-0.280044347047806,-0.451196849346161],[1.05578553676605,-0.052497323602438,0.1706532984972],[2.23582291603088,-0.239260986447334,0.101358287036419],[2.04386830329895,-0.106369897723198,0.63676530122757],[2.14069390296936,-0.261312872171402,0.319203943014145],[1.70792722702026,-
0.163670808076859,1.06226706504822],[0.113367348909378,0.134785547852516,0.323094755411148],[0.464767932891846,-0.178119093179703,1.1713935136795],[0.890703499317169,-0.335206687450409,1.02661514282227],[3.63531279563904,0.265626519918442,-0.967379033565521],[2.40468883514404,0.102851569652557,1.23270046710968],[2.89833831787109,-0.0137436995282769,-0.597708940505981],[2.88451838493347,0.237526074051857,-0.0761578306555748],[2.70584535598755,0.0724450275301933,0.565369606018066],[-1.1946474313736,0.927115559577942,-0.453713923692703],[-2.20549750328064,1.18395590782166,0.230918169021606],[-2.04894399642944,1.13272321224213,0.451364547014236],[-0.269348829984665,0.650346219539642,-1.56116497516632],[0.115949794650078,0.511220633983612,-1.08537268638611],[0.0552713684737682,0.436935752630234,-0.300473392009735],[1.08442425727844,0.173388376832008,0.304069012403488],[-0.278847724199295,0.640650033950806,-0.852891623973846],[-0.14658822119236,0.543237745761871,-0.868424415588379],[-0.473483741283417,0.655025839805603,-0.363739460706711],[0.127634152770042,0.478329211473465,-0.606247127056122],[-0.423331141471863,0.658930122852325,-1.41887617111206],[-0.963451683521271,0.678259134292603,-0.18897944688797],[-2.06618165969849,1.08532631397247,0.359598845243454],[-1.56001687049866,0.734336137771606,0.561175167560577],[-2.1433699131012,0.984590590000153,1.01887702941895],[-2.41895580291748,1.22361600399017,-0.10573860257864],[2.11536955833435,0.034282598644495,0.245125666260719],[1.10864329338074,0.202837154269218,0.860335469245911],[1.3112770318985,0.10342963039875,0.796262204647064],[1.81257855892181,0.110583357512951,0.922145307064056],[0.866073369979858,0.0198787841945887,0.469772964715958],[0.857187926769257,0.191609233617783,-0.128001689910889],[1.1673184633255,0.181415677070618,-0.518775463104248],[1.11241388320923,0.0432789847254753,0.58862030506134],[0.465790063142776,0.208165854215622,-0.0585965402424335],[-0.708052575588226,0.428825348615646,0.461341202259064],[0.
68166708946228,0.126797333359718,0.204449251294136],[-0.741165637969971,0.491207629442215,-0.329870849847794],[2.11105608940125,0.0255490988492966,0.888118326663971],[2.10994029045105,0.0154944658279419,0.202769681811333],[-0.450617700815201,0.913258731365204,-0.106484100222588],[0.332823127508163,0.673830986022949,-1.08877992630005],[1.11723756790161,0.386323690414429,-0.44856521487236],[0.032979566603899,0.823394656181335,-1.17362284660339],[-0.349127650260925,0.895388960838318,-0.578369855880737],[-1.92643880844116,1.34498381614685,-0.616165637969971],[-2.02301597595215,1.32812821865082,0.0870473235845566],[-2.51177048683167,1.46471786499023,0.0334950685501099],[-1.98925876617432,1.40811216831207,-0.499030202627182],[-2.69587063789368,1.61406862735748,-0.559521436691284],[-2.54138469696045,1.47049236297607,-0.370182007551193],[-1.53015959262848,1.265545129776,-0.323371678590775],[-2.59182858467102,1.55632388591766,0.21331325173378],[2.13250875473022,0.268170028924942,1.22395598888397],[2.42249274253845,0.318183958530426,0.868075370788574],[2.23674559593201,0.40062153339386,1.2539656162262],[1.41293895244598,0.418580710887909,0.518694818019867],[0.953025639057159,0.796800911426544,-1.63236236572266],[-1.57277369499207,1.58123171329498,-1.150710105896],[2.17808365821838,0.54060310125351,-2.88896942138672],[-0.0435368716716766,1.23334658145905,-2.58725810050964],[1.9785168170929,0.429222464561462,1.44965207576752],[2.19374489784241,0.419648289680481,0.925339043140411],[2.70040059089661,0.257327139377594,-0.516572177410126],[2.3940863609314,0.462692201137543,1.09788620471954],[1.33429896831512,0.516397476196289,0.243179187178612],[0.0531457141041756,0.845101594924927,1.7285373210907],[0.0651683211326599,0.815850853919983,1.1104234457016],[1.36087572574615,0.628420650959015,1.35493397712708],[2.55819940567017,0.85216611623764,1.26600623130798],[3.10662364959717,0.65423858165741,-0.178299382328987],[2.23892378807068,0.581498026847839,0.678542673587799],[2.7453992366790
8,0.655854046344757,0.577490210533142],[1.4239319562912,0.753597378730774,0.0756099820137024],[1.48104476928711,0.734566509723663,-0.555873453617096],[1.44852924346924,0.732450664043427,-1.31763088703156],[0.0629764199256897,1.05855309963226,-1.39265620708466],[2.26520395278931,0.67299872636795,0.668027341365814],[1.46613740921021,0.783235192298889,0.464294731616974],[2.05376744270325,0.715866088867188,-0.131634086370468],[1.95636808872223,0.88053685426712,0.976706266403198],[2.0464174747467,0.651567697525024,0.744299292564392],[3.33543539047241,0.925144672393799,0.371995031833649],[0.957220852375031,0.830361723899841,0.773960947990417],[3.17792463302612,0.766491055488586,-0.23912014067173],[3.79303979873657,0.817247629165649,-1.00487554073334],[3.83179903030396,0.854479432106018,-1.7633900642395],[2.20270204544067,1.05322241783142,-0.991101682186127],[2.06759715080261,0.884931147098541,-1.85712778568268],[2.89428162574768,0.826799094676971,1.72218441963196],[2.60867619514465,0.618459820747375,-0.0953152477741241],[2.35979557037354,0.415106415748596,0.730256021022797],[0.0049392944201827,1.30745565891266,1.81213712692261],[1.38367700576782,0.347695618867874,1.51626420021057],[1.86413407325745,0.841073095798492,0.836453258991241],[2.26736831665039,0.650562584400177,0.147665530443192],[1.58600115776062,0.890777587890625,0.368391692638397],[1.49136328697205,0.939045488834381,0.059490405023098],[1.14294576644897,0.945273995399475,0.71606707572937],[0.0331991203129292,1.21861708164215,1.08886384963989],[1.49086737632751,0.944253146648407,1.40142428874969],[3.19055223464966,0.854468524456024,0.549984693527222],[2.98069930076599,0.910176634788513,0.689320147037506],[2.87128734588623,0.759726941585541,0.682474851608276],[2.6128077507019,0.805012226104736,-0.0438733287155628],[3.13284945487976,0.876556515693665,-0.375115096569061],[2.93168330192566,0.920495271682739,0.286485016345978],[2.57137274742126,0.827956140041351,0.634442448616028],[2.37376236915588,0.920524418354034,
0.900164723396301],[2.58231949806213,0.856689751148224,0.540130853652954],[2.6541736125946,0.814721465110779,-1.17414057254791],[2.10611367225647,0.895007073879242,1.12741661071777],[2.13353562355042,0.923996984958649,1.08171617984772],[2.24271512031555,0.922249376773834,0.215298846364021],[1.61276996135712,1.08496737480164,0.892892479896545],[2.17963814735413,0.991119921207428,-0.405311524868011],[1.77586889266968,1.0999618768692,-1.38771426677704],[1.39963567256927,1.38316512107849,-1.20487058162689],[-0.000582694308832288,1.31990766525269,1.1258373260498],[0.0938129797577858,1.26396143436432,0.726708710193634],[-0.266738951206207,1.46386992931366,-0.263422161340714],[-0.794305980205536,2.07586073875427,-1.56120562553406],[0.297165811061859,1.20625329017639,-0.708729565143585],[-0.154116898775101,1.28221333026886,-0.801354050636292],[2.0862078666687,1.14869272708893,-0.85563462972641],[1.91487061977386,1.13398694992065,-0.576171219348907],[2.32940864562988,1.25613212585449,-0.0715694054961205],[1.93571138381958,1.22217702865601,0.340661823749542],[1.75404191017151,1.19390368461609,0.329306334257126],[1.62464308738708,1.2322803735733,-0.441344499588013],[1.26617383956909,1.17670798301697,-0.0450324974954128],[2.53740620613098,1.16309559345245,0.948479771614075],[2.98929309844971,1.15674304962158,0.014771050773561],[2.64808225631714,0.984768211841583,0.0291957072913647],[2.62761735916138,1.23158621788025,1.11304175853729],[2.7459123134613,1.17547202110291,0.242832571268082],[2.15173125267029,1.26958668231964,1.19634401798248],[2.3259265422821,1.23029339313507,1.13686966896057],[2.5030677318573,1.17247867584229,0.257847398519516],[2.75008368492126,1.17323482036591,1.09896659851074],[2.51630878448486,1.01252293586731,0.674579083919525],[2.85937929153442,1.17813766002655,0.700660407543182],[0.424370437860489,1.56767439842224,-0.161196708679199],[1.05766975879669,2.01329588890076,-0.137671336531639],[1.2428389787674,1.18800508975983,0.711766541004181],[-0.08177974820137
02,1.55573451519012,0.286072462797165],[1.45323300361633,1.35780036449432,1.1069073677063],[1.87687039375305,1.34591937065125,1.58176231384277],[1.44755399227142,1.2909187078476,-0.284574687480927],[1.43905866146088,1.22507536411285,0.310798048973083],[4.10722541809082,1.32868552207947,-1.937251329422],[1.56481635570526,1.22365701198578,1.92763459682465],[2.03923630714417,1.15036725997925,-0.585685014724731],[2.19971966743469,1.25786578655243,-0.762552499771118]],"ignoreExtent":false,"fastTransparency":true,"flags":32771},"37":{"id":37,"type":"text","material":{"lit":false,"margin":0,"floating":true,"edge":[0,1,1]},"vertices":[["NaN",4,1]],"colors":[[0,0,0,1]],"texts":[["PC1"]],"cex":[[1]],"adj":[[0.5,0.5]],"centers":[["NaN",4,1]],"family":[["sans"]],"font":[[1]],"ignoreExtent":true,"flags":33808},"38":{"id":38,"type":"text","material":{"lit":false,"margin":1,"floating":true,"edge":[1,1,1]},"vertices":[["NaN",4,1]],"colors":[[0,0,0,1]],"texts":[["PC2"]],"cex":[[1]],"adj":[[0.5,0.5]],"centers":[["NaN",4,1]],"family":[["sans"]],"font":[[1]],"ignoreExtent":true,"flags":33808},"39":{"id":39,"type":"text","material":{"lit":false,"margin":2,"floating":true,"edge":[1,1,1]},"vertices":[["NaN",4,1]],"colors":[[0,0,0,1]],"texts":[["PC3"]],"cex":[[1]],"adj":[[0.5,0.5]],"centers":[["NaN",4,1]],"family":[["sans"]],"font":[[1]],"ignoreExtent":true,"flags":33808},"11":{"id":11,"type":"light","vertices":[[0,0,1]],"colors":[[1,1,1,1],[1,1,1,1],[1,1,1,1]],"viewpoint":true,"finite":false},"12":{"id":12,"type":"background","material":{"lit":false,"back":"lines"},"colors":[[1,1,1,1]],"centers":[[0,0,0]],"sphere":false,"fogtype":"none","fogscale":1,"flags":32768},"36":{"id":36,"type":"bboxdeco","material":{"front":"lines","back":"lines"},"vertices":[[-4,"NA","NA"],[-2,"NA","NA"],[0,"NA","NA"],[2,"NA","NA"],[4,"NA","NA"],["NA",-2,"NA"],["NA",-1,"NA"],["NA",0,"NA"],["NA",1,"NA"],["NA",2,"NA"],["NA","NA",-2],["NA","NA",-1],["NA","NA",0],["NA","NA",1],["NA","NA",2]],"colors":[[0,0,0,1]],"axe
s":{"mode":["pretty","pretty","pretty"],"step":[2,1,1],"nticks":[5,5,5],"marklen":[15,15,15],"expand":[1.02999997138977,1.02999997138977,1.02999997138977]},"draw_front":true},"7":{"id":7,"type":"subscene","par3d":{"antialias":8,"FOV":30,"ignoreExtent":false,"listeners":7,"mouseMode":{"none":"none","left":"trackball","right":"zoom","middle":"fov","wheel":"pull"},"observer":[0,0,28.6334247589111],"modelMatrix":[[0.70168662071228,0,0,0.386948764324188],[0,0.523883283138275,1.27550148963928,0.643624365329742],[0,-1.43935739994049,0.464244604110718,-28.4941654205322],[0,0,0,1]],"projMatrix":[[3.73205089569092,0,0,0],[0,3.3827588558197,0,0],[0,0,-3.86370348930359,-103.220191955566],[0,0,-1,0]],"skipRedraw":false,"userMatrix":[[1,0,0,0],[0,0.342020143325668,0.939692620785909,0],[0,-0.939692620785909,0.342020143325668,0],[0,0,0,1]],"userProjection":[[1,0,0,0],[0,1,0,0],[0,0,1,0],[0,0,0,1]],"scale":[0.70168662071228,1.53173220157623,1.35736036300659],"viewport":{"x":0,"y":0,"width":1,"height":1},"zoom":1,"bbox":[-5.36542510986328,4.26251459121704,-2.26356077194214,2.1469988822937,-2.96924614906311,2.00791144371033],"windowRect":[1287,37,2546,1426],"family":"sans","font":1,"cex":1,"useFreeType":true,"fontname":"/home/jrl/R/x86_64-pc-linux-gnu-library/4.1/rgl/fonts/FreeSans.ttf","maxClipPlanes":8,"glVersion":4.6,"activeSubscene":0},"embeddings":{"viewport":"replace","projection":"replace","model":"replace","mouse":"replace"},"objects":[12,36,35,37,38,39,11],"subscenes":[],"flags":34067}},"crosstalk":{"key":[],"group":[],"id":[],"options":[]},"width":800,"height":450,"context":{"shiny":false,"rmarkdown":"_output.yml"},"players":[],"webGLoptions":{"preserveDrawingBuffer":true}},"evals":[],"jsHooks":[]}</script>

---

## Cluster plot

When we apply K-means clustering, we do not obtain a nested structure and therefore cannot represent the clustering as a dendrogram.
Instead, we can plot the locations of objects and cluster centroids in two dimensions.
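The following is a minimal sketch of how such a cluster plot could be computed. It is written in Python with only the standard library and rests on some assumptions. The variables are taken to be numeric and already on comparable scales (e.g. standardized). The helper names `kmeans()` and `pca_2d()` and the toy data are hypothetical.

`kmeans()` uses Lloyd's algorithm. It repeats the algorithm from several random starting values and keeps the run with the smallest total within-cluster sum of squares. R's `kmeans()` defaults to the Hartigan-Wong algorithm (its `iter.max` and `nstart` arguments set the iteration cap and the number of starts), so its results can differ from this sketch.

`pca_2d()` finds the first two principal components by power iteration. It returns a projection that is applied to both the objects and the centroids.

```python
import math
import random

# A sketch under assumptions: hypothetical helper names and toy data,
# not the code used to produce the plots in these slides.


def sq_dist(a, b):
    """Squared Euclidean distance between two equal-length vectors."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def mean_vector(rows):
    """Column-wise mean of a non-empty list of equal-length vectors."""
    return [sum(col) / len(rows) for col in zip(*rows)]


def kmeans(points, k, iter_max=10, n_start=10, seed=None):
    """Lloyd's K-means repeated from n_start random starts.

    Returns (labels, centroids, total within-cluster sum of squares)
    for the start with the smallest total within-cluster sum of squares.
    """
    rng = random.Random(seed)
    best = None
    for _ in range(n_start):
        # Starting values: k distinct objects chosen at random as centroids.
        centroids = [list(p) for p in rng.sample(points, k)]
        labels = None
        for _ in range(iter_max):
            # Assignment step: each object joins its nearest centroid.
            new_labels = [min(range(k), key=lambda j: sq_dist(p, centroids[j]))
                          for p in points]
            if new_labels == labels:
                break  # no object changed cluster, so the run has converged
            labels = new_labels
            # Update step: move each centroid to the mean of its members.
            for j in range(k):
                members = [p for p, lab in zip(points, labels) if lab == j]
                if members:  # an empty cluster keeps its previous centroid
                    centroids[j] = mean_vector(members)
        wss = sum(sq_dist(p, centroids[lab]) for p, lab in zip(points, labels))
        if best is None or wss < best[2]:
            best = (labels, centroids, wss)
    return best


def pca_2d(points, n_iter=500):
    """Return a function that projects a vector onto the first two principal
    components of `points`, found by power iteration with deflation on the
    covariance matrix (illustrative, not a numerically robust PCA)."""
    d = len(points[0])
    mu = mean_vector(points)
    centred = [[x - m for x, m in zip(p, mu)] for p in points]
    n = len(centred)
    cov = [[sum(r[i] * r[j] for r in centred) / (n - 1) for j in range(d)]
           for i in range(d)]
    components = []
    for _ in range(2):
        v = [1.0 + 0.1 * i for i in range(d)]  # arbitrary non-zero start
        s = math.sqrt(sum(x * x for x in v))
        v = [x / s for x in v]
        for _ in range(n_iter):
            w = [sum(cov[i][j] * v[j] for j in range(d)) for i in range(d)]
            norm = math.sqrt(sum(x * x for x in w))
            if norm == 0.0:
                break  # no variance left to extract in this direction
            v = [x / norm for x in w]
        # Deflation: remove the variance along v before finding the next one.
        lam = sum(v[i] * cov[i][j] * v[j] for i in range(d) for j in range(d))
        cov = [[cov[i][j] - lam * v[i] * v[j] for j in range(d)]
               for i in range(d)]
        components.append(v)

    def project(p):
        diff = [x - m for x, m in zip(p, mu)]
        return [sum(c * x for c, x in zip(comp, diff)) for comp in components]

    return project


# Toy data: two well-separated groups of three objects on three variables.
data = [[0.0, 0.0, 0.0], [0.1, 0.2, 0.0], [0.2, 0.1, 0.1],
        [5.0, 5.0, 5.0], [5.1, 4.9, 5.2], [4.9, 5.2, 5.1]]
labels, centroids, wss = kmeans(data, k=2, iter_max=10, n_start=10, seed=1)
project = pca_2d(data)
object_xy = [project(p) for p in data]         # coordinates of the objects
centroid_xy = [project(c) for c in centroids]  # coordinates of the centroids
print(labels, round(wss, 3))
```

To draw the cluster plot, plot `object_xy` coloured by `labels` and mark `centroid_xy` separately. Trying several starting values guards against a single K-means run ending in a poor local optimum that depends on its initial centroids.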
The two dimensions can be found via principal component analysis (PCA) or multidimensional scaling (MDS).

---

Here is the final clustering if we run 10 iterations and use 10 different sets of starting values.

---

class: center middle inverse

# How to use clusters?

---

Save the cluster memberships as a new variable.

.smaller[
| mpg| cylinders| displacement| horsepower| weight| acceleration| year| origin|name | Cluster|
|---:|---------:|------------:|----------:|------:|------------:|----:|------:|:-------------------------|-------:|
| 18| 8| 307| 130| 3504| 12.0| 70| 1|chevrolet chevelle malibu | 3|
| 15| 8| 350| 165| 3693| 11.5| 70| 1|buick skylark 320 | 3|
| 18| 8| 318| 150| 3436| 11.0| 70| 1|plymouth satellite | 3|
| 16| 8| 304| 150| 3433| 12.0| 70| 1|amc rebel sst | 3|
| 17| 8| 302| 140| 3449| 10.5| 70| 1|ford torino | 3|
| 15| 8| 429| 198| 4341| 10.0| 70| 1|ford galaxie 500 | 3|
]

---

You can describe clusters using descriptive statistics, e.g. the mean values below.

.small[
| Cluster| mpg| cylinders| displacement| horsepower| weight| acceleration| year|
|-------:|----:|---------:|------------:|----------:|------:|------------:|----:|
| 1| 19.8| 6.05| 219| 103.1| 3222| 16.2| 76.2|
| 2| 25.3| 3.99| 108| 82.0| 2301| 16.4| 73.5|
| 3| 14.5| 8.00| 349| 161.8| 4151| 12.6| 73.6|
| 4| 32.6| 4.04| 112| 74.8| 2327| 16.8| 80.0|
]

---

Or create some visualizations.

---

class: center middle inverse

# Practical application

---

Use the data set `UN98`.
Cluster countries according to social indicators using hierarchical clustering.

> How many clusters seem natural?

--

> Can the clusters be explained by world regions?

---

Use the data set `HousePrices`.
Cluster houses using the K-means method.

> How many clusters should be extracted?

--

> Describe each cluster.

---

class: inverse